1 Introduction to AI agents and applications

Large language models have evolved from curiosities to core building blocks for modern software, powering applications that understand, generate, and reason over natural language. This chapter frames the landscape around three archetypes—LLM-powered engines, conversational chatbots, and autonomous or semi-autonomous agents—and explains why building them is hard without the right abstractions. It introduces the LangChain ecosystem (LangChain, LangGraph, and LangSmith) as modular tooling that reduces boilerplate, promotes best practices, and lets teams focus on product logic while safely orchestrating models, data, and tools.

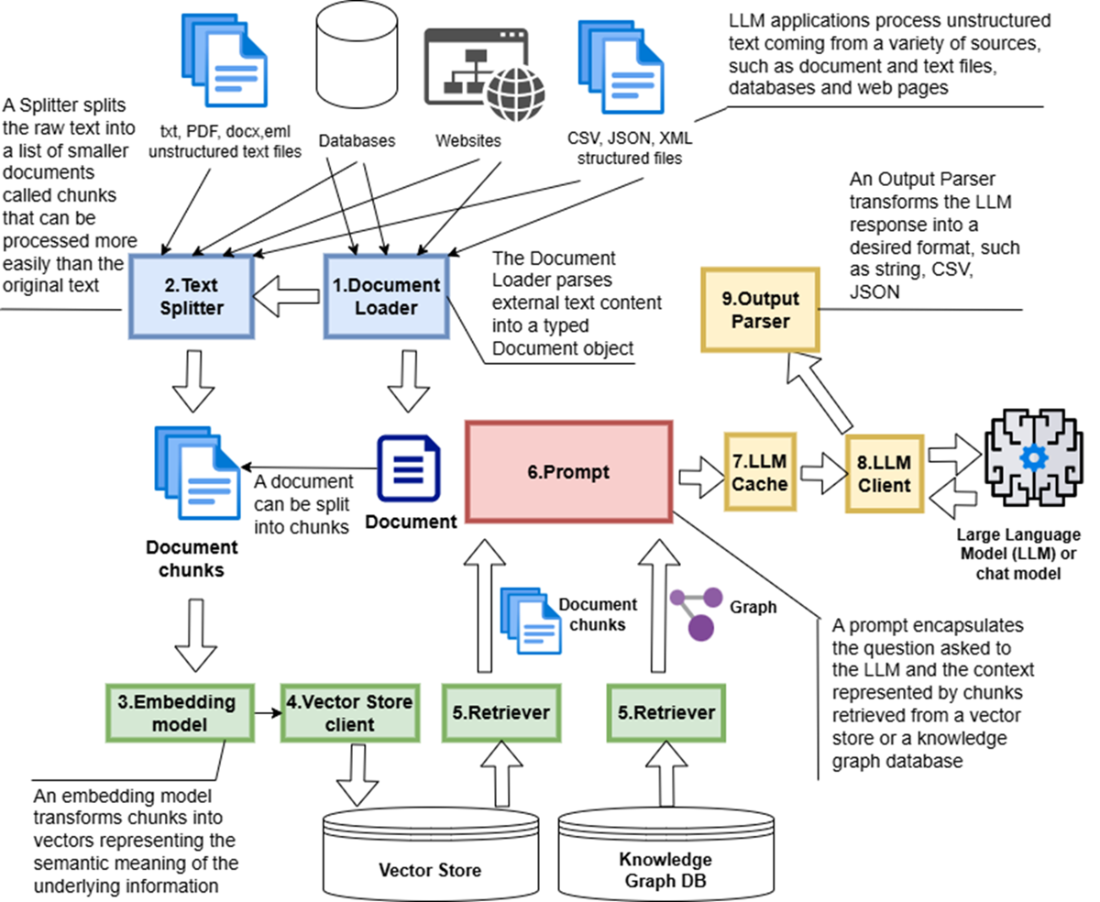

Under the hood, most systems follow a common pattern: ingest data into Documents, split into chunks, embed into vectors, store in a vector database, retrieve relevant context, and craft prompts for an LLM. Retrieval-Augmented Generation anchors answers in trustworthy data, while prompt templates, role messaging, and conversation memory shape chatbot behavior. Agents extend these ideas with iterative tool use, planning, and control flow, often adding human-in-the-loop checks for high-stakes tasks. The chapter outlines LangChain’s principles—modularity, composability, extensibility—and key components (loaders, splitters, embeddings, vector stores, retrievers, prompts, caches, parsers), the Runnable/LCEL pattern for reliable pipelines, and graph-based orchestration with LangGraph. It also highlights the growing standardization of tool access via the Model Context Protocol, which simplifies integrating external capabilities.

To adapt models to real needs, the chapter compares prompt engineering, RAG, and fine-tuning, emphasizing that grounded retrieval often beats retraining for cost, speed, and maintainability, while fine-tuning remains valuable for highly specialized domains. It surveys common LLM use cases and offers guidance on model selection across accuracy, latency, cost, context size, specialization, and openness. Finally, it previews the book’s hands-on path: building engines and RAG chatbots, progressing to advanced LangGraph agents, and instrumenting with LangSmith—equipping readers to design, evaluate, and ship production-grade AI applications.

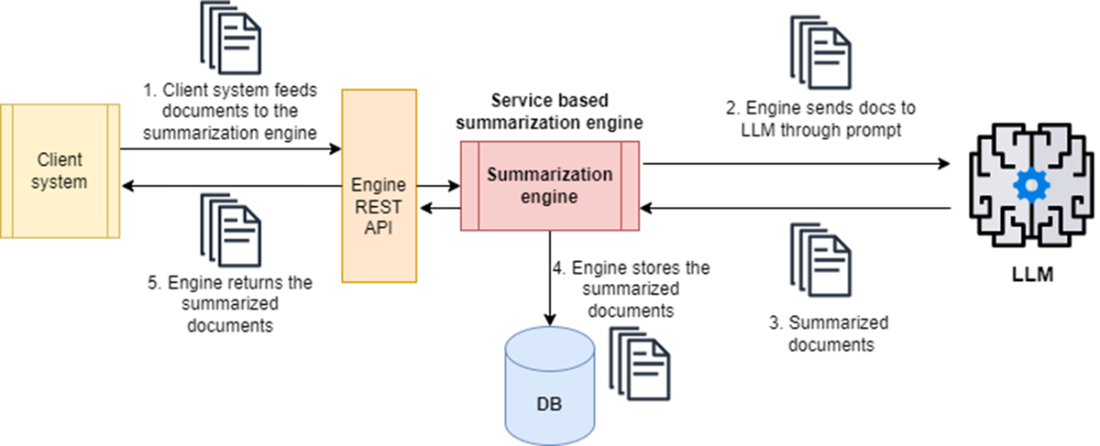

A summarization engine efficiently summarizes and stores content from large volumes of text and can be invoked by other systems through REST API.

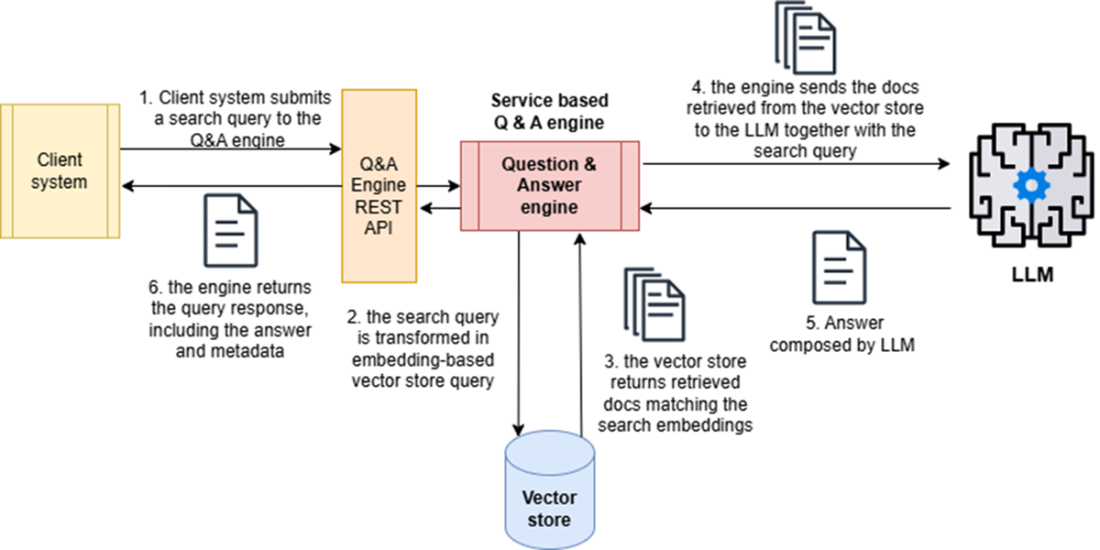

A Q&A engine implemented with RAG design: an LLM query engine stores domain-specific document information in a vector store. When an external system sends a query, it converts the natural language question into its embeddings (or vector) representation, retrieves the related documents from the vector store, and then gives the LLM the information it needs to craft a natural language response.

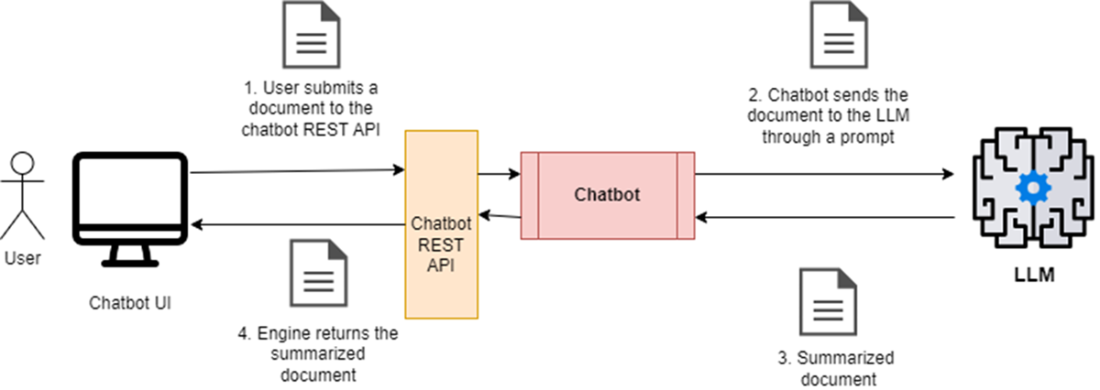

A summarization chatbot has some similarities with a summarization engine, but it offers an interactive experience where the LLM and the user can work together to fine-tune and improve the results.

Sequence diagram that outlines how a user interacts with an LLM through a chatbot to create a more concise summary.

Workflow of an AI agent tasked with assembling holiday packages: An external client system sends a customized holiday request in natural language. The agent prompts the LLM to select tools and formulate queries in technology-specific formats. The agent executes the queries, gathers the results, and sends them back to the LLM, along with the original request, to obtain a comprehensive holiday package summary. Finally, the agent forwards the summarized package to the client system.

LangChain architecture: The Document Loader imports data, which the Text Splitter divides into chunks. These are vectorized by an Embedding Model, stored in a Vector Store, and retrieved through a Retriever for the LLM. The LLM Cache checks for prior requests to return cached responses, while the Output Parser formats the LLM's final response.

Object model of classes associated with the Document core entity, including Document loaders (which create Document objects), splitters (which create a list of Document objects), vector stores (which store Document objects in vector stores) and retrievers (which retrieve Document objects from vector stores and other sources)

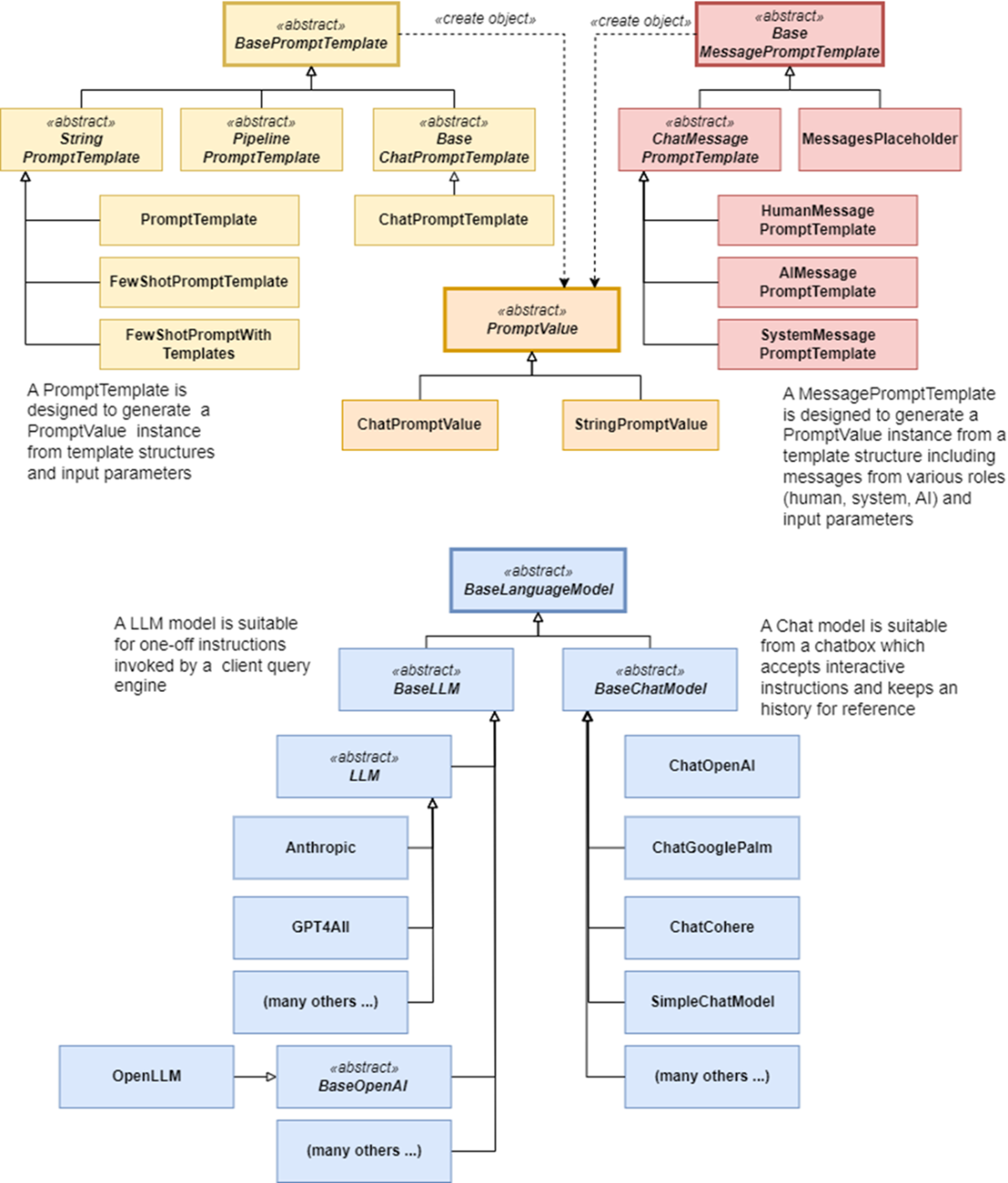

Object model of classes associated with Language Models, including Prompt Templates and Prompt Values

A collection of documents is split into text chunks and transformed into vector-based embeddings. Both text chunks and related embeddings are then stored in a vector store.

Summary

- LLMs have rapidly evolved into core building blocks for modern applications, enabling tasks like summarization, semantic search, and conversational assistants.

- Without frameworks, teams often reinvent the wheel—managing ingestion, embeddings, retrieval, and orchestration with brittle, one-off code. LangChain addresses this by standardizing these patterns into modular, reusable components.

- LangChain’s modular architecture builds on loaders, splitters, embedding models, retrievers, and vector stores, making it straightforward to build engines such as summarization and Q&A systems.

- Conversational use cases demand more than static pipelines. LLM-based chatbots extend engines with dialogue management and memory, allowing adaptive, multi-turn interactions.

- Beyond chatbots, AI agents represent the most advanced type of LLM application.

- Agents orchestrate multi-step workflows and tools under LLM guidance, with frameworks like LangGraph designed to make this practical and maintainable.

- Retrieval-Augmented Generation (RAG) is a foundational pattern that grounds LLM outputs in external knowledge, improving accuracy while reducing hallucinations and token costs.

- Prompt engineering remains a critical skill for shaping LLM behavior, but when prompts alone aren’t enough, RAG or even fine-tuning can extend capabilities further.

AI Agents and Applications ebook for free

AI Agents and Applications ebook for free