1 The rise of AI agents

AI agents extend the longstanding notion of an “agent” in AI: an entity that can act on someone’s behalf. Unlike classic assistants that wait for step-by-step approval, agents exhibit real agency—they interpret goals, reason, plan, and execute multi-step work autonomously through tools. This chapter sets the stage for building practical, LLM-powered agents and agentic systems that connect to databases, APIs, knowledge stores, and full applications using modern frameworks such as the OpenAI Agents SDK and the Model Context Protocol (MCP), helping readers progress from prompt tinkering to production-grade agent architecture.

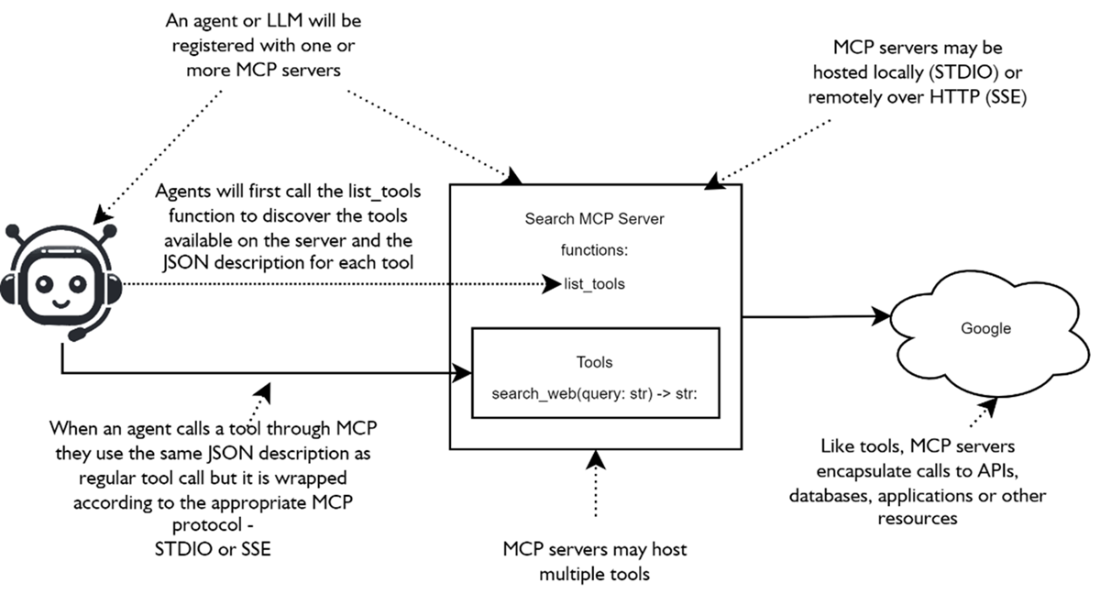

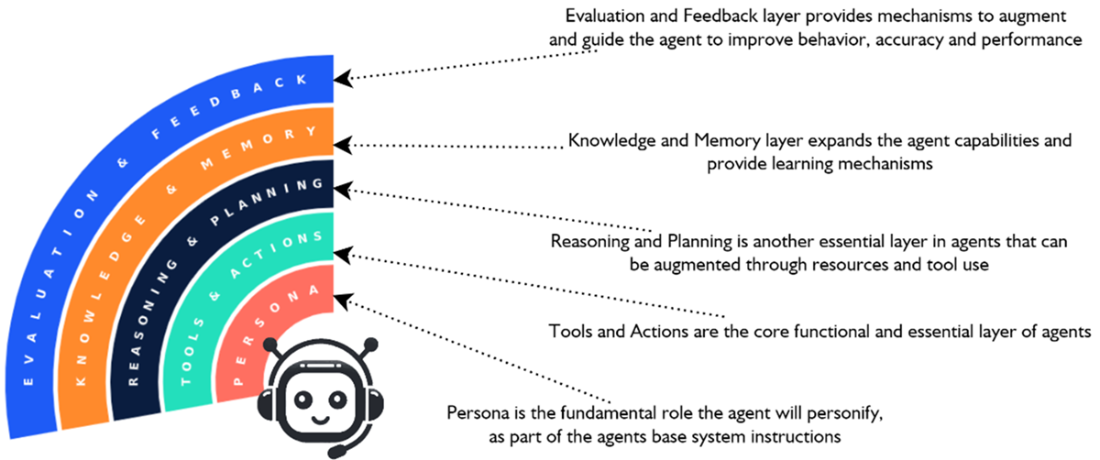

Mechanically, agents decompose goals into tasks mapped to tools and iterate through a sense–plan–act–learn loop, leveraging today’s models’ built-in reasoning and optional planning strategies for greater control. Tools are registered and invoked like functions, often wrapping APIs or external systems; MCP—an open, JSON-RPC–based standard—unifies discovery and use of external tool servers, smoothing integrations across models, improving reliability, and enabling language-agnostic extensibility. The chapter also introduces five functional layers—Persona, Actions & Tools, Reasoning & Planning, Knowledge & Memory (commonly via RAG), and Evaluation & Feedback—that can be selectively combined to shape behavior, ground responses, and harden outputs.

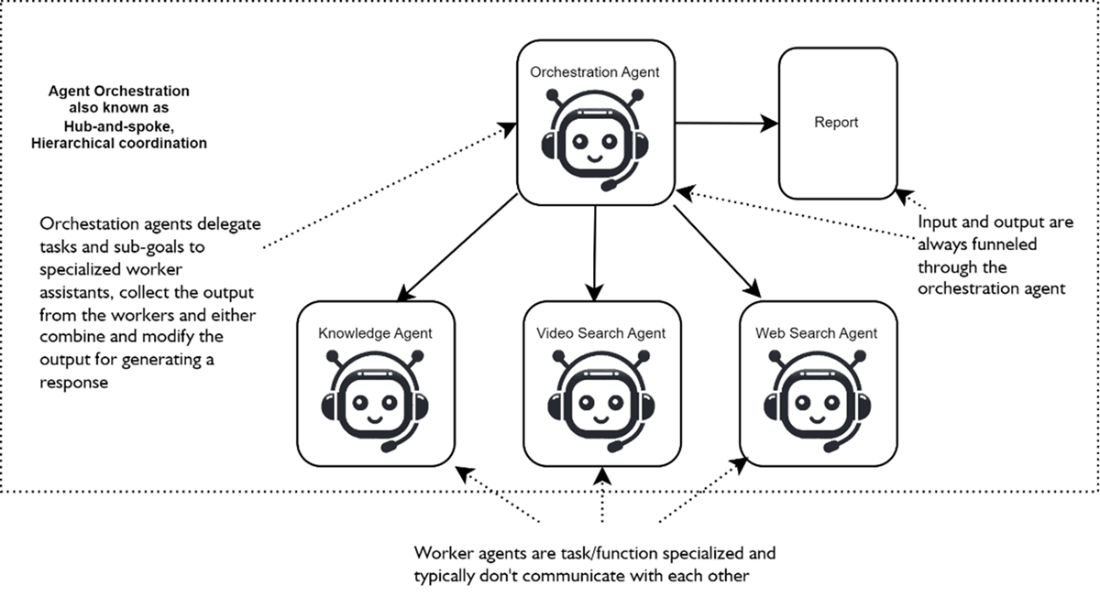

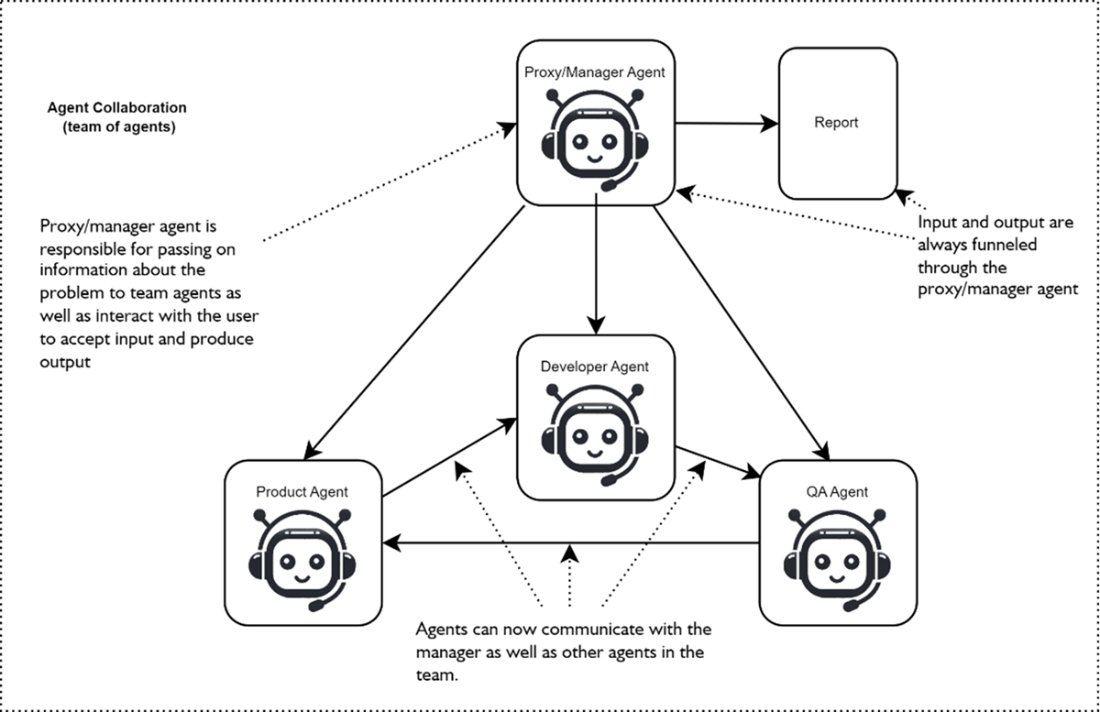

To solve problems that exceed a single agent’s capacity, the chapter surveys multi-agent patterns. Agent-flow (assembly-line) chains specialized agents in a defined sequence for well-scoped, multi-step tasks; hub-and-spoke orchestrations place a central agent in charge of planning and delegation to specialized workers while keeping I/O centralized; and collaborative teams allow peer agents to critique and build on each other’s work, trading efficiency for depth and creativity. These patterns can be mixed; the book emphasizes flow and orchestration with the OpenAI Agents SDK and later explores collaboration-oriented frameworks such as AutoGen and CrewAI.

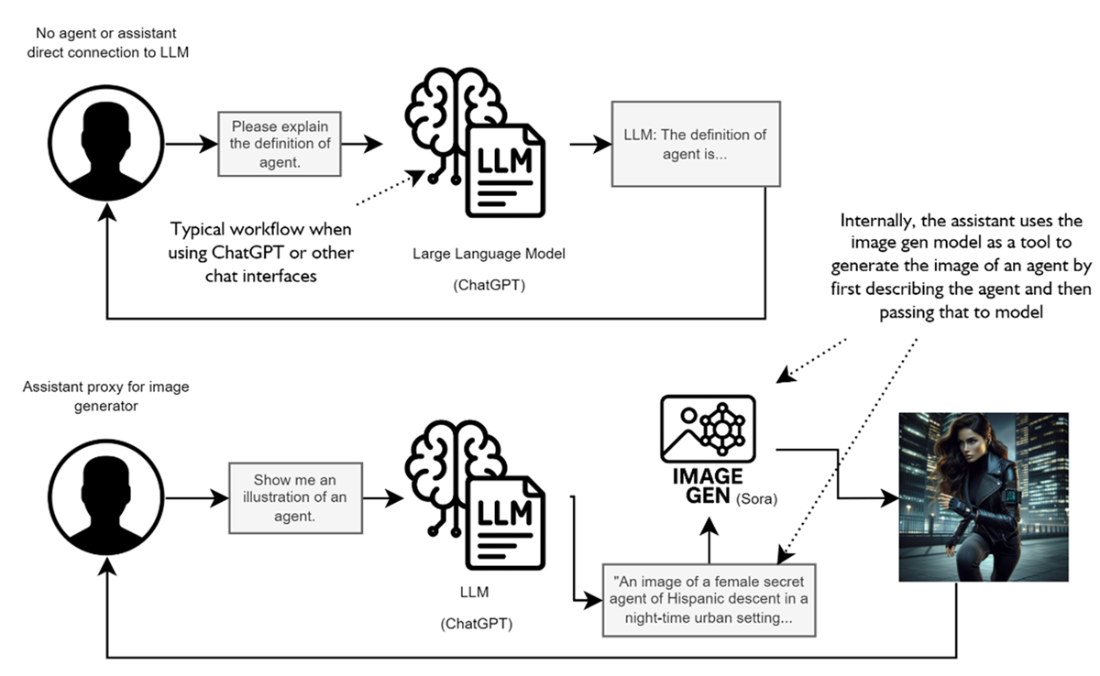

Common patterns for communicating directly with an LLM or with an LLM that has tools. If you used earlier versions of ChatGPT, you experienced direct interaction with the LLM: no proxy agent or other assistant interjected on your behalf. Today, ChatGPT itself uses plenty of tools to help it respond, from web search to coding and so on, making the current version function like an assistant.

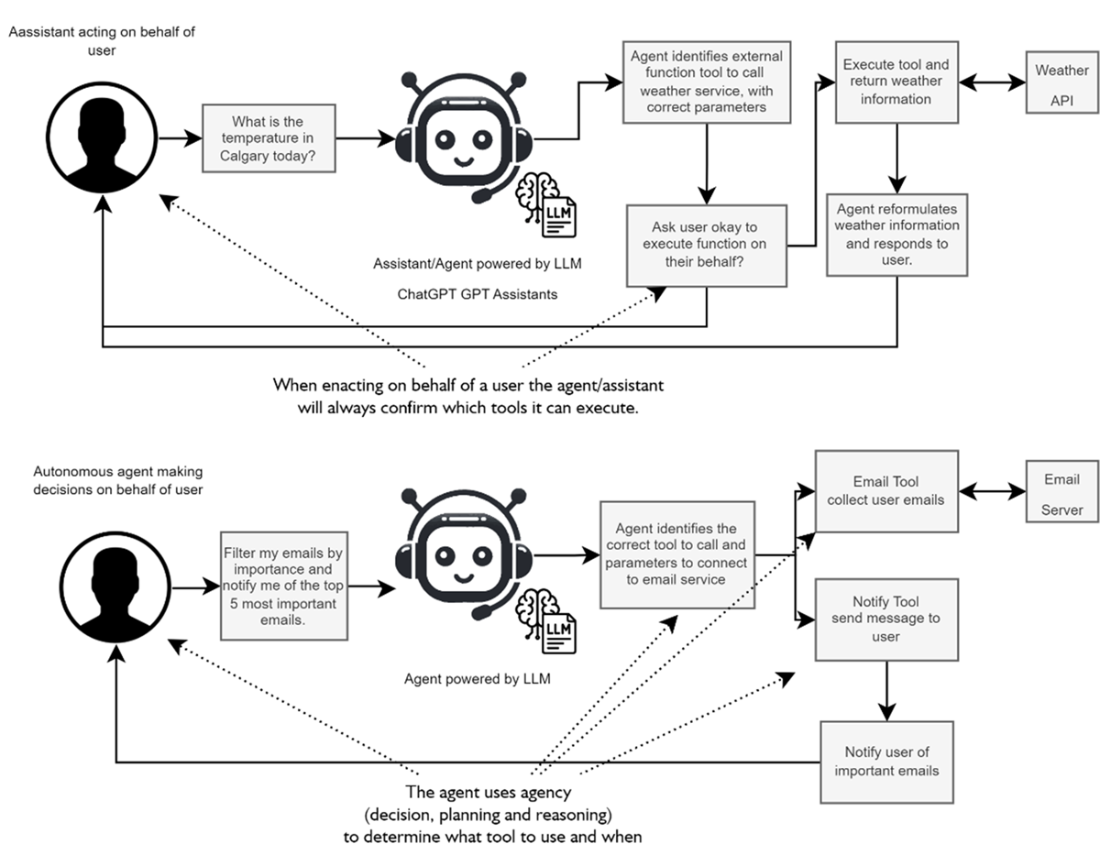

Top: an assistant performs one or more tasks on behalf of a user, where each task requires the user's approval. Bottom: an agent may use multiple tools autonomously, without human approval, to complete a goal.
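The difference between the two modes can be sketched in a few lines of Python. This is an illustrative toy, not code from any framework: `run_tasks`, `approve`, and the stub tasks are hypothetical names, and the tasks are plain callables rather than real tool calls.

```python
from typing import Callable, Optional

# Hypothetical illustration: tasks are plain callables, not real tool calls.
Task = Callable[[], str]


def run_tasks(
    tasks: dict[str, Task],
    approve: Optional[Callable[[str], bool]] = None,
) -> list[str]:
    """Run tasks in order.

    With an `approve` callback, each task needs sign-off first (assistant style).
    With approve=None, every task runs without asking (agent style).
    """
    results = []
    for name, task in tasks.items():
        if approve is not None and not approve(name):
            results.append(f"{name}: skipped (not approved)")
            continue
        results.append(f"{name}: {task()}")
    return results


tasks = {
    "search": lambda: "found 3 articles",
    "summarize": lambda: "wrote summary",
}

# Assistant: the user approves only the search task.
assistant_results = run_tasks(tasks, approve=lambda name: name == "search")
# Agent: no approval step, so all tasks run.
agent_results = run_tasks(tasks)
```

The only structural difference is the approval gate; everything else about running the tasks is shared.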

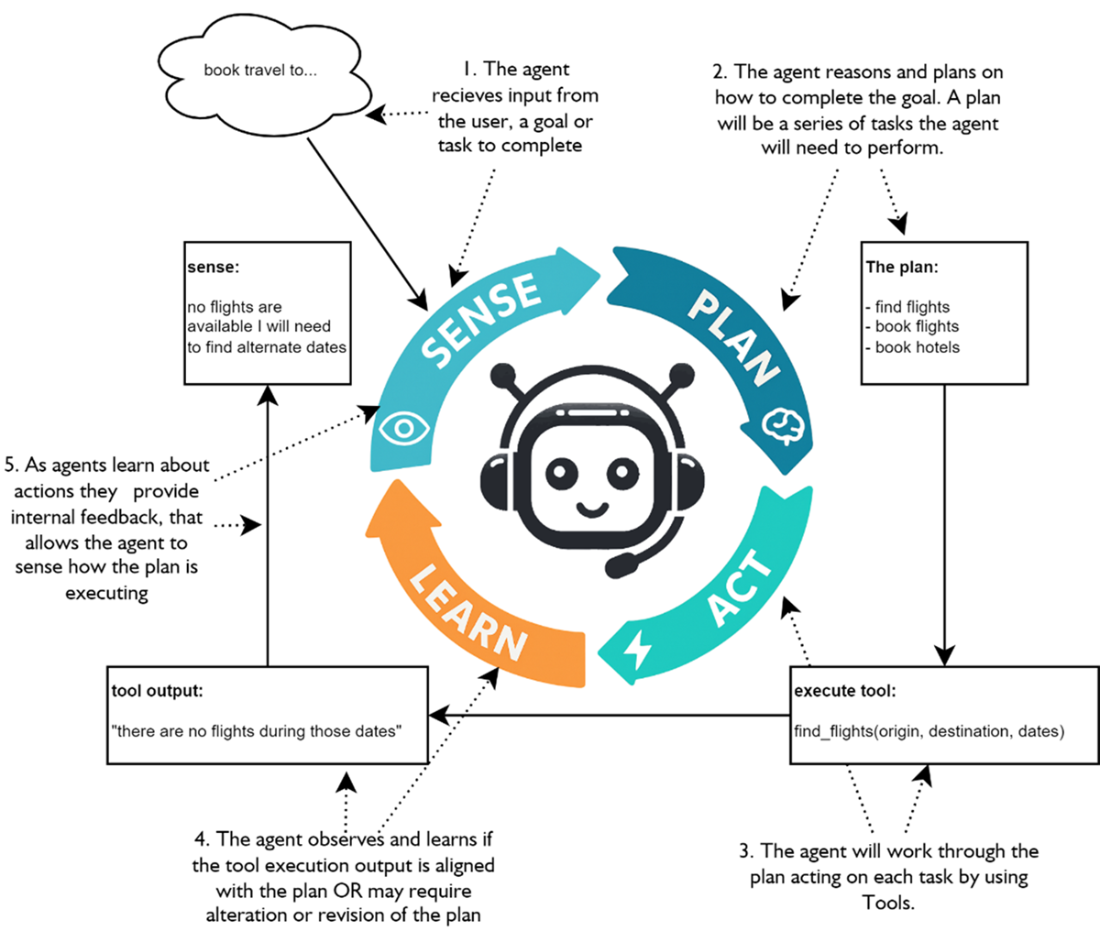

The four-step process agents use to complete goals: Sense (receive input, either a goal or feedback) -> Plan (define the task list that completes the goal) -> Act (execute the tool defined by the task) -> Learn (observe the output of the task and determine whether the goal is complete or the process needs to continue). If the process needs to continue, the loop repeats.
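As a rough sketch of this loop, the toy class below walks through the four steps in plain Python. The class name `LoopAgent`, the fixed two-step plan, and the stub tools are assumptions made for illustration; in a real agent the planning and learning steps would be driven by an LLM.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class LoopAgent:
    """Toy agent; the planner is a fixed list standing in for an LLM."""
    tools: dict[str, Callable[[str], str]]
    log: list[str] = field(default_factory=list)

    def sense(self, goal: str) -> str:
        self.log.append(f"sense: {goal}")
        return goal

    def plan(self, goal: str) -> list[str]:
        # Assumption: every goal maps to the same two-step task list.
        tasks = ["lookup", "format"]
        self.log.append(f"plan: {tasks}")
        return tasks

    def act(self, task: str, data: str) -> str:
        output = self.tools[task](data)
        self.log.append(f"act: {task}")
        return output

    def learn(self, remaining: list[str]) -> bool:
        # The goal counts as complete once no planned tasks remain.
        done = not remaining
        self.log.append(f"learn: done={done}")
        return done

    def run(self, goal: str) -> str:
        data = self.sense(goal)
        remaining = self.plan(data)
        while True:
            task = remaining.pop(0)
            data = self.act(task, data)
            if self.learn(remaining):
                return data


agent = LoopAgent(tools={
    "lookup": lambda text: text + " -> 42",
    "format": lambda text: f"[{text}]",
})
result = agent.run("answer")
```

After the run, `agent.log` records the order in which the steps fired, which makes the act/learn repetition visible.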

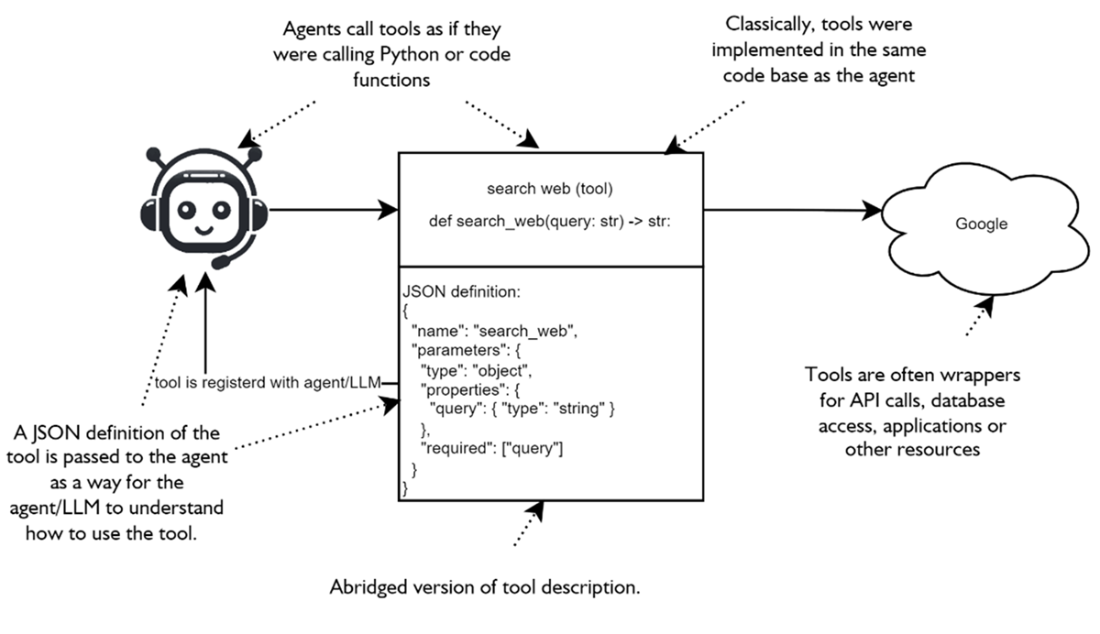

For an agent to use a tool, that tool must first be registered with the agent in the form of a JSON description (definition). Once the tool is registered, the agent uses it in a process not unlike calling a function in Python.
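A minimal sketch of registration and invocation, assuming a JSON-Schema-style description with `name`, `description`, and `parameters` fields. The exact schema differs between providers and SDKs, and `register_tool`, `call_tool`, and `get_weather` are hypothetical helpers, not part of any library.

```python
import json


def get_weather(city: str) -> str:
    """Stub tool: returns canned data instead of calling a real API."""
    return f"Sunny in {city}"


# Assumed description shape: name, description, JSON Schema parameters.
weather_description = {
    "name": "get_weather",
    "description": "Return the current weather for a city.",
    "parameters": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}

registry = {}


def register_tool(description, func):
    """Store the description (shown to the model) next to the callable."""
    registry[description["name"]] = {"description": description, "func": func}


def call_tool(name, arguments_json):
    """Invoke a registered tool with JSON-encoded arguments, as a model would supply them."""
    entry = registry[name]
    args = json.loads(arguments_json)
    required = entry["description"]["parameters"]["required"]
    missing = [param for param in required if param not in args]
    if missing:
        raise ValueError(f"missing arguments: {missing}")
    return entry["func"](**args)


register_tool(weather_description, get_weather)
result = call_tool("get_weather", '{"city": "Lisbon"}')
```

The model only ever sees the description; the registry is what maps the name it chooses back to an actual Python function.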

An agent connects to an MCP server to discover the tools it hosts and the description of how to use each one. When an MCP server is registered with an agent, the agent internally calls list_tools to find all the tools the server supports and their descriptions. Then, as with ordinary tool use, it determines the best way to use those tools based on each tool's description.
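At the wire level, discovery and invocation are JSON-RPC 2.0 messages using the `tools/list` and `tools/call` methods. The sketch below is a heavily simplified, in-memory stand-in for a server: it skips the transport and the initialization handshake, and the `add` tool, `handle`, and `rpc` are hypothetical names used only for illustration.

```python
import json

# Hypothetical in-memory stand-in for an MCP server; no transport or handshake.
TOOLS = [
    {
        "name": "add",
        "description": "Add two integers.",
        "inputSchema": {
            "type": "object",
            "properties": {"a": {"type": "integer"}, "b": {"type": "integer"}},
            "required": ["a", "b"],
        },
    }
]


def error(request_id, code, message):
    return json.dumps({"jsonrpc": "2.0", "id": request_id,
                       "error": {"code": code, "message": message}})


def handle(request_json: str) -> str:
    """Answer a single JSON-RPC request with a JSON-RPC response."""
    request = json.loads(request_json)
    if request["method"] == "tools/list":
        result = {"tools": TOOLS}
    elif request["method"] == "tools/call":
        params = request["params"]
        if params["name"] != "add":
            return error(request["id"], -32602, "Unknown tool")
        args = params["arguments"]
        result = {"content": [{"type": "text", "text": str(args["a"] + args["b"])}]}
    else:
        return error(request["id"], -32601, "Method not found")
    return json.dumps({"jsonrpc": "2.0", "id": request["id"], "result": result})


def rpc(method, params=None, request_id=1):
    """Client side: build a request, send it, and decode the response."""
    message = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        message["params"] = params
    return json.loads(handle(json.dumps(message)))


listing = rpc("tools/list")
tool_names = [tool["name"] for tool in listing["result"]["tools"]]
answer = rpc("tools/call", {"name": "add", "arguments": {"a": 2, "b": 3}}, request_id=2)
```

In practice an SDK hides these messages behind a call such as list_tools, but the shape of the exchange is the same: list first, then call by name with arguments that match the advertised schema.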

The five functional layers of agents: Persona, Actions & Tools, Reasoning & Planning, Knowledge & Memory, and Evaluation & Feedback.

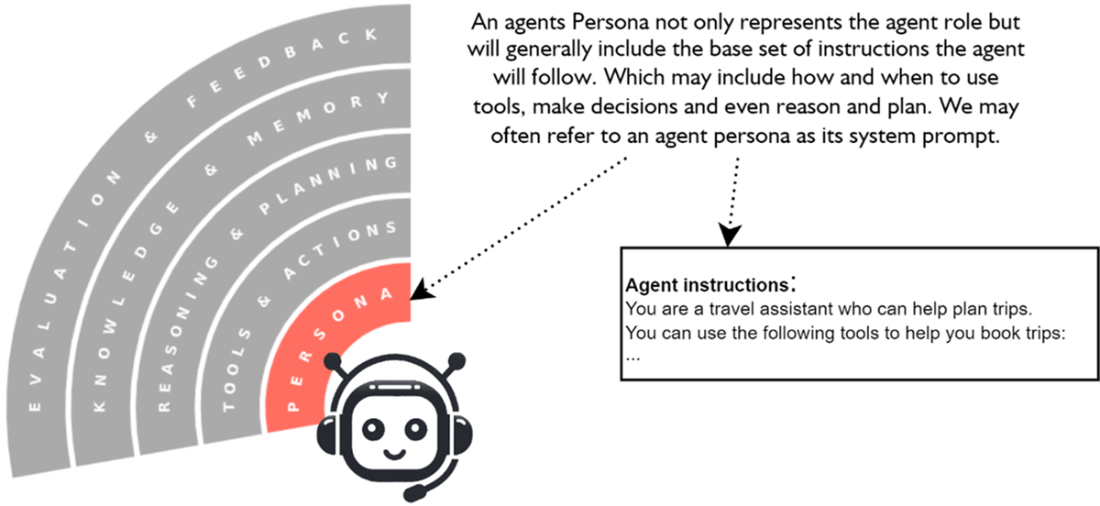

The Persona layer is the core layer of an agent, consisting of the system instructions that define the agent's role and how it should complete goals and tasks. It may also describe how to reason and plan and how to access knowledge and memory.

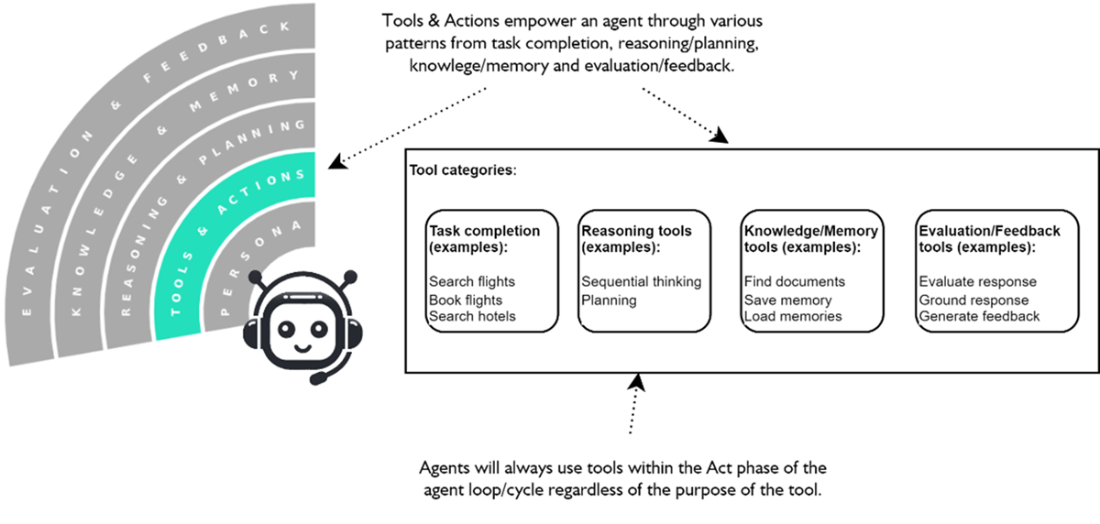

The role of Actions & Tools within the agent, and how tools can also help power the other agent layers. Tools are a core extension of agents, but they are also fundamental to the functions used in the upper agent layers.

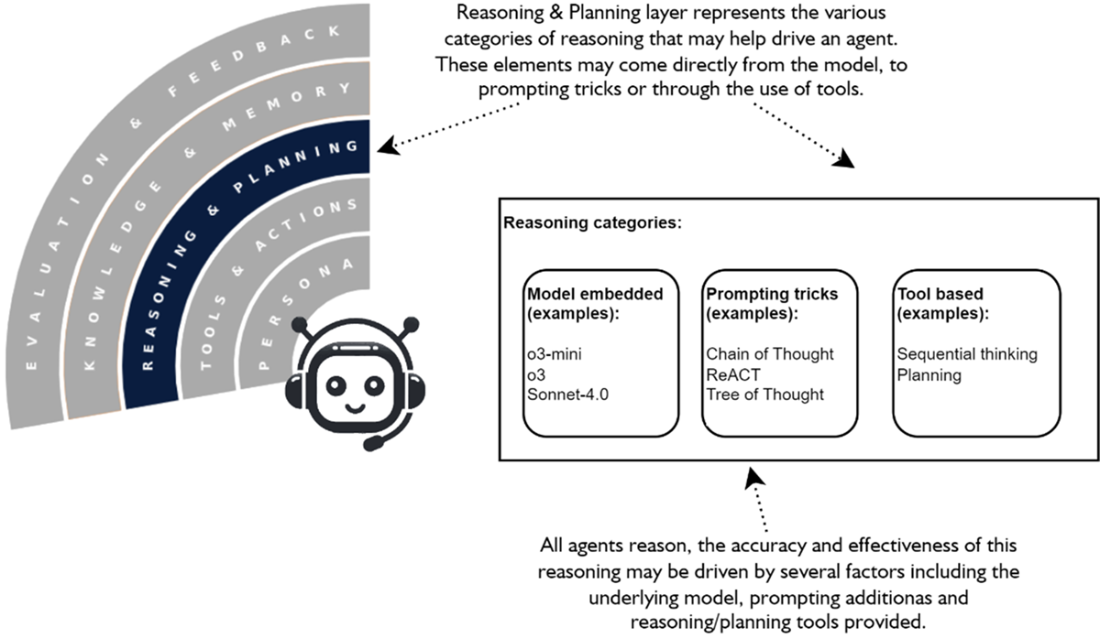

The Reasoning & Planning layer of agents and how agentic thinking may be augmented. Reasoning may take many forms, from the underlying model powering the agent to prompt engineering and even the use of tools.

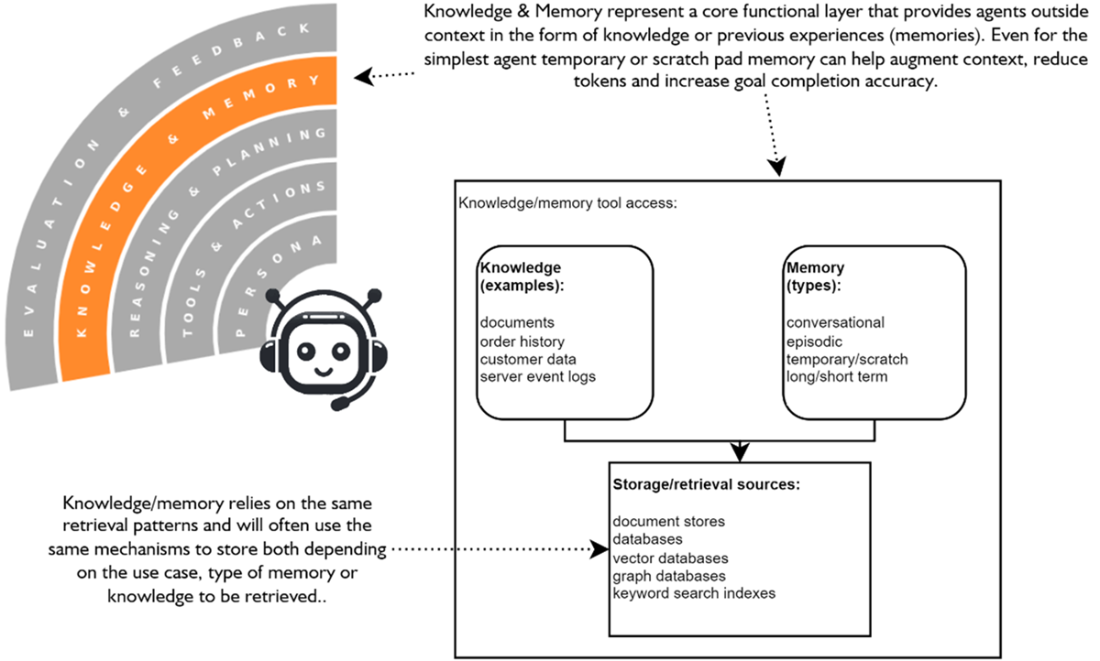

The Knowledge & Memory layer and how both knowledge and memory use the same common forms of storage. Agent knowledge represents information the LLM was not originally trained on but that is added to its context later. Likewise, memories represent past experiences and interactions of the user, the agent, or even other systems.
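The shared-storage idea can be illustrated with a toy store that holds both kinds of entries and retrieves them the same way. This is only a sketch: the `Store` class is hypothetical, and simple word overlap stands in for the embedding similarity a real RAG setup would typically use.

```python
from dataclasses import dataclass, field


@dataclass
class Store:
    """Toy shared store; word overlap stands in for embedding similarity."""
    items: list[tuple[str, str]] = field(default_factory=list)  # (kind, text)

    def add(self, kind: str, text: str) -> None:
        self.items.append((kind, text))

    def retrieve(self, query: str, top_k: int = 2) -> list[tuple[str, str]]:
        query_words = set(query.lower().split())
        scored = [
            (len(query_words & set(text.lower().split())), kind, text)
            for kind, text in self.items
        ]
        # Drop entries with no overlap, then rank by overlap (stable sort).
        scored = [entry for entry in scored if entry[0] > 0]
        scored.sort(key=lambda entry: entry[0], reverse=True)
        return [(kind, text) for _, kind, text in scored[:top_k]]


store = Store()
store.add("knowledge", "The refund policy allows returns within 30 days")
store.add("memory", "User asked about the refund policy last week")
store.add("knowledge", "Shipping takes five business days")

hits = store.retrieve("what is the refund policy")
# Retrieved entries are added to the prompt context before the model answers.
context = "\n".join(f"[{kind}] {text}" for kind, text in hits)
```

Knowledge and memory differ in where the entries come from, not in how they are stored or retrieved, which is why one store can serve both.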

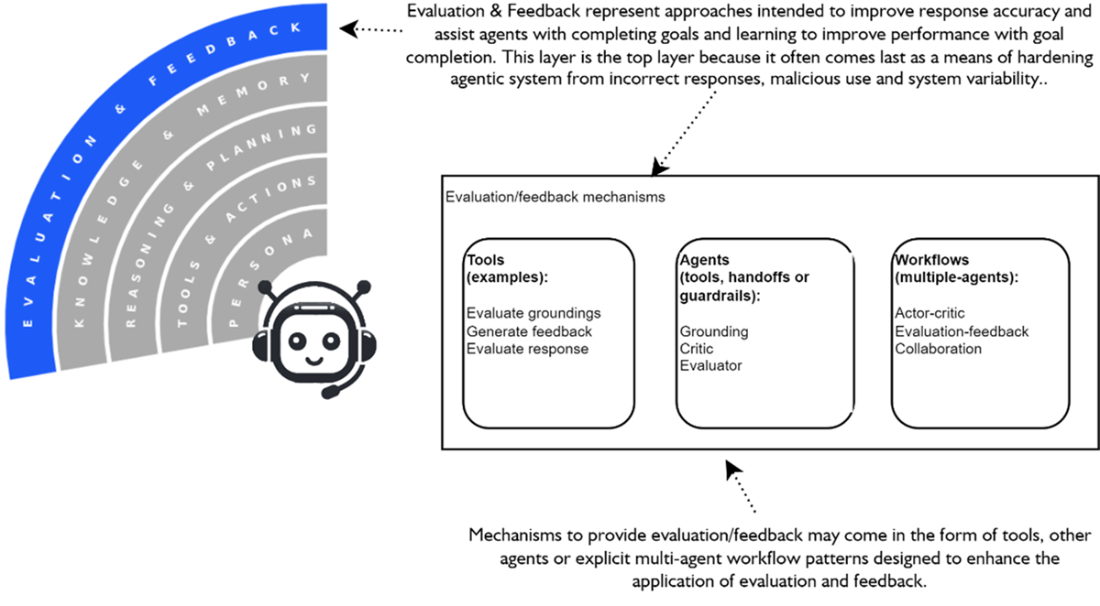

The Evaluation & Feedback layer and the mechanisms used to provide evaluation and feedback. These range from tools that help evaluate tool use and knowledge retrieval (grounding) and provide feedback, to other agents and workflows that provide similar functionality.
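One simple form of this layer is a generate-evaluate-retry loop. The sketch below assumes stub functions (`generate`, `evaluate`, `answer_with_feedback`, all hypothetical) in place of real LLM or evaluator calls, and uses a deliberately trivial check.

```python
from typing import Optional


def generate(question: str, feedback: Optional[str]) -> str:
    """Stub generator standing in for an LLM call."""
    if feedback is None:
        return "Paris"
    return "Paris (source: atlas)"


def evaluate(answer: str) -> Optional[str]:
    """Return feedback if the answer fails the check, or None if it passes."""
    if "source:" not in answer:
        return "cite a source"
    return None


def answer_with_feedback(question: str, max_rounds: int = 3):
    """Regenerate until the evaluator passes the answer or rounds run out."""
    feedback = None
    history = []
    for _ in range(max_rounds):
        answer = generate(question, feedback)
        feedback = evaluate(answer)
        history.append((answer, feedback))
        if feedback is None:
            break
    return answer, history


final, history = answer_with_feedback("What is the capital of France?")
```

The evaluator could equally be another agent or a workflow; the loop only needs it to return either a pass or actionable feedback.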

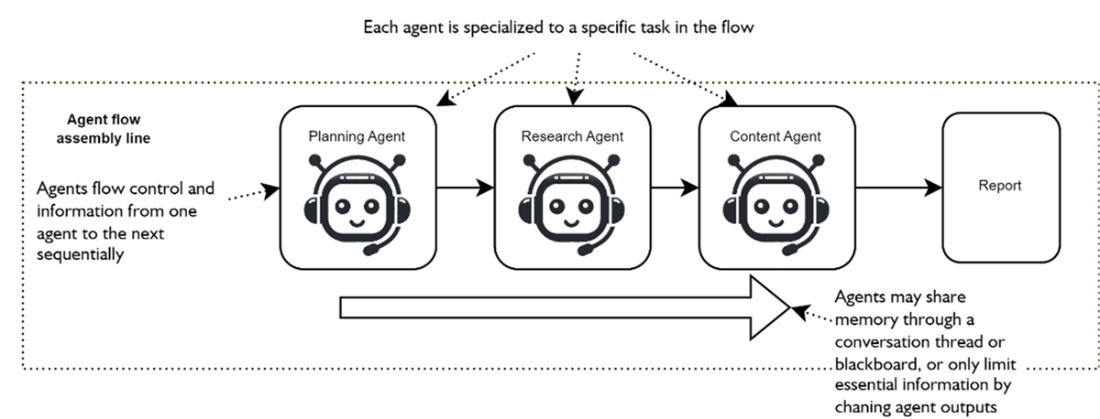

The agent-flow (assembly-line) pattern with multiple agents. The flow starts with a planning agent that breaks the goal down into a high-level plan, which it then passes to the research agent. The research agent executes the research tasks in the plan and, when finished, passes its work to the content agent, which is responsible for completing the later tasks of the plan, such as writing a paper based on the research.
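A minimal sketch of this flow, assuming each agent is a plain function that takes the shared state and returns an extended copy. The three agent functions and `run_flow` are hypothetical stubs; real agents would call an LLM and tools at each stage.

```python
from typing import Callable

# Each "agent" is a function from state to state; stubs stand in for LLM calls.
AgentFn = Callable[[dict], dict]


def planning_agent(state: dict) -> dict:
    plan = [f"research {state['goal']}", "write paper"]
    return {**state, "plan": plan}


def research_agent(state: dict) -> dict:
    return {**state, "notes": f"notes on {state['goal']}"}


def content_agent(state: dict) -> dict:
    return {**state, "paper": f"Paper based on {state['notes']}"}


def run_flow(agents: list[AgentFn], goal: str) -> dict:
    """Pass the state through each agent in a fixed order."""
    state = {"goal": goal}
    for agent in agents:
        state = agent(state)
    return state


final_state = run_flow([planning_agent, research_agent, content_agent], "solar power")
```

Because the order is fixed in the list, the flow is easy to reason about, at the cost of not adapting the sequence to the goal.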

The agent orchestration pattern, often referred to as hub-and-spoke. In this pattern, a central agent acts as the hub, or orchestrator, and delegates tasks to each of the worker agents. Worker agents complete their respective tasks and return the results to the hub, which determines when the goal is complete and outputs the results.
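The hub-and-spoke shape can be sketched as follows. The worker functions, the fixed `plan`, and `orchestrate` are hypothetical stand-ins: a real orchestrator would use an LLM to plan and to decide when the goal is complete.

```python
# Hypothetical workers: each handles one kind of task and returns a result.
workers = {
    "math": lambda task: str(sum(int(n) for n in task.split("+"))),
    "text": lambda task: task.upper(),
}


def plan(goal: str) -> list[tuple[str, str]]:
    """Stub planner: returns (worker, task) pairs; an LLM would decide these."""
    return [("math", "2+3"), ("text", "done")]


def orchestrate(goal: str) -> str:
    """Hub: plan, delegate each task to a worker, collect results, respond."""
    results = []
    for worker_name, task in plan(goal):
        results.append(workers[worker_name](task))
    # All input and output passes through the hub; workers never talk to the user.
    return "; ".join(results)


output = orchestrate("add the numbers and confirm")
```

Workers here play the same role that tools play for a single agent, which is why the pattern reads as tool use scaled up to delegation.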

A team of collaborative agents. The agent collaboration pattern lets agents interact as peers, with back-and-forth communication from one agent to another. In some cases, a manager agent may work as a user proxy and help keep the collaborating agents on track.
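A toy version of peer collaboration: two agents take turns on a shared conversation while a manager caps the number of turns. The `writer`, `critic`, and `manager` functions are hypothetical stubs, and the approval rule is intentionally trivial.

```python
def writer(conversation):
    """Peer 1: drafts, then revises once a critique has been received."""
    critiques = [msg for speaker, msg in conversation if speaker == "critic"]
    if not critiques:
        return "Draft: agents are useful"
    return "Draft: agents are useful because they automate multi-step work"


def critic(conversation):
    """Peer 2: reviews the latest message and either approves or pushes back."""
    last_message = conversation[-1][1]
    return "APPROVE" if "because" in last_message else "Explain why"


def manager(peers, max_turns=6):
    """Keeps the team on track: alternates speakers and stops on approval."""
    conversation = []
    for turn in range(max_turns):
        name, peer = peers[turn % len(peers)]
        message = peer(conversation)
        conversation.append((name, message))
        if message == "APPROVE":
            break
    return conversation


conversation = manager([("writer", writer), ("critic", critic)])
```

Every extra round of critique costs another pair of model calls in a real system, which is where the pattern's higher latency and compute cost come from.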

Summary

- An AI agent has agency, the ability to make decisions, undertake tasks, and act autonomously on behalf of someone or something, powered by large language models connected to tools, memory, and planning capabilities.

- An agent's agency lets it work through an autonomous loop called the Sense-Plan-Act-Learn process.

- Assistants use tools to perform single tasks with user approval, while agents have the agency to reason, plan, and execute multiple tasks independently to achieve higher-level goals.

- LLMs are used in four common patterns: direct user interaction with the LLM, assistant proxy (reformulating requests), assistant (tool use with approval), and autonomous agent (independent planning and execution).

- Agents receive goals, load instructions, reason out plans, identify required tools, execute steps in sequence, and return results, all while making autonomous decisions.

- Agents use actions, that is, tool functions (extensions that wrap API calls, databases, and external resources), to act beyond their code base and interact with external systems.

- Model Context Protocol (MCP), introduced by Anthropic in November 2024, serves as the "USB-C for LLMs," providing a standardized protocol that allows agents to connect to MCP servers, discover available tools, and use them seamlessly without custom integration code.

- MCP addresses inconsistent tool access, unreliable data responses, fragmented integrations, code extensibility limitations, and implementation complexity, and it provides easy-to-build standardized servers.

- AI agent development can be expressed in terms of five functional layers: Persona, Actions & Tools, Reasoning & Planning, Knowledge & Memory, and Evaluation & Feedback.

- The Persona layer represents the core role, personality, and instructions an agent uses to complete goals and tasks.

- The Actions & Tools layer provides the agent with the functionality to interact with and manipulate the external world.

- The Reasoning & Planning layer enhances an agent's ability to reason and plan through complex goals that may require trial-and-error iteration.

- The Knowledge & Memory layer represents external sources of information that can augment the agent’s context with external knowledge or recall past experiences (memories) from previous interactions.

- The Evaluation & Feedback layer represents mechanisms external to the agent that can help improve response accuracy, encourage goal and task learning, and increase confidence in overall agent output.

- Multi-agent systems include patterns such as Agent-flow assembly lines (sequential specialized workers), agent orchestration hub-and-spoke (central coordinator with specialized workers), and agent collaboration teams (agents communicating and working together with defined roles).

- The Agent-Flow pattern is the most straightforward multi-agent implementation: specialized agents work sequentially, like an assembly line, which is ideal for well-defined multi-step tasks with designated roles.

- The Agent Orchestration pattern is a hub-and-spoke model where a primary agent plans and coordinates with specialized worker agents, transforming single-agent tool use into multi-agent delegation.

- The Agent Collaboration pattern represents agents in a team-based approach. Agents communicate with each other, provide feedback and criticism, and can solve complex problems through collective intelligence, though with higher computational costs and latency.

- AI agents represent a fundamental shift from traditional programming to natural language-based interfaces, enabling complex workflow automation from prompt engineering to production-ready agent architecture.

AI Agents in Action, Second Edition ebook for free
