1 Governing Generative AI

Generative AI is transforming everyday work, but its speed, scale, and open-ended behavior create failures that traditional controls can’t reliably prevent or fix. This chapter explains why governance, risk, and compliance (GRC) for GenAI is now a business-critical discipline: incidents are rising, adoption is widespread, and small mistakes can propagate instantly. Because GenAI blends the exploitability of software with the fallibility of humans—and operates probabilistically across unbounded domains—the old “patch later” playbook breaks. The result is a call to move from abstract principles to operational practices that reduce harm without stifling innovation.

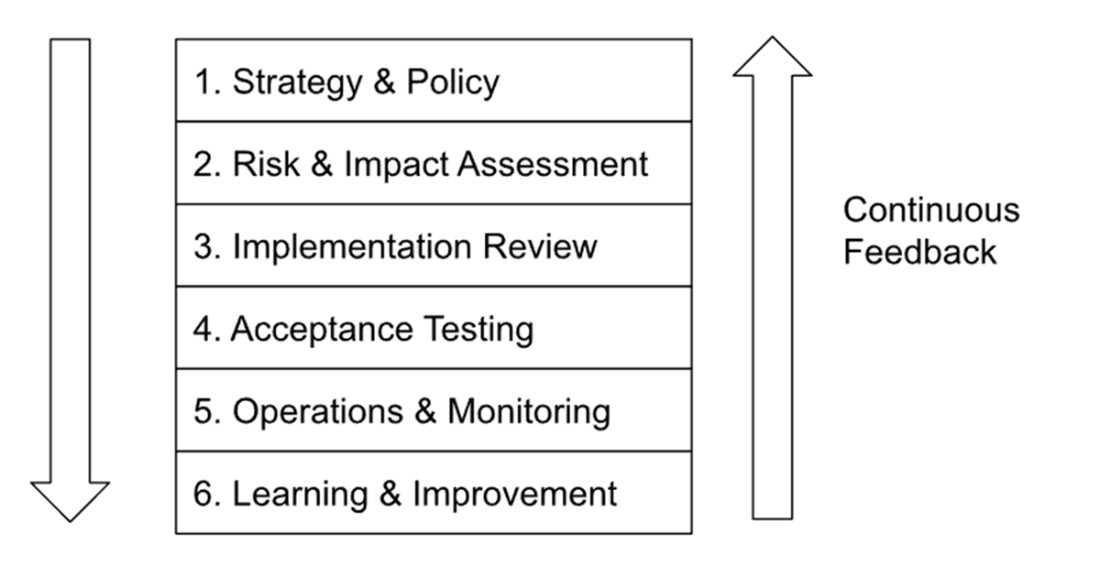

The chapter maps the distinctive risk surface of GenAI—hallucinations, prompt injection and jailbreaks, data poisoning, model extraction, privacy and unlearning challenges, content memorization, and systemic bias—alongside intensifying regulatory and contractual pressures. It proposes a lifecycle, risk-informed governance model (6L-G) that assigns shared responsibilities across the AI supply chain and embeds controls end to end: Strategy & Policy (set principles, ownership, and boundaries), Risk & Impact Assessment (classify obligations and decide go/no-go), Implementation Review (design for security, privacy, transparency), Acceptance Testing (validate safety, robustness, fairness), Operations & Monitoring (guardrails, logging, drift and anomaly detection, incident response), and Learning & Improvement (feedback loops and continuous adaptation). This framework is designed for uncertainty: it favors continuous oversight, clear accountability, and explicit acceptance of residual risk where necessary.
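One way to picture the six levels is as an ordered series of checkpoints that a GenAI system has to clear. The sketch below is illustrative only: the level names come from the paragraph above, but `GateResult`, `furthest_level_reached`, and the strictly sequential gating rule are assumptions made for this example, not the book's specification of 6L-G.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import List, Optional


class Level(IntEnum):
    """The six 6L-G levels, in lifecycle order (names taken from the text)."""
    STRATEGY_AND_POLICY = 1
    RISK_AND_IMPACT_ASSESSMENT = 2
    IMPLEMENTATION_REVIEW = 3
    ACCEPTANCE_TESTING = 4
    OPERATIONS_AND_MONITORING = 5
    LEARNING_AND_IMPROVEMENT = 6


@dataclass(frozen=True)
class GateResult:
    """Hypothetical record of one checkpoint decision."""
    level: Level
    passed: bool
    # Named owner who explicitly accepted the residual risk, if any.
    residual_risk_accepted_by: Optional[str] = None


def furthest_level_reached(results: List[GateResult]) -> Optional[Level]:
    """Walk the levels in order and stop at the first unresolved gate.

    A gate counts as resolved if it passed, or if it failed but a named
    owner explicitly accepted the residual risk (an assumed rule).
    """
    by_level = {r.level: r for r in results}
    reached = None
    for level in Level:
        result = by_level.get(level)
        if result is None:
            break  # checkpoint not yet performed
        if not result.passed and result.residual_risk_accepted_by is None:
            break  # no-go: failed with no accountable risk owner
        reached = level
    return reached
```

For example, a system that passed Strategy & Policy, failed Risk & Impact Assessment with a named owner accepting the residual risk, and then failed Implementation Review with no owner would be reported as having reached `Level.RISK_AND_IMPACT_ASSESSMENT`.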

Pragmatically, the chapter emphasizes proportional, “built-in” governance rather than heavyweight bureaucracy. It previews concrete tools and practices—threat modeling, red teaming, AI firewalls and output filters, bias and robustness testing, lineage and decision logging, drift dashboards, model documentation, and post-incident reviews—showing how to integrate them into existing product and risk routines. Illustrative scenarios demonstrate that governance gaps, not just technical flaws, drive many GenAI failures; conversely, disciplined GRC reduces legal, ethical, and reputational exposure while enabling confident deployment. The takeaway is clear: effective GRC is the operating system for responsible, sustainable GenAI innovation.
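To make "output filters" and "decision logging" slightly more concrete, here is a minimal sketch that redacts email addresses from model output and records each decision. Everything in it is an assumption for illustration: the `OutputFilter` and `FilterDecision` names, the simplified email pattern, and the in-memory log. A production guardrail would cover far more than one pattern and would write to durable, access-controlled storage.

```python
import re
from dataclasses import dataclass, field
from typing import List

# Simplified email pattern, for illustration only; it is not a complete
# or standards-compliant matcher.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


@dataclass(frozen=True)
class FilterDecision:
    """Hypothetical log entry describing what the filter did."""
    action: str   # "allow" or "redact"
    matches: int  # number of email addresses found


@dataclass
class OutputFilter:
    """Redacts email addresses and keeps an in-memory decision log."""
    decisions: List[FilterDecision] = field(default_factory=list)

    def apply(self, model_output: str) -> str:
        # subn returns the rewritten text and the number of substitutions.
        redacted, count = EMAIL_PATTERN.subn("[REDACTED_EMAIL]", model_output)
        action = "redact" if count else "allow"
        self.decisions.append(FilterDecision(action, count))
        return redacted
```

Keeping the decision record alongside the filtered text is the design choice that matters here: it lets a later review establish what the filter saw and did, which is the purpose of decision logging.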

Generative models such as ChatGPT can often produce highly recognizable versions of famous artwork like the Mona Lisa. While this seems harmless, it illustrates the model's capacity to memorize and reconstruct images from its training data, a capability that becomes a serious privacy risk when the training data includes personal photographs.

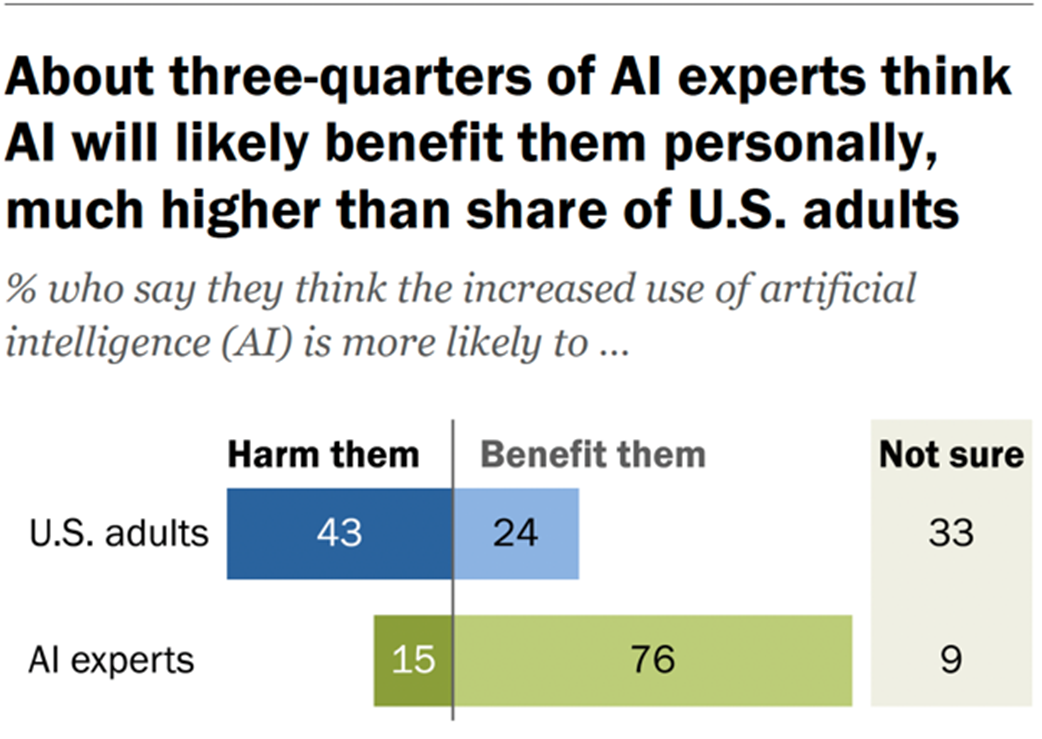

Trust in AI: experts vs general population. Source: Pew Research Center[46].

Classic GRC compared with AI GRC and GenAI GRC

Six Levels of Generative AI Governance. Chapter 2 will expand on the control tasks attached to each checkpoint.

Conclusion: Motivation and Map for What’s Ahead

By now, you should be convinced that governing AI is both critically important and uniquely challenging. AI technologies are advancing faster than the governance surrounding them. There’s an urgency to act: to put frameworks in place before more incidents occur and before regulators force our hand in ways we might not anticipate. But there’s also an opportunity: organizations that get GenAI GRC right will enjoy more sustainable innovation and public trust, turning responsible AI into a strength rather than a checkbox.

In this opening chapter, we reframed GRC for generative AI not as a dry compliance exercise, but as an active, risk-informed, ongoing discipline. We introduced a structured governance model that spans the AI lifecycle and multiple layers of risk, making sure critical issues aren’t missed. We examined real (and realistic) examples of AI pitfalls: from hallucinations and prompt injections to model theft and data deletion dilemmas. We have also provided a teaser of the tools and practices that can address those challenges, giving you a sense that yes, this is manageable with the right approach.

As you proceed through this book, each chapter will use case studies to dive deeper into a specific aspect of AI GRC. We’ll start by establishing a GenAI governance program (Chapter 2), then address individual risk areas such as security and privacy (Chapter 3) and trustworthiness (Chapter 4). We’ll also devote time to regulatory landscapes, helping you stay ahead of laws like the EU AI Act, and to emerging standards (you’ll hear more about ISO 42001, NIST, and others). Along the way, we will keep the tone practical: this is about what you can do, starting now, in your organization or projects, to make AI safer and more reliable.

By the end of this book, you'll be equipped to:

- Clearly understand and anticipate GenAI-related risks.

- Implement structured, proactive governance frameworks.

- Confidently navigate emerging regulatory landscapes.

- Foster innovation within a secure and ethically sound AI governance framework.

Before we move on, take a moment to reflect on your own context. Perhaps you are a product manager eager to deploy AI, thinking about how the concepts here might change your planning. Or you might be an executive worried about AI risks, considering where your organization has gaps in this new form of governance. Maybe you are a compliance professional or lawyer, pondering how a company’s internal GRC efforts could meet or fall short of your expectations. Wherever you stand, the concepts in this book aim to bridge the gap between AI’s promise and its risks, giving you the knowledge to maximize the former and mitigate the latter. By embracing effective AI governance now, you not only mitigate risks but also position your organization to lead responsibly in the AI era.

