1 The world of the Apache Iceberg Lakehouse

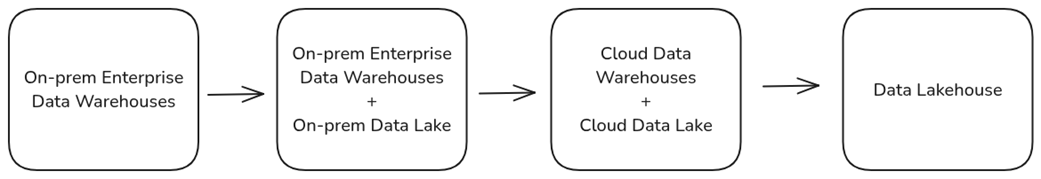

Modern data architecture has evolved through successive waves (OLTP systems, enterprise and cloud data warehouses, and Hadoop-era data lakes), each trying to balance performance, cost, governance, and flexibility. Warehouses delivered fast analytics but were costly and rigid, while lakes offered cheap, scalable storage but struggled with consistency, schema management, and query performance, often devolving into “data swamps.” The lakehouse emerged to combine these strengths: warehouse-like reliability and performance with the openness, interoperability, and cost-efficiency of data lakes. This chapter traces that evolution and explains why the lakehouse paradigm has become the preferred approach for analytics and AI-ready platforms.

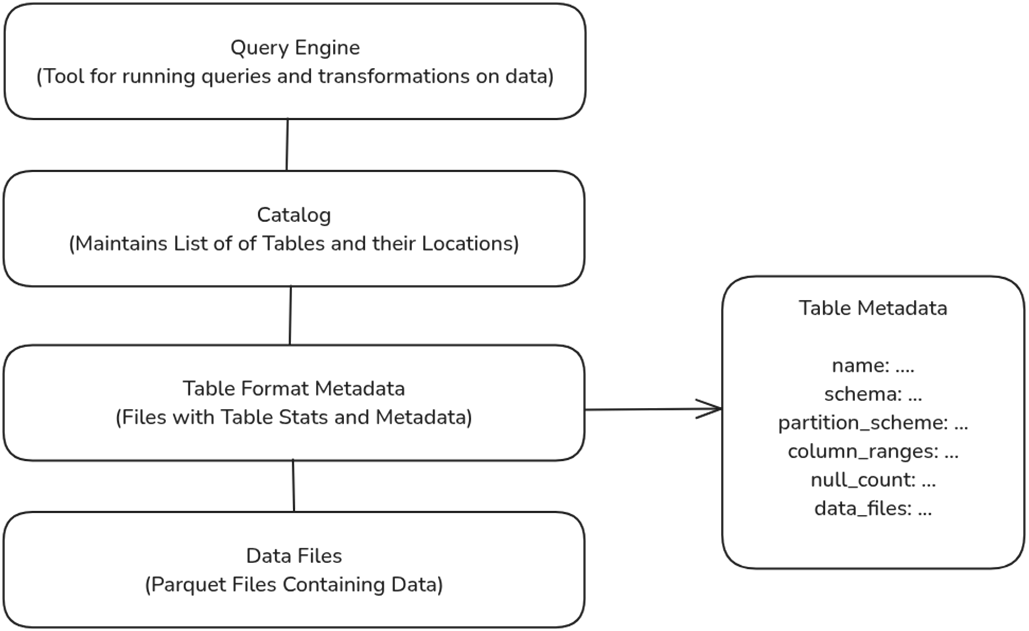

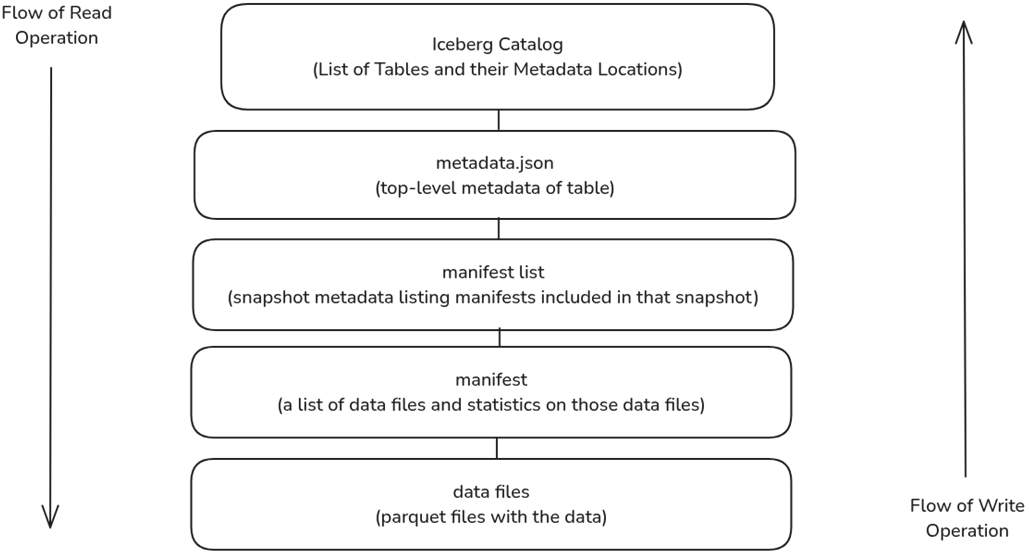

Apache Iceberg is presented as the key enabler of the lakehouse: an open, vendor-agnostic table format that turns files in object storage into reliable, high-performance analytical tables. It introduces a layered metadata model (table metadata files, manifest lists, and manifests) that enables file pruning and fast query planning, supports ACID transactions so that multiple writers can operate safely, and delivers schema and partition evolution, time travel, and hidden partitioning, which prevents accidental full-table scans. By standardizing how datasets are stored and discovered, Iceberg lets multiple engines work on a single canonical copy of the data, reducing ETL and duplication while improving governance and consistency. Compared with alternative table formats such as Delta Lake and Apache Hudi, Iceberg stands out for its flexible partitioning features, its broad and growing ecosystem of integrations, and its community-led governance.
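Hidden partitioning is easiest to see in a toy model. The sketch below is a simplified illustration, not Iceberg's implementation or API: the column `event_ts`, the helpers `day_transform`, `write_file`, and `plan_scan`, and the file names are all hypothetical. It assumes a table partitioned by a day transform of a timestamp column. The writer derives each file's partition value, and a query that filters only on `event_ts` still prunes files because the planner applies the same transform to the filter bounds.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def day_transform(ts: datetime) -> int:
    """Days since the Unix epoch: the idea behind a day-style partition transform."""
    return (ts - EPOCH).days


@dataclass(frozen=True)
class DataFile:
    path: str
    partition_day: int  # derived by the writer; users never supply it


def write_file(path: str, rows: list) -> DataFile:
    """Hypothetical writer: derives the partition value from each row's timestamp."""
    days = {day_transform(row["event_ts"]) for row in rows}
    if len(days) != 1:
        raise ValueError("this sketch keeps one partition value per data file")
    return DataFile(path, days.pop())


def plan_scan(files: list, start: datetime, end: datetime) -> list:
    """Prune files using a filter written only against event_ts.

    The timestamp range is projected onto the day transform. Inclusive bounds
    keep pruning conservative: a file may be scanned needlessly, never skipped wrongly.
    """
    lo, hi = day_transform(start), day_transform(end)
    return [f.path for f in files if lo <= f.partition_day <= hi]


utc = timezone.utc
files = [
    write_file("a.parquet", [{"event_ts": datetime(2024, 1, 1, 8, tzinfo=utc)}]),
    write_file("b.parquet", [{"event_ts": datetime(2024, 1, 2, 9, tzinfo=utc)}]),
    write_file("c.parquet", [{"event_ts": datetime(2024, 1, 5, 10, tzinfo=utc)}]),
]
# The query filters on event_ts, not on a separate partition column.
print(plan_scan(files, datetime(2024, 1, 2, tzinfo=utc), datetime(2024, 1, 3, tzinfo=utc)))
# ['b.parquet']
```

Because users never reference a derived partition column, they cannot forget to filter on it, which is how this design avoids accidental full scans.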

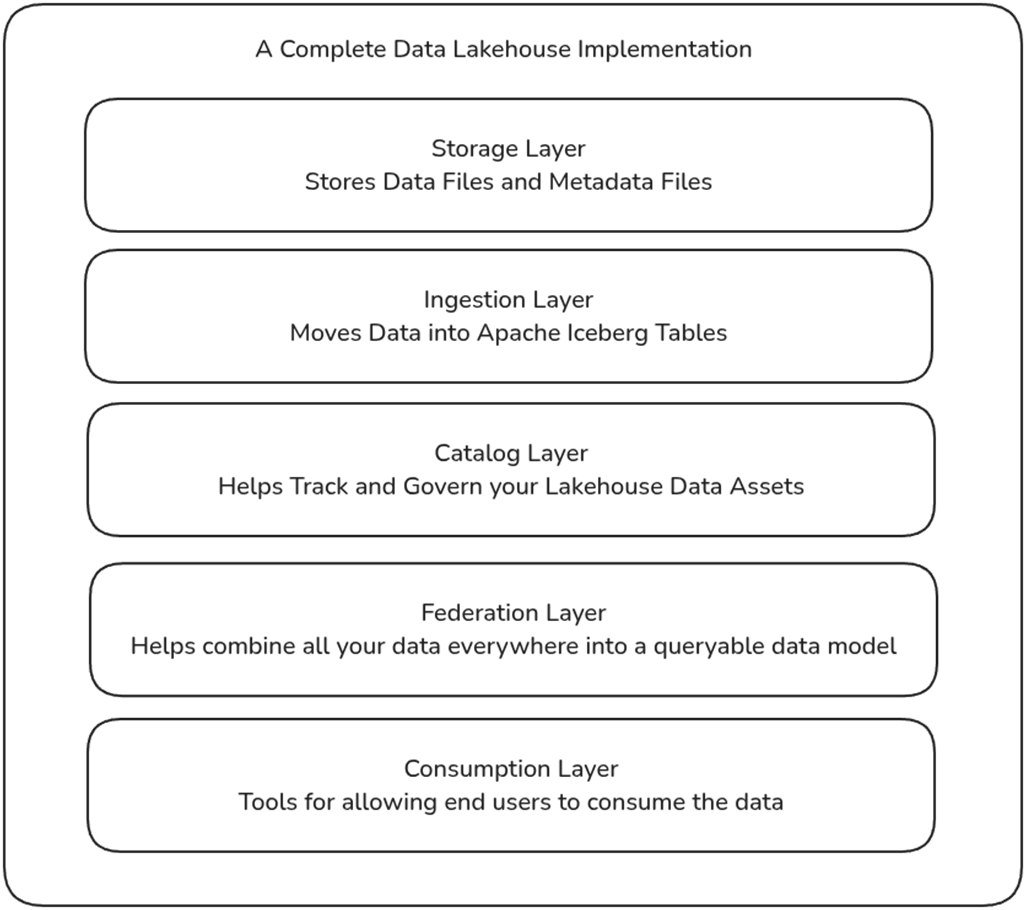

The chapter also outlines the modular components of an Iceberg lakehouse (storage, ingestion, catalog, federation, and consumption) and shows how this design allows independent scaling, better cost control, and reduced vendor lock-in. Storage keeps data and metadata in durable, low-cost object stores; ingestion supports batch and streaming writes into Iceberg tables; catalogs provide the entry point and governance; federation unifies and accelerates access across sources; and the consumption layer powers BI, AI, and applications. Organizations adopt Iceberg lakehouses to consolidate data into an open, interoperable platform that delivers performance and governance without sacrificing flexibility. While successful implementations require thoughtful integration with engines and catalogs, the result is a scalable, cost-efficient, and AI-ready architecture built on a single source of truth.

The evolution of data platforms from on-prem warehouses to data lakehouses.

The role of the table format in data lakehouses.

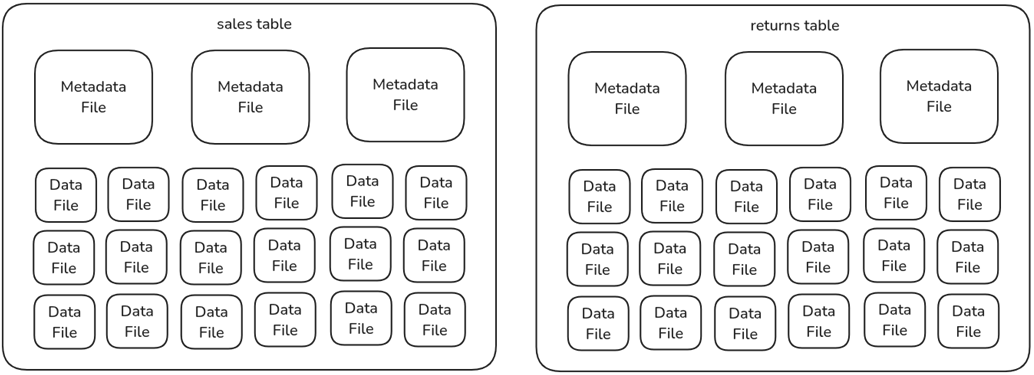

The anatomy of a lakehouse table: metadata files and data files.

The structure of an Apache Iceberg table and the flow of its read and write operations.
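The read and write flow can be approximated in a few lines. This is a minimal single-process sketch, not Iceberg's code: the `Catalog` class, its `compare_and_swap` method, and the in-memory `TableMetadata`, `Snapshot`, and `Manifest` records are hypothetical stand-ins for the catalog pointer, the table metadata file, the manifest list, and manifest files. Readers follow the pointer down to data files; writers build a new snapshot and commit it only if the pointer still references the metadata they started from, retrying otherwise. This models, in simplified form, the optimistic concurrency that lets multiple writers commit safely.

```python
import itertools
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Manifest:
    data_files: Tuple[str, ...]  # paths of data files tracked by this manifest


@dataclass(frozen=True)
class Snapshot:
    snapshot_id: int
    manifests: Tuple[Manifest, ...]  # stands in for the snapshot's manifest list


@dataclass(frozen=True)
class TableMetadata:
    version: int
    snapshots: Tuple[Snapshot, ...]
    current_snapshot_id: Optional[int]


class Catalog:
    """Hypothetical catalog holding the pointer to the current metadata version."""

    def __init__(self, metadata: TableMetadata):
        self._current = metadata

    def load(self) -> TableMetadata:
        return self._current

    def compare_and_swap(self, expected: TableMetadata, new: TableMetadata) -> bool:
        # A real catalog performs this check-and-set atomically; this
        # single-threaded sketch only models the check.
        if self._current is not expected:
            return False
        self._current = new
        return True


_snapshot_ids = itertools.count(1)


def current_snapshot(meta: TableMetadata) -> Optional[Snapshot]:
    return next((s for s in meta.snapshots if s.snapshot_id == meta.current_snapshot_id), None)


def append(catalog: Catalog, new_files, max_retries: int = 3) -> TableMetadata:
    """Write path: build a new snapshot on top of the current one, then commit."""
    for _ in range(max_retries):
        base = catalog.load()
        parent = current_snapshot(base)
        # Reuse the parent's manifests and add one manifest for the new files.
        inherited = parent.manifests if parent is not None else ()
        snap = Snapshot(next(_snapshot_ids), inherited + (Manifest(tuple(new_files)),))
        new_meta = TableMetadata(base.version + 1, base.snapshots + (snap,), snap.snapshot_id)
        if catalog.compare_and_swap(base, new_meta):
            return new_meta
        # Another writer committed first: retry against the new base.
    raise RuntimeError("commit failed after retries")


def plan_files(catalog: Catalog) -> list:
    """Read path: catalog -> metadata -> current snapshot -> manifests -> data files."""
    snap = current_snapshot(catalog.load())
    if snap is None:
        return []
    return [path for m in snap.manifests for path in m.data_files]


catalog = Catalog(TableMetadata(version=0, snapshots=(), current_snapshot_id=None))
append(catalog, ["a.parquet"])
append(catalog, ["b.parquet"])
print(plan_files(catalog))  # ['a.parquet', 'b.parquet']
```

Each commit adds a snapshot rather than overwriting files in place, so a reader that loaded the previous metadata keeps a consistent view while the writer works.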

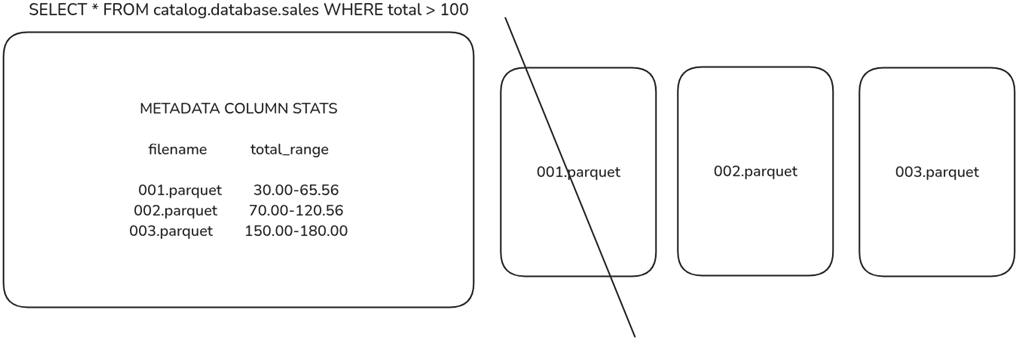

Engines use metadata statistics to skip data files that cannot contain matching rows, speeding up queries.
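Iceberg manifests record per-column lower and upper bounds for each data file, and engines compare query predicates against those bounds during planning. The sketch below is a simplified model of that idea, not an engine's actual planner: `FileStats`, `might_match`, `prune`, the `amount` column, and the supported operators are hypothetical. A file is skipped only when its bounds prove that no row can match; a file without statistics is always scanned.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class FileStats:
    path: str
    lower: Dict[str, Any] = field(default_factory=dict)  # column -> min value in file
    upper: Dict[str, Any] = field(default_factory=dict)  # column -> max value in file


def might_match(stats: FileStats, column: str, op: str, value: Any) -> bool:
    """Return False only when the bounds prove no row in the file can match."""
    lo, hi = stats.lower.get(column), stats.upper.get(column)
    if lo is None or hi is None:
        return True  # no statistics: the file must be scanned
    if op == "=":
        return lo <= value <= hi
    if op == ">":
        return hi > value
    if op == "<":
        return lo < value
    raise ValueError(f"unsupported operator: {op}")


def prune(files: List[FileStats], column: str, op: str, value: Any) -> List[str]:
    return [f.path for f in files if might_match(f, column, op, value)]


files = [
    FileStats("f1.parquet", {"amount": 10}, {"amount": 200}),
    FileStats("f2.parquet", {"amount": 150}, {"amount": 900}),
    FileStats("f3.parquet", {"amount": 1000}, {"amount": 5000}),
    FileStats("f4.parquet"),  # written without column statistics
]
# WHERE amount > 500: f1's maximum is 200, so it is skipped.
print(prune(files, "amount", ">", 500))
# ['f2.parquet', 'f3.parquet', 'f4.parquet']
```

Pruning is conservative by design: statistics can only rule files out, so a missing or loose bound costs extra I/O but never produces wrong results.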

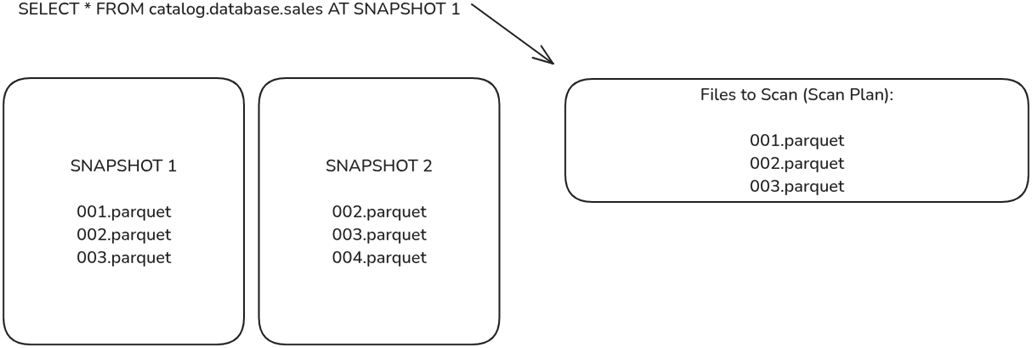

Engines can scan older snapshots, each of which references its own list of data files, so queries can read earlier versions of the data.
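Time travel follows from the snapshot model: each snapshot lists the files that made up the table at commit time, so reading an old version means planning against an old snapshot. The sketch below is an illustrative model under that assumption, not Iceberg's API: `Snapshot`, `files_for_snapshot`, `files_as_of`, and the millisecond timestamps are hypothetical, and the history is assumed to be sorted by commit time. In a real table, files referenced only by old snapshots stay in object storage until those snapshots are expired.

```python
from bisect import bisect_right
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Snapshot:
    snapshot_id: int
    committed_at_ms: int          # commit time, milliseconds since epoch
    data_files: Tuple[str, ...]   # files visible in this version of the table


def files_for_snapshot(history: List[Snapshot], snapshot_id: int) -> List[str]:
    """Read a specific version by snapshot ID."""
    for snap in history:
        if snap.snapshot_id == snapshot_id:
            return list(snap.data_files)
    raise KeyError(f"unknown snapshot {snapshot_id}")


def files_as_of(history: List[Snapshot], ts_ms: int) -> List[str]:
    """Read the version that was current at ts_ms (history sorted by commit time)."""
    commit_times = [snap.committed_at_ms for snap in history]
    i = bisect_right(commit_times, ts_ms)  # snapshots committed at or before ts_ms
    if i == 0:
        raise ValueError("the table had no snapshot at that time")
    return list(history[i - 1].data_files)


history = [
    Snapshot(1, 1_000, ("a.parquet",)),
    Snapshot(2, 2_000, ("a.parquet", "b.parquet")),  # append
    Snapshot(3, 3_000, ("b.parquet", "c.parquet")),  # an update replaced a.parquet with c.parquet
]
print(files_as_of(history, 2_500))     # ['a.parquet', 'b.parquet']
print(files_for_snapshot(history, 1))  # ['a.parquet']
```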

The components of a complete data lakehouse implementation.

Summary

- Data lakehouse architecture combines the scalability and cost-efficiency of data lakes with the performance, ease of use, and structure of data warehouses, solving key challenges in governance, query performance, and cost management.

- Apache Iceberg is a modern table format that enables high-performance analytics, schema evolution, ACID transactions, and metadata scalability. It transforms data lakes into structured, mutable, governed storage platforms.

- Iceberg addresses significant pain points of OLTP databases, enterprise data warehouses, and Hadoop-based data lakes, including high costs, rigid schemas, slow queries, and inconsistent data governance.

- With features like time travel, partition evolution, and hidden partitioning, Iceberg helps reduce storage costs, simplifies ETL, and optimizes compute resources, making data analytics more efficient.

- Iceberg integrates with query engines (Trino, Dremio, Snowflake), processing frameworks (Spark, Flink), and open lakehouse catalogs (Nessie, Polaris, Gravitino), enabling modular, vendor-agnostic architectures.

- The Apache Iceberg Lakehouse has five key components: storage, ingestion, catalog, federation, and consumption.

Architecting an Apache Iceberg Lakehouse ebook for free
