1 What are LLM Agents and Multi-Agent Systems?

Large language models can propose plans in natural language but cannot execute them on their own. This chapter introduces LLM agents—systems that translate an LLM’s intentions into concrete actions by orchestrating tools—and explains why this extra layer is necessary. It also introduces multi-agent systems (MAS), where multiple specialized LLM agents collaborate on different parts of a task, and sets expectations for a hands-on journey toward understanding, building, and evaluating these systems from the ground up.

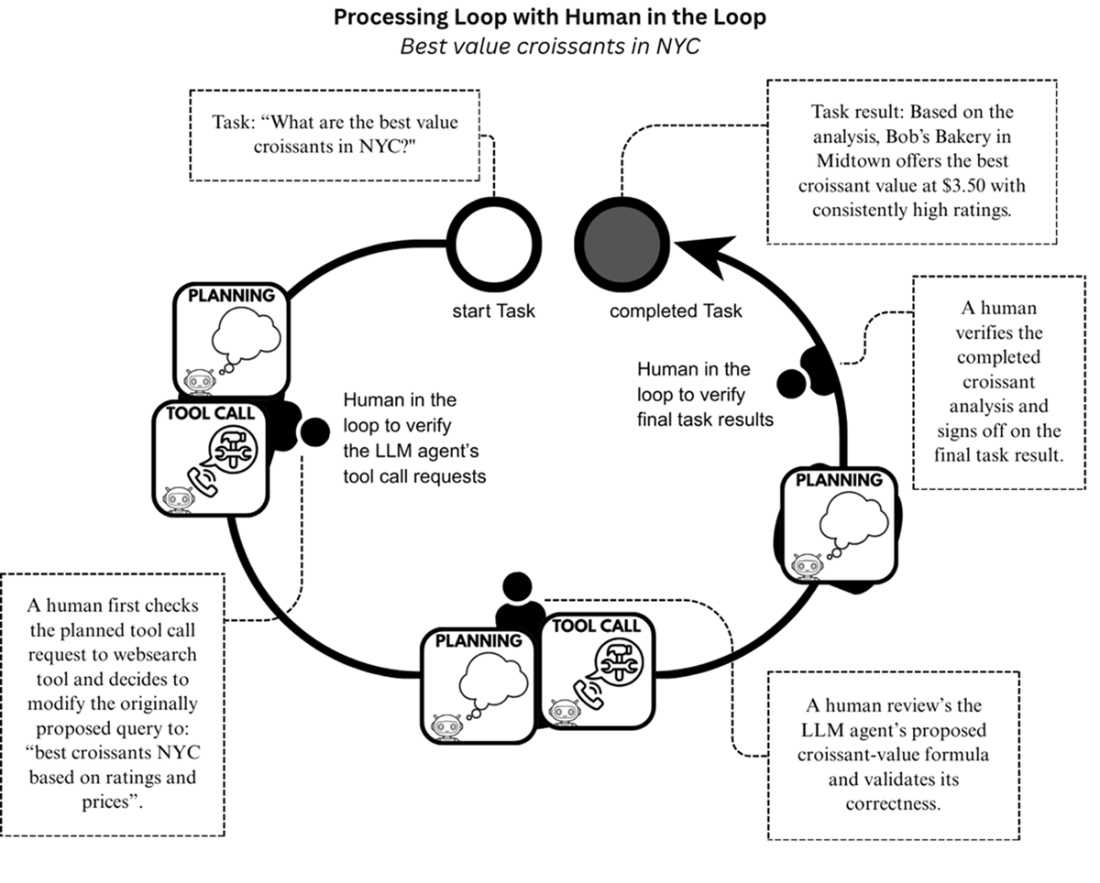

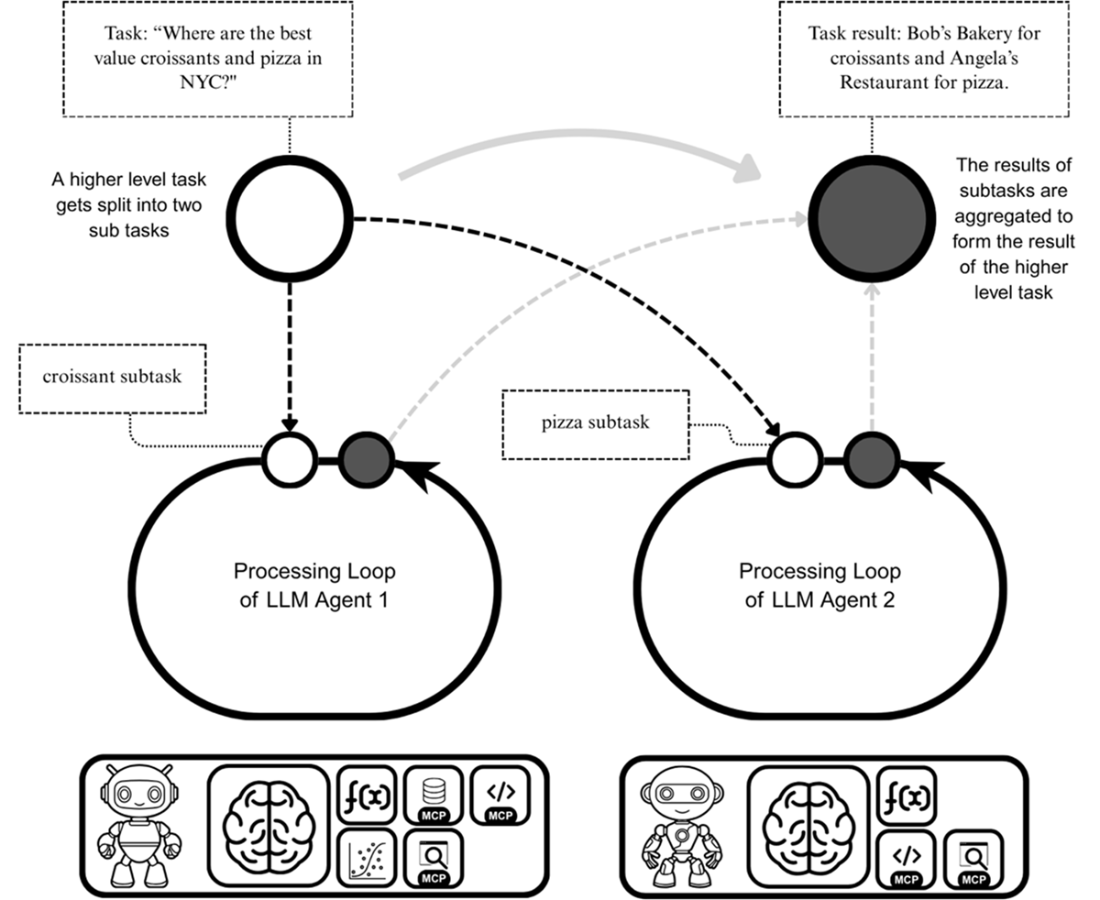

The chapter explains how agents work by leveraging two prerequisite LLM capabilities: planning and tool-calling. Within an iterative processing loop, an agent formulates and adapts plans, issues structured tool calls, ingests results, and progresses through sub-steps until completion or a stopping condition. Enhancements that improve reliability and performance include memory modules (to reuse past results and trajectories) and human-in-the-loop checkpoints (to review or correct critical steps). The chapter also highlights emerging standards: the Model Context Protocol (MCP) for integrating third‑party tools and resources, and the Agent2Agent (A2A) protocol for inter-agent collaboration, enabling heterogeneous agents to coordinate effectively.
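To make the MCP idea concrete, here is a minimal sketch of the messages an MCP client works with, based on our reading of the MCP specification. A server advertises each tool with a `name`, a `description`, and a JSON Schema (`inputSchema`) for its inputs, and a client invokes a tool with a JSON-RPC 2.0 `tools/call` request. The `get_weather` tool and the `make_tools_call` helper are hypothetical. The sketch only builds messages and leaves out transport and the server side.

```python
import json

# Shape of a tool definition as an MCP server advertises it in a "tools/list"
# result: a name, a description, and a JSON Schema ("inputSchema") for its inputs.
# The get_weather tool is hypothetical.
weather_tool = {
    "name": "get_weather",
    "description": "Get the current weather for a city.",
    "inputSchema": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}


def make_tools_call(request_id: int, tool: dict, arguments: dict) -> str:
    """Build the JSON-RPC 2.0 "tools/call" request an MCP client sends to invoke a tool."""
    # Light client-side check: every required input from the schema must be present.
    missing = [k for k in tool["inputSchema"].get("required", []) if k not in arguments]
    if missing:
        raise ValueError(f"missing required arguments: {missing}")
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool["name"], "arguments": arguments},
    }
    return json.dumps(message)


request = make_tools_call(1, weather_tool, {"city": "Toronto"})
print(request)
```

The server answers with a result whose `content` is a list of items such as `{"type": "text", "text": "..."}`. Because this shape is standardized, an agent can use tools from any compliant third-party server without custom glue code.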

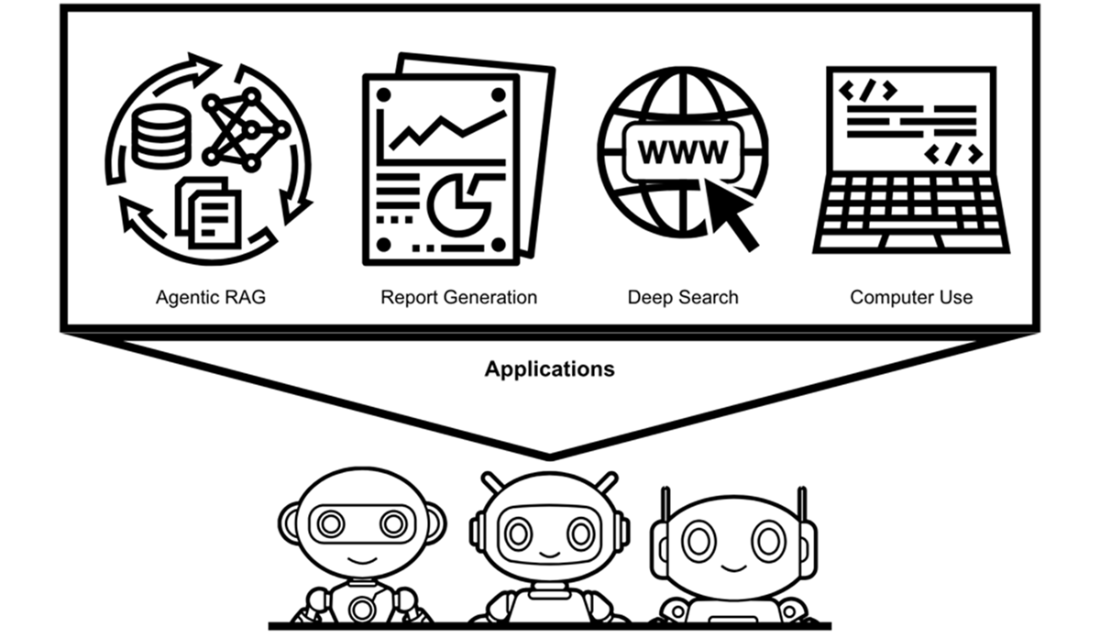

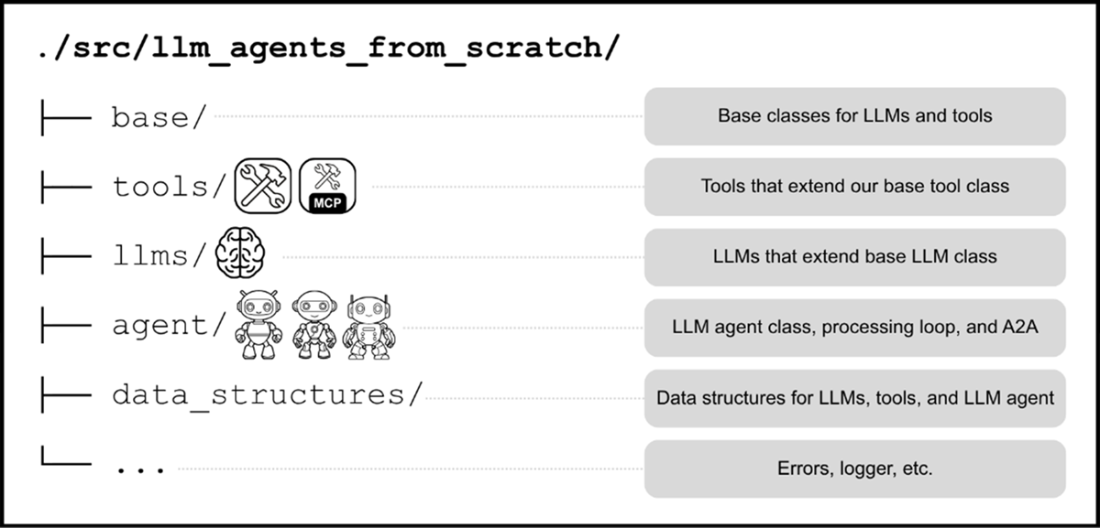

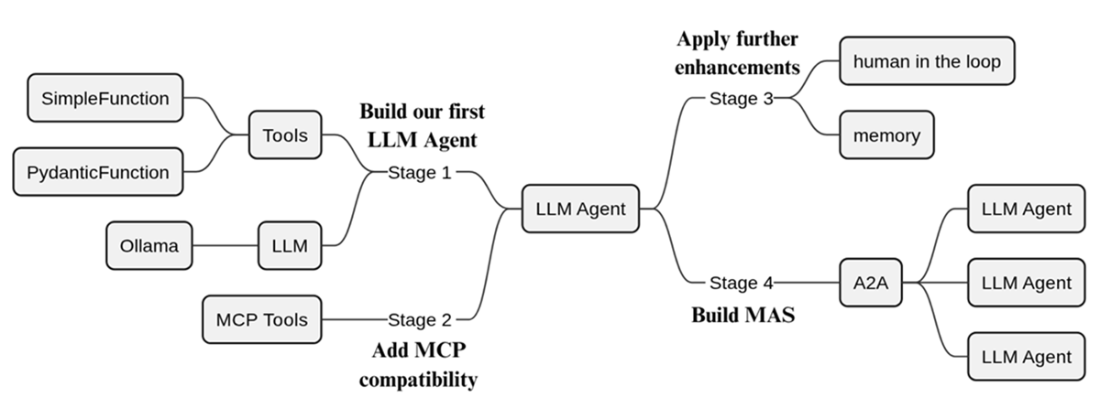

Practical applications span report generation, web and deep research, agentic RAG over private knowledge, coding support, and computer-use automation—often benefiting from MAS when complex tasks can be decomposed into focused subtasks. Finally, the chapter outlines the book’s roadmap for building a complete framework from scratch: start with core interfaces for tools and LLMs and an agent with a processing loop; add MCP compatibility; incorporate memory and human-in-the-loop patterns; and conclude with A2A-based multi-agent coordination and capstone projects that demonstrate end-to-end agentic systems in realistic workflows.

The applications for LLM agents are many, including agentic RAG, report generation, deep research, and computer use, all of which can benefit from MAS.

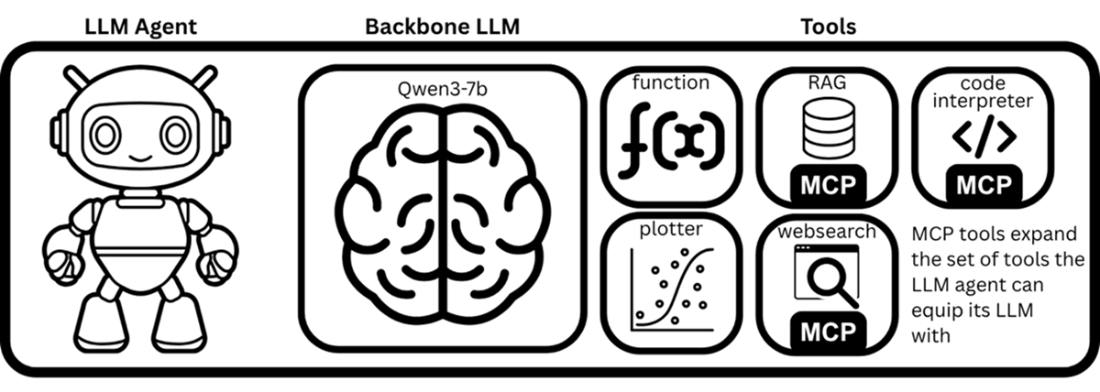

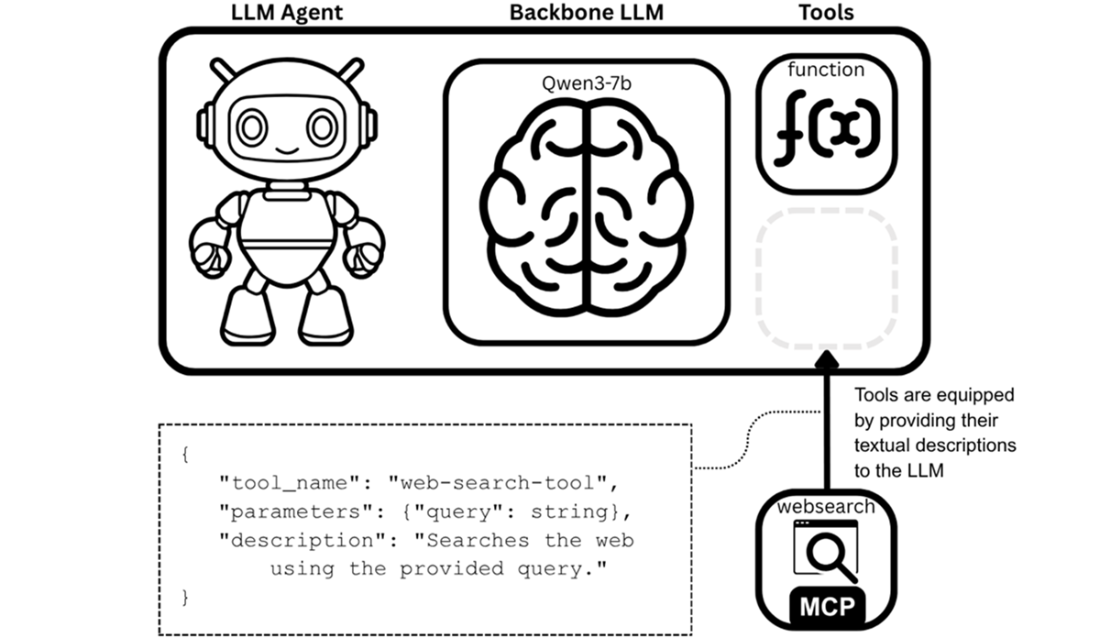

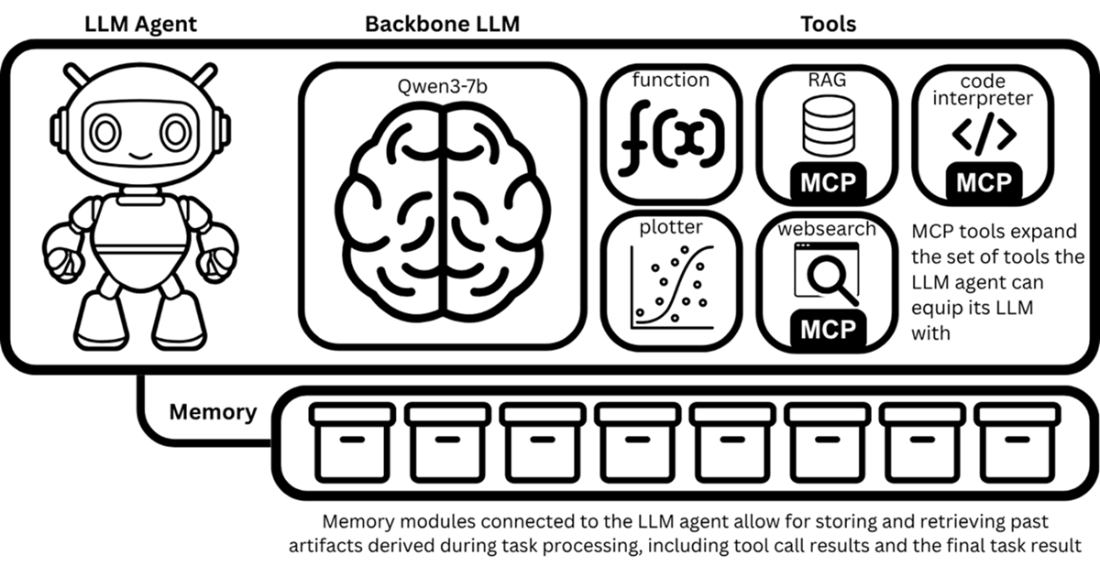

An LLM agent consists of a backbone LLM and the tools it is equipped with.

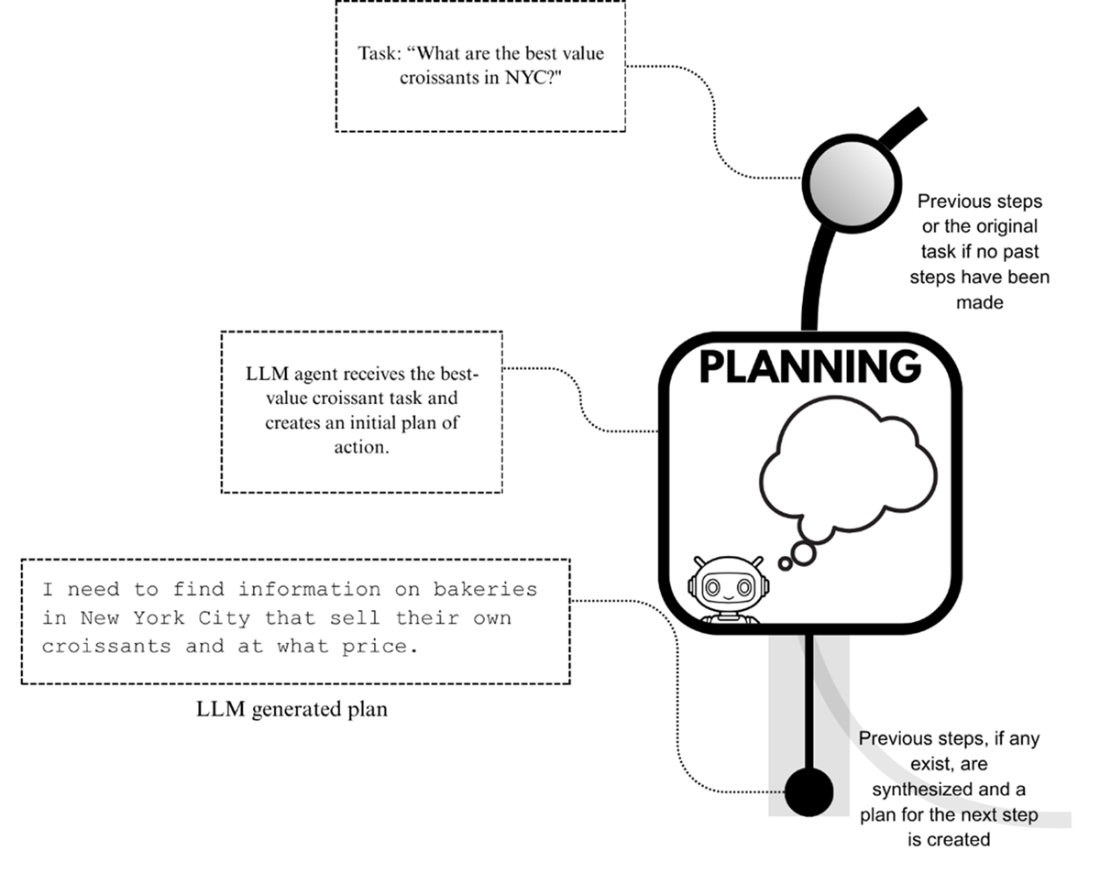

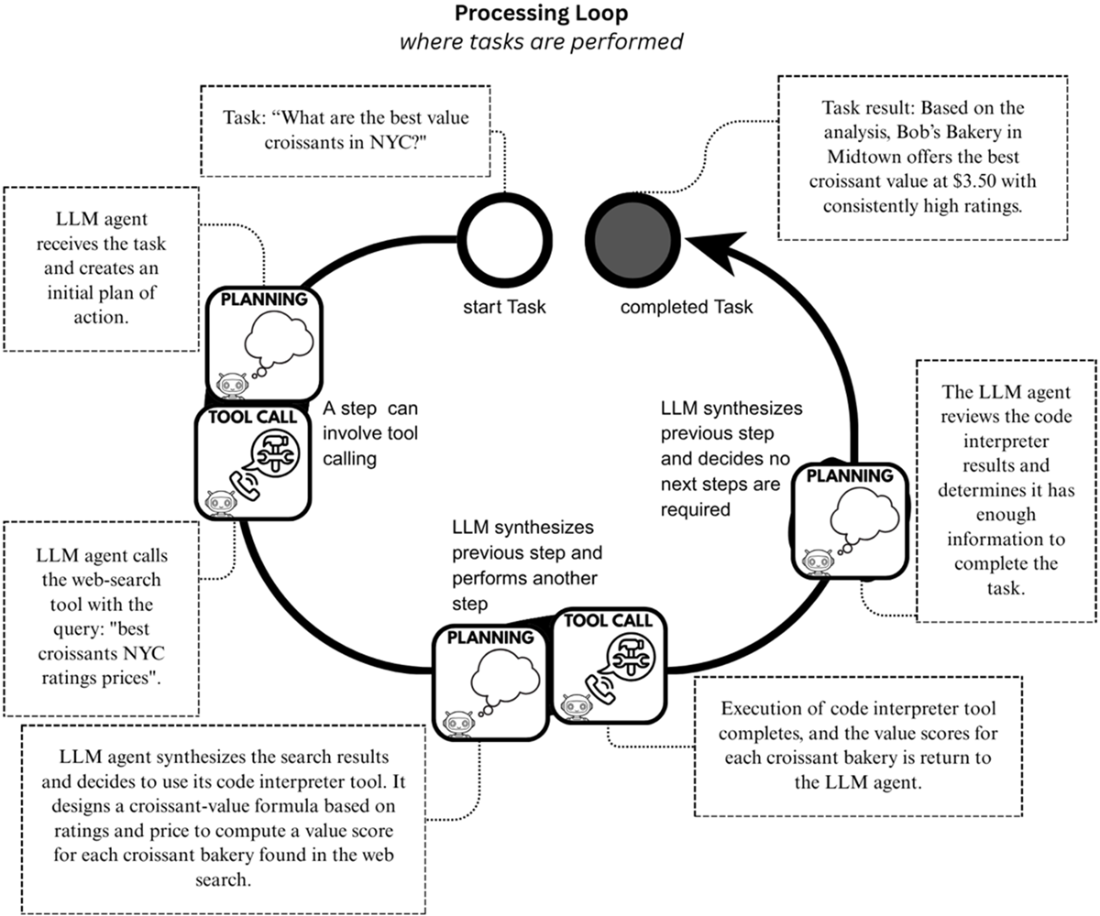

LLM agents utilize the planning capability of backbone LLMs to formulate initial plans for tasks, as well as to adapt current plans based on the results of past steps or actions taken towards task completion.
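The two uses of planning described above, formulating an initial plan and adapting it after results come in, can be sketched as two prompts to the backbone LLM. This is a minimal illustration under stated assumptions. The LLM is modeled as a plain `prompt -> text` callable, plans are assumed to come back as numbered lists, and `Plan`, `parse_steps`, `make_plan`, `adapt_plan`, and `fake_llm` are all hypothetical names. `fake_llm` is a scripted stand-in, not a real model.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical stand-in type for a backbone LLM: takes a prompt, returns text.
LLM = Callable[[str], str]


@dataclass
class Plan:
    steps: list[str] = field(default_factory=list)


def parse_steps(text: str) -> list[str]:
    """Turn a numbered plan such as '1. foo\\n2. bar' into ['foo', 'bar']."""
    steps = []
    for line in text.splitlines():
        line = line.strip()
        if line and line[0].isdigit() and "." in line:
            steps.append(line.split(".", 1)[1].strip())
    return steps


def make_plan(llm: LLM, task: str) -> Plan:
    # Formulate an initial plan for the task.
    return Plan(parse_steps(llm(f"Write a numbered plan for: {task}")))


def adapt_plan(llm: LLM, task: str, plan: Plan, results: list[str]) -> Plan:
    # Revise the plan in light of the results of past steps.
    prompt = (
        f"Task: {task}\nCurrent plan: {plan.steps}\n"
        f"Results so far: {results}\nWrite a revised numbered plan."
    )
    return Plan(parse_steps(llm(prompt)))


def fake_llm(prompt: str) -> str:
    # Scripted responses standing in for a real backbone LLM.
    if "revised" in prompt:
        return "1. Retry search with a narrower query\n2. Summarize findings"
    return "1. Search the web\n2. Summarize findings"


initial = make_plan(fake_llm, "Report on LLM agents")
print(initial.steps)
revised = adapt_plan(fake_llm, "Report on LLM agents", initial, ["search returned no results"])
print(revised.steps)
```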

An illustration of the tool-equipping process, in which a textual description of the tool, containing its name, description, and parameters, is provided to the LLM agent.
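One simple way to picture tool equipping is to render each tool's name, description, and parameters as text and append it to the prompt the backbone LLM sees. The sketch below assumes that approach. `ToolDescription`, `equip_tools`, and the `add` tool are hypothetical and are not the framework's actual interfaces. Many LLM APIs instead accept tool descriptions as structured JSON, but the information conveyed is the same.

```python
import json
from dataclasses import dataclass, field


@dataclass
class ToolDescription:
    name: str
    description: str
    # JSON-Schema-style parameter spec: parameter name -> {"type": ...}
    parameters: dict = field(default_factory=dict)

    def to_prompt(self) -> str:
        """Render the description as text the backbone LLM can read."""
        params = json.dumps(self.parameters, indent=2)
        return f"Tool: {self.name}\nDescription: {self.description}\nParameters:\n{params}"


def equip_tools(system_prompt: str, tools: list[ToolDescription]) -> str:
    # Here "equipping" simply means appending each tool's description to the prompt.
    sections = [tool.to_prompt() for tool in tools]
    return system_prompt + "\n\nYou can use these tools:\n\n" + "\n\n".join(sections)


add_tool = ToolDescription(
    name="add",
    description="Add two integers.",
    parameters={"a": {"type": "integer"}, "b": {"type": "integer"}},
)
print(equip_tools("You are a helpful assistant.", [add_tool]))
```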

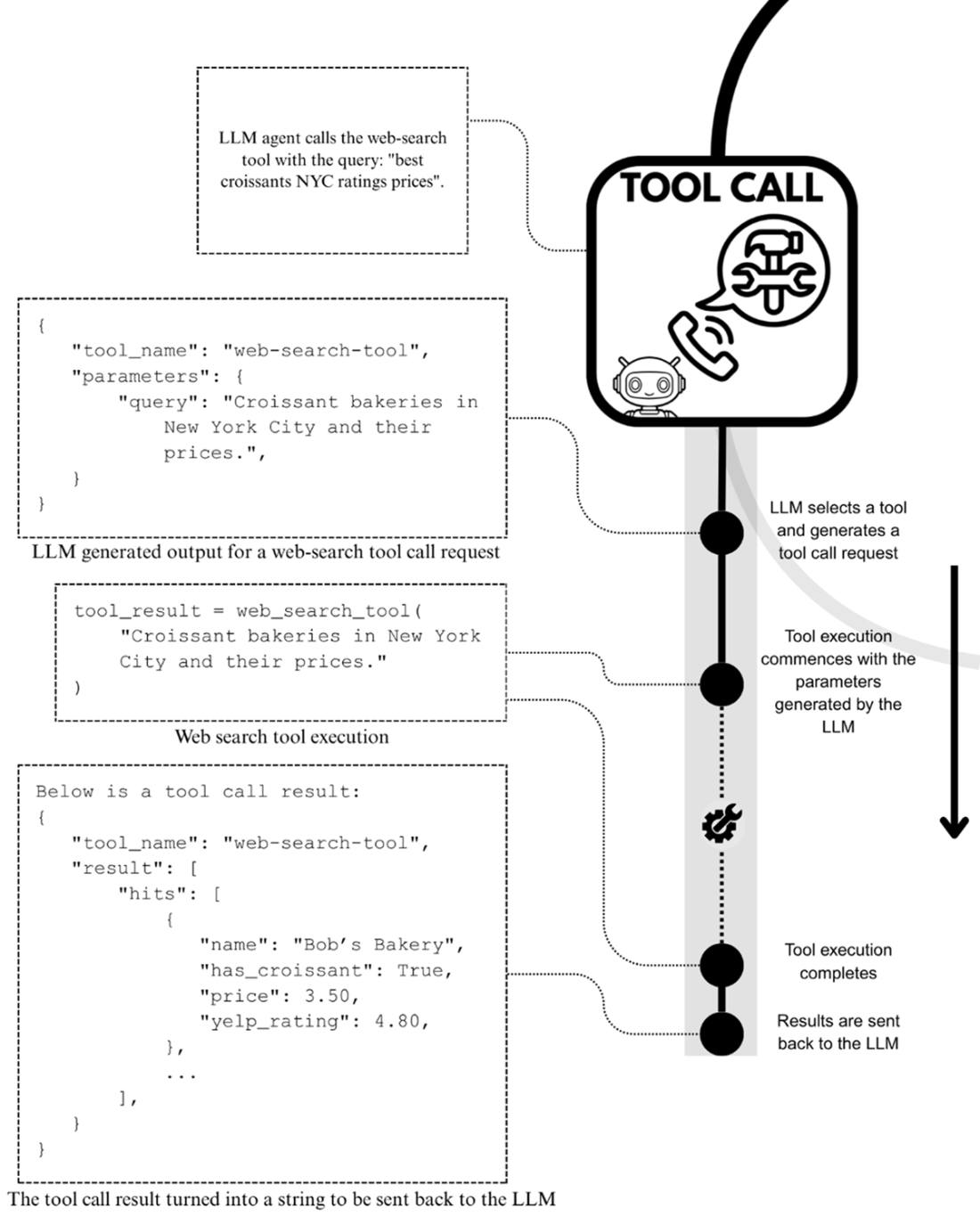

The tool-calling process, in which the LLM agent can call any of its equipped tools.
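Because the LLM can only express a tool call as text, the agent has to parse that text and run the matching tool. Here is a minimal sketch, assuming the LLM emits a JSON object of the form `{"tool": ..., "arguments": {...}}`. That format, the `TOOLS` registry, and `execute_tool_call` are illustrative assumptions. Errors are returned as data rather than raised, so the agent can show them to the LLM.

```python
import json
from typing import Any, Callable

# Registry of equipped tools: tool name -> Python callable (hypothetical tools).
TOOLS: dict[str, Callable[..., Any]] = {
    "add": lambda a, b: a + b,
    "upper": lambda text: text.upper(),
}


def execute_tool_call(llm_output: str) -> dict:
    """Parse a JSON tool call emitted by the LLM and run the matching tool."""
    call = json.loads(llm_output)  # e.g. {"tool": "add", "arguments": {"a": 2, "b": 3}}
    name, args = call["tool"], call.get("arguments", {})
    if name not in TOOLS:
        return {"tool": name, "error": f"unknown tool: {name}"}
    try:
        return {"tool": name, "content": TOOLS[name](**args)}
    except TypeError as exc:  # wrong or missing arguments
        return {"tool": name, "error": str(exc)}


print(execute_tool_call('{"tool": "add", "arguments": {"a": 2, "b": 3}}'))
# {'tool': 'add', 'content': 5}
print(execute_tool_call('{"tool": "search", "arguments": {}}'))
# {'tool': 'search', 'error': 'unknown tool: search'}
```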

A mental model of an LLM agent performing a task through its processing loop, where tool calling and planning are used repeatedly. The task is executed through a series of sub-steps, a typical approach for performing tasks.
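The processing loop in this mental model can be sketched in a few lines. On each iteration the LLM either requests a tool call or declares a final answer. Tool results are appended to the conversation, and a step limit acts as the stopping condition. The JSON reply format, `run_agent`, and `scripted_llm` are hypothetical. `scripted_llm` is a hard-coded stand-in for a real backbone LLM.

```python
import json
from typing import Callable

TOOLS = {"add": lambda a, b: a + b}  # hypothetical equipped tool


def run_agent(llm: Callable[[list[dict]], str], task: str, max_steps: int = 5) -> str:
    """Minimal processing loop: ask the LLM, run any tool call, feed back the result."""
    messages = [{"role": "user", "content": task}]
    for _ in range(max_steps):
        raw = llm(messages)
        messages.append({"role": "assistant", "content": raw})
        reply = json.loads(raw)
        if "final_answer" in reply:  # the LLM decided the task is done
            return reply["final_answer"]
        result = TOOLS[reply["tool"]](**reply["arguments"])  # execute this sub-step
        messages.append({"role": "tool", "content": str(result)})
    return "stopped: max_steps reached"  # stopping condition


def scripted_llm(messages: list[dict]) -> str:
    # Stand-in for a backbone LLM: first call a tool, then answer using its result.
    if messages[-1]["role"] == "user":
        return json.dumps({"tool": "add", "arguments": {"a": 2, "b": 3}})
    return json.dumps({"final_answer": f"The sum is {messages[-1]['content']}."})


print(run_agent(scripted_llm, "What is 2 + 3?"))  # The sum is 5.
```

The step limit matters in practice. Without it, an LLM that never produces a final answer would keep the loop running indefinitely.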

An LLM agent with access to memory modules, where it can store key information from task executions and load it back into its context for future tasks.

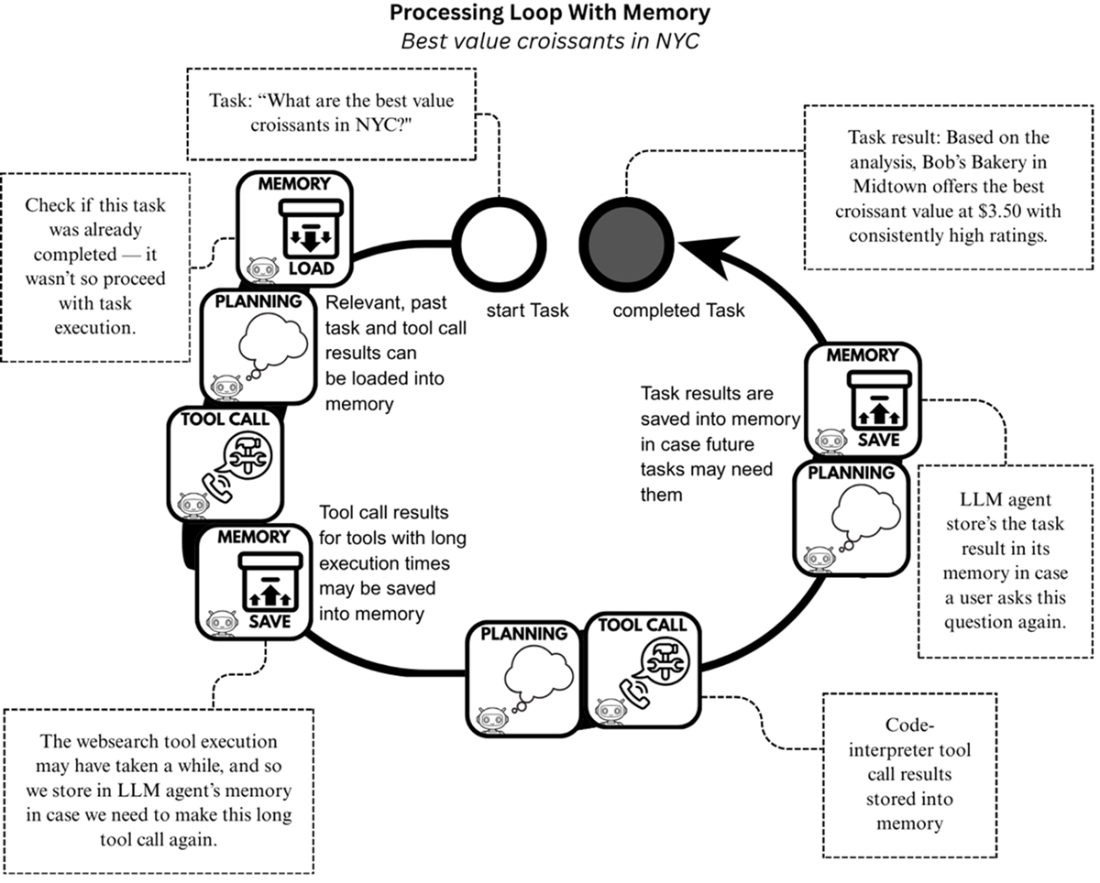

A mental model of the LLM agent processing loop that has memory modules for saving and loading important information obtained during task execution.
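Saving and loading memories can be sketched as a small store that the loop writes to after a task and reads from before the next one. This is a toy under explicit assumptions. `MemoryModule` and `build_context` are hypothetical, and retrieval here is naive word overlap. Real memory modules often use embeddings or other retrieval methods instead.

```python
from dataclasses import dataclass, field


@dataclass
class MemoryModule:
    """Hypothetical memory store: saves key info per task, loads it by keyword overlap."""
    records: list[dict] = field(default_factory=list)

    def save(self, task: str, info: str) -> None:
        self.records.append({"task": task, "info": info})

    def load(self, task: str, limit: int = 3) -> list[str]:
        # Naive retrieval: rank stored records by words shared with the new task.
        words = set(task.lower().split())
        scored = [
            (len(words & set(r["task"].lower().split())), r["info"])
            for r in self.records
        ]
        relevant = [(score, info) for score, info in scored if score > 0]
        relevant.sort(key=lambda pair: pair[0], reverse=True)
        return [info for _, info in relevant[:limit]]


def build_context(task: str, memory: MemoryModule) -> str:
    # Loaded memories are placed before the task so the LLM can reuse them.
    notes = "\n".join(f"- {m}" for m in memory.load(task))
    if not notes:
        return f"Task: {task}"
    return f"Relevant notes from past tasks:\n{notes}\n\nTask: {task}"


memory = MemoryModule()
memory.save("summarize quarterly sales report", "Sales data lives in sales.csv")
memory.save("book a flight", "User prefers aisle seats")
print(build_context("write the quarterly sales summary", memory))
```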

An LLM agent processing loop with access to human operators. The processing loop is effectively paused each time a human operator is required to provide input.
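One common way to add a human-in-the-loop checkpoint is to gate specific tool calls behind operator approval, so the loop blocks until a person answers. The sketch assumes that design. `CRITICAL_TOOLS`, `execute_with_review`, and the example tools are hypothetical. The operator is an injectable `ask_human` callable that defaults to Python's built-in `input`, which makes the checkpoint easy to script in tests.

```python
from typing import Callable

# Hypothetical set of tool names considered critical enough to need human review.
CRITICAL_TOOLS = {"send_email", "delete_file"}


def execute_with_review(
    tool_name: str,
    arguments: dict,
    tools: dict[str, Callable[..., str]],
    ask_human: Callable[[str], str] = input,
) -> str:
    """Run a tool call, pausing for a human operator when the tool is critical."""
    if tool_name in CRITICAL_TOOLS:
        # The processing loop blocks here until the operator answers.
        answer = ask_human(f"Approve {tool_name}({arguments})? [y/n] ")
        if answer.strip().lower() != "y":
            return f"Human operator rejected call to {tool_name}."
    return tools[tool_name](**arguments)


tools = {
    "send_email": lambda to, body: f"Email sent to {to}.",
    "lookup": lambda query: f"Results for {query!r}.",
}
# Non-critical call: runs without pausing.
print(execute_with_review("lookup", {"query": "MCP"}, tools))
# Critical call: a scripted operator rejects it (omit ask_human to prompt interactively).
print(execute_with_review("send_email", {"to": "a@example.com", "body": "hi"}, tools,
                          ask_human=lambda prompt: "n"))
```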

Multiple LLM agents collaborating to complete an overarching task. The outcomes of each LLM agent’s processing loop are combined to form the overall task result.
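The collaboration pattern in this figure can be sketched as routing subtasks to specialized agents and combining their outcomes. To keep the sketch small, the task decomposition is assumed to be given as a dict and each agent's full processing loop is replaced by a stub callable. In a real MAS, an LLM would typically do the decomposition and the agents would communicate over a protocol such as A2A. `Agent`, `run_mas`, and the agents below are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Agent:
    name: str
    specialty: str
    run: Callable[[str], str]  # stub standing in for the agent's full processing loop


def run_mas(subtasks: dict[str, str], agents: list[Agent]) -> str:
    """Route each subtask to the agent whose specialty matches, then combine outcomes."""
    by_specialty = {agent.specialty: agent for agent in agents}
    outcomes = []
    for specialty, subtask in subtasks.items():
        agent = by_specialty[specialty]
        outcomes.append(f"[{agent.name}] {agent.run(subtask)}")
    return "\n".join(outcomes)  # the combined result of the overarching task


agents = [
    Agent("researcher", "research", lambda t: f"collected notes on {t}"),
    Agent("writer", "writing", lambda t: f"drafted {t}"),
]
report = run_mas({"research": "LLM agents", "writing": "the summary section"}, agents)
print(report)
```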

A first look at the llm-agents-from-scratch framework that we’ll build together.

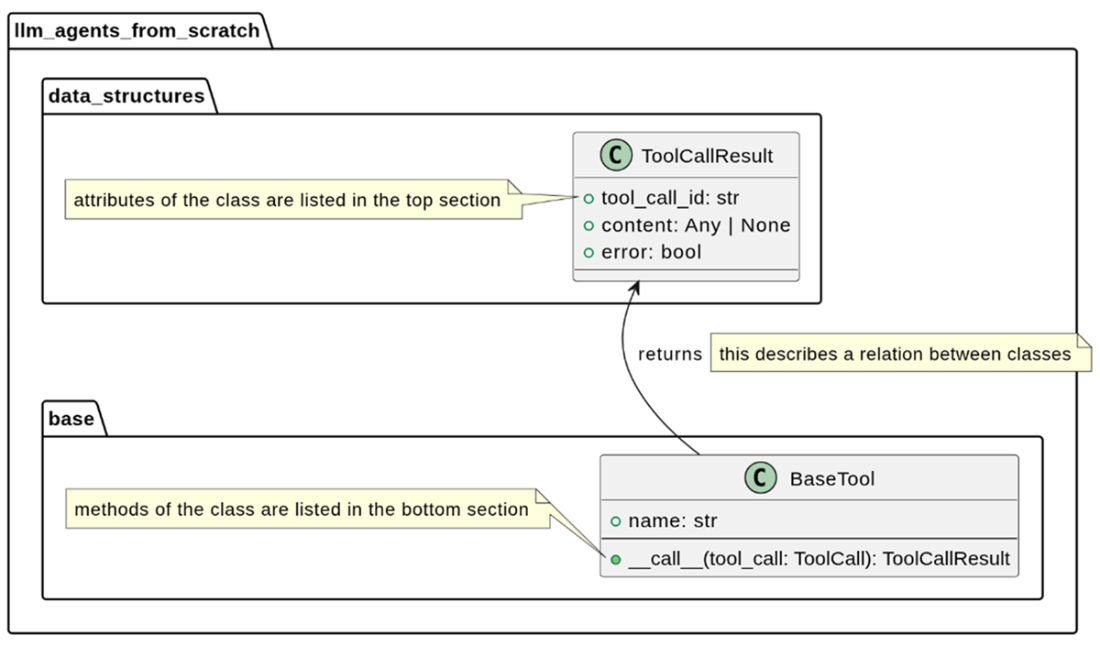

A simple UML class diagram showing two classes from the llm-agents-from-scratch framework. The BaseTool class lives in the base module, while the ToolCallResult class lives in the data_structures module. Each class box lists that class's attributes and methods, and the diagram also shows the relationship between the two classes.

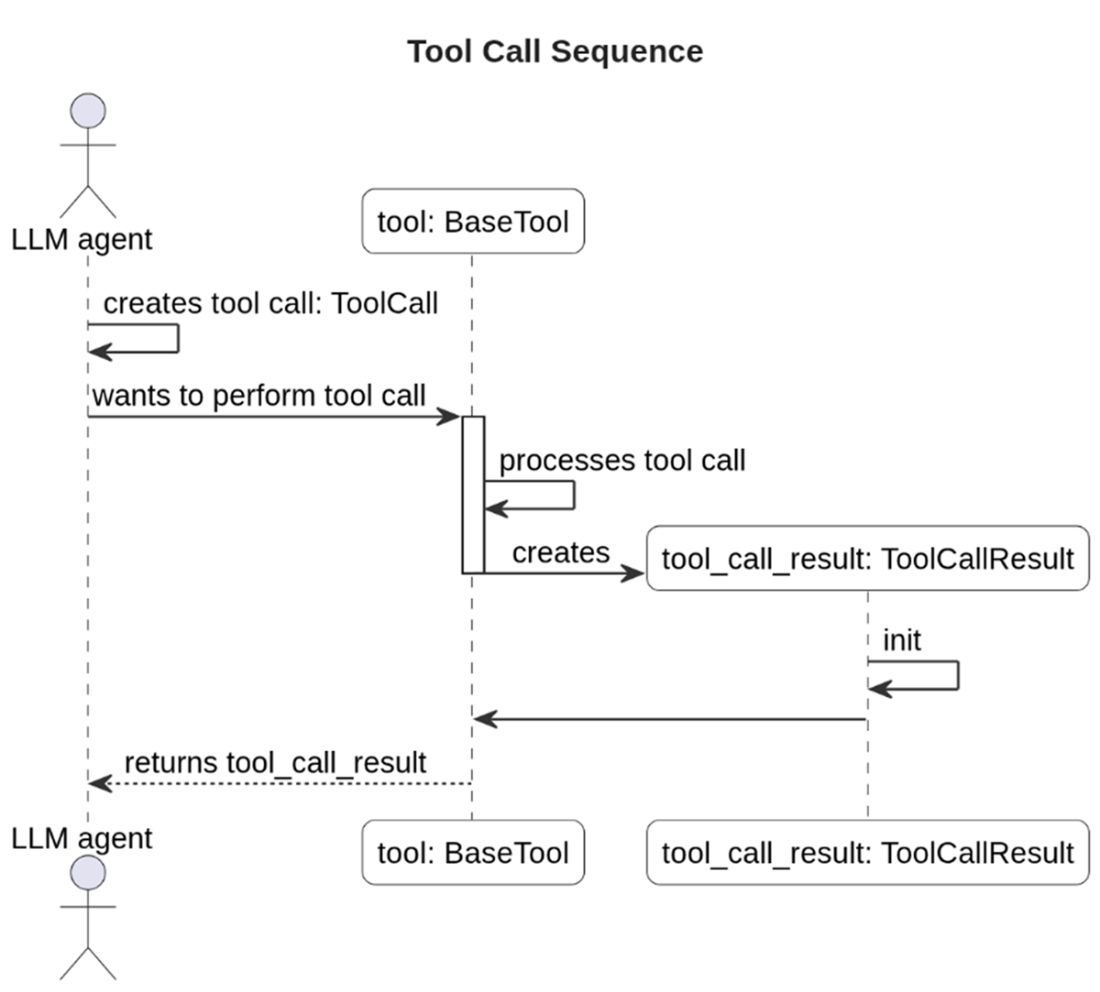

A UML sequence diagram illustrating the flow of a tool call. First, an LLM agent prepares a ToolCall object and invokes the BaseTool, which initiates the processing of the tool call. Once processing completes, the BaseTool constructs a ToolCallResult, which is then sent back to the LLM agent.
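The class and sequence diagrams above can be approximated in code. The class names `BaseTool`, `ToolCall`, and `ToolCallResult` come from the captions, but every attribute and method shown here (`tool_name`, `arguments`, `call_id`, `content`, `error`, `__call__`) is our assumption and not the framework's actual definition. `AddTool` is a hypothetical concrete tool. The last lines replay the sequence: the agent prepares a `ToolCall`, invokes the tool, and receives a `ToolCallResult`.

```python
import uuid
from abc import ABC, abstractmethod
from dataclasses import dataclass, field
from typing import Any


@dataclass
class ToolCall:
    """What the LLM agent prepares: which tool to call, with what arguments (assumed fields)."""
    tool_name: str
    arguments: dict[str, Any]
    call_id: str = field(default_factory=lambda: str(uuid.uuid4()))


@dataclass
class ToolCallResult:
    """What the tool sends back to the agent (assumed fields)."""
    tool_call: ToolCall
    content: Any
    error: bool = False


class BaseTool(ABC):
    """Interface every tool implements (assumed members)."""

    @property
    @abstractmethod
    def name(self) -> str: ...

    @property
    @abstractmethod
    def description(self) -> str: ...

    @abstractmethod
    def __call__(self, tool_call: ToolCall) -> ToolCallResult:
        """Process the tool call and construct a ToolCallResult."""


class AddTool(BaseTool):
    @property
    def name(self) -> str:
        return "add"

    @property
    def description(self) -> str:
        return "Add two integers a and b."

    def __call__(self, tool_call: ToolCall) -> ToolCallResult:
        try:
            total = tool_call.arguments["a"] + tool_call.arguments["b"]
            return ToolCallResult(tool_call=tool_call, content=total)
        except (KeyError, TypeError) as exc:  # missing or ill-typed arguments
            return ToolCallResult(tool_call=tool_call, content=str(exc), error=True)


# The sequence from the diagram: prepare a ToolCall, invoke the tool, receive the result.
call = ToolCall(tool_name="add", arguments={"a": 2, "b": 3})
result = AddTool()(call)
print(result.content, result.error)  # 5 False
```

Keeping the result linked to the originating `ToolCall` lets the agent match each result to the request that produced it when several calls are in flight.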

The build plan for our llm-agents-from-scratch framework. We will build this framework in four stages. In the first stage, we’ll implement the interfaces for tools and LLMs, as well as our LLM agent class. In the second stage, we’ll make our LLM agent MCP compatible so that MCP tools can be equipped to the backbone LLM. In stage three, we will implement the human-in-the-loop pattern and add memory modules to our LLM agent. And, in the fourth and final stage, we’ll incorporate A2A and other multi-agent coordination logic into our framework to enable building MAS.

Summary

- LLMs have become very powerful text generators that have been applied successfully to tasks like text summarization, question answering, and text classification. However, they have a critical limitation: they cannot act and can only express an intent to act (such as making a tool call) through text. That’s where LLM agents come in: they provide the ability to carry out the intended actions.

- Applications for LLM agents are many, such as report generation, deep research, computer use and coding.

- With MAS, individual LLM agents collaborate to collectively perform tasks.

- Many applications for LLM agents can further benefit from MAS. In principle, MAS excel when complex tasks can be decomposed into smaller subtasks on which specialized LLM agents outperform general-purpose ones.

- LLM agents are systems composed of an LLM and tools that can act autonomously to perform tasks.

- LLM agents use a processing loop to execute tasks. Tool calling and planning capabilities are key components of that processing loop.

- Protocols like MCP and A2A have helped to create a vibrant LLM agent ecosystem that is powering the growth of LLM agents and their applications. MCP is a protocol developed by Anthropic that has paved the way for LLM agents to use third-party provided tools.

- A2A is a protocol developed by Google to standardize how agent-to-agent interactions are conducted in MAS.

- Building an LLM agent requires infrastructure elements like interfaces for LLMs, tools, and tasks.

- We’ll build LLM agents, MAS, and all the required infrastructure from scratch into a Python framework called llm-agents-from-scratch.

Build a Multi-Agent System (from Scratch) ebook for free