1 Meet Apache Airflow

Modern organizations rely on timely, high‑quality data, and the chapter introduces Apache Airflow as an orchestration system that coordinates the many steps involved in producing it. It frames data pipelines as workflows composed of dependent tasks and explains why representing them as directed acyclic graphs helps enforce order, avoid circular dependencies, and reason about execution. The authors set expectations for a practical, example‑driven journey: you’ll learn how to model pipelines, understand where Airflow fits among workflow tools, assess whether it suits your needs, and take your first steps building reliable data workflows.

The chapter contrasts graph‑based pipelines with simple sequential scripts, showing advantages such as parallelism, clearer dependency management, and targeted reruns when tasks fail. It surveys the workflow management landscape and then focuses on Airflow’s approach: defining pipelines as code (primarily Python), enabling dynamic task/DAG generation, and integrating broadly via providers to databases, big‑data systems, and cloud services. You learn how scheduling works (including cron‑like expressions), how core components collaborate (DAG processor, scheduler, workers, triggerer, API server), and how the web UI supports monitoring, debugging, retries, and easy reruns. A key scheduling concept is running incrementally over time intervals and backfilling past periods to build or recompute datasets efficiently.

Guidance is provided on when Airflow is a great fit—batch or event‑triggered recurring workflows, time‑bucketed data processing, rich integrations, and teams that treat pipelines as software—and when it isn’t, such as real‑time streaming needs or teams that prefer low‑/no‑code tools. The chapter notes Airflow’s open‑source maturity, enterprise‑scale track record, and availability of managed offerings, while acknowledging gaps like built‑in lineage or versioning that may require complementary tools. It closes with a roadmap for the book: foundational concepts for building DAGs, deeper topics like dynamic DAGs, custom operators, and containerized tasks, and finally deployment, monitoring, security, and cloud architectures, along with the expected technical prerequisites.

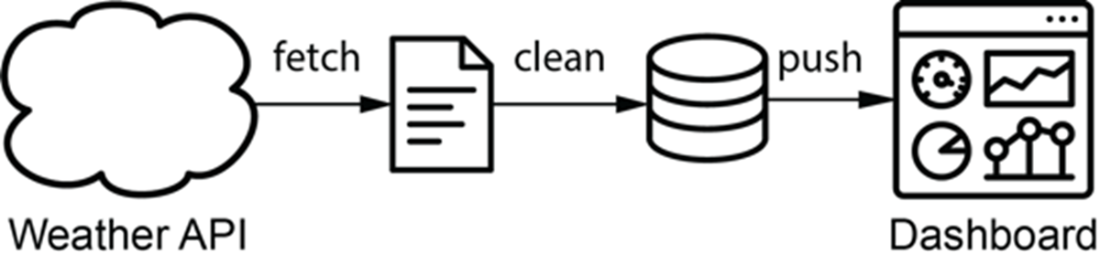

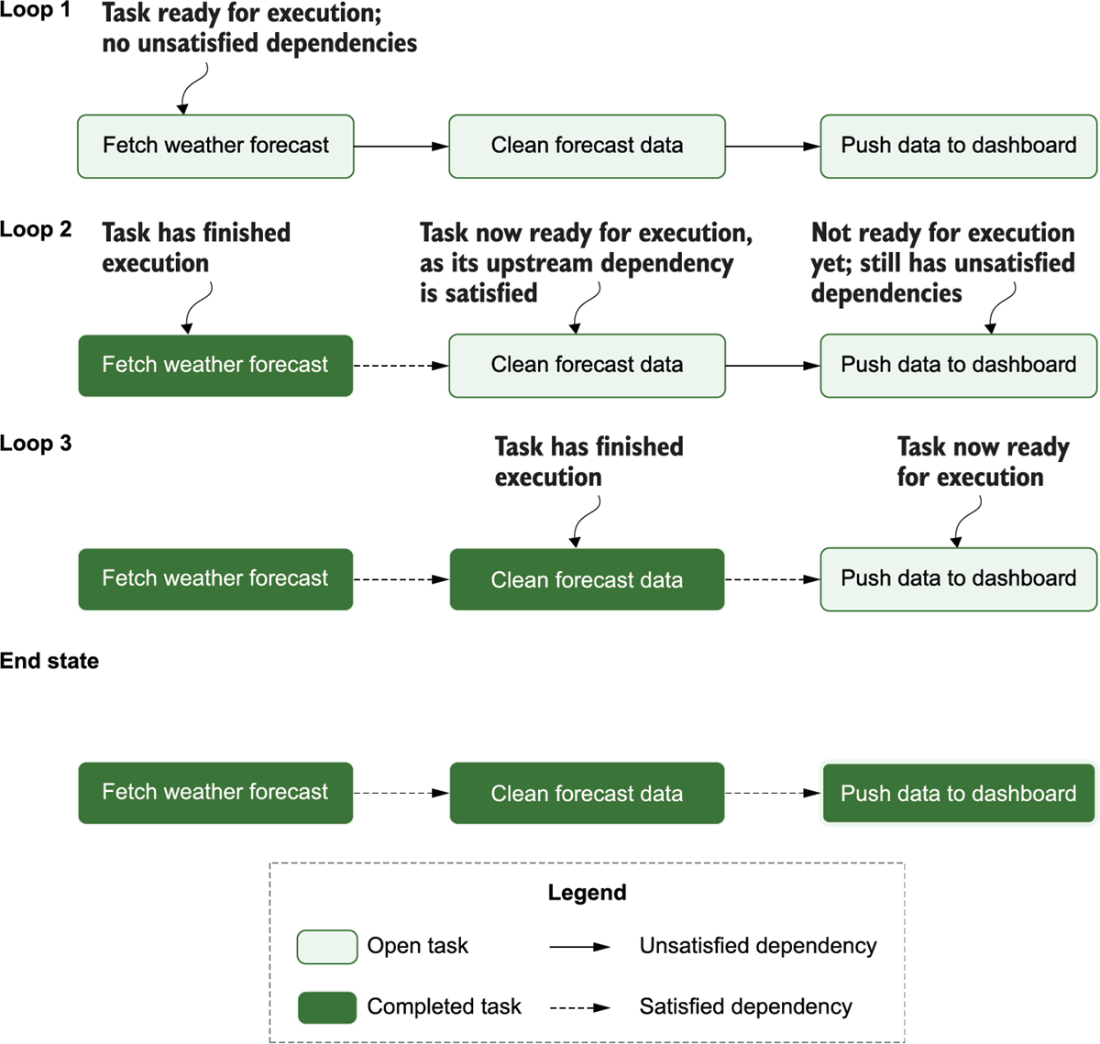

For this weather dashboard, weather data is fetched from an external API and fed into a dynamic dashboard.

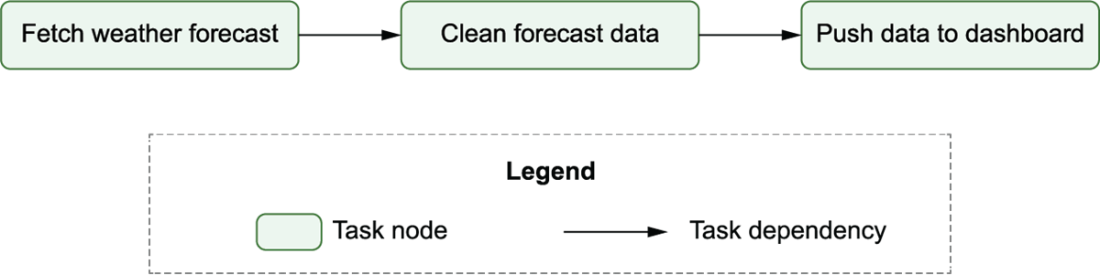

Graph representation of the data pipeline for the weather dashboard. Nodes represent tasks and directed edges represent dependencies between tasks (with an edge pointing from task 1 to task 2, indicating that task 1 needs to be run before task 2).

Cycles in graphs prevent task execution due to circular dependencies. In acyclic graphs (top), there is a clear path to execute the three different tasks. However, in cyclic graphs (bottom), there is no longer a clear execution path due to the interdependency between tasks 2 and 3.
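The difference between the two graphs can be checked in a few lines. The following is a minimal sketch using Python's standard `graphlib` module, not Airflow's own validation code; the task names and the `execution_order` helper are placeholders chosen for illustration.

```python
from graphlib import CycleError, TopologicalSorter

# Each key is a task; its value is the set of tasks that must finish first.
# Task names are placeholders for the three tasks in the figure.
acyclic = {"task_1": set(), "task_2": {"task_1"}, "task_3": {"task_2"}}

# Same tasks, but task 2 now also waits on task 3, which creates a cycle.
cyclic = {"task_1": set(), "task_2": {"task_1", "task_3"}, "task_3": {"task_2"}}


def execution_order(graph):
    """Return a valid task order, or None if the graph contains a cycle."""
    try:
        return list(TopologicalSorter(graph).static_order())
    except CycleError:
        return None


acyclic_order = execution_order(acyclic)
cyclic_order = execution_order(cyclic)
```

For the acyclic graph a valid order exists; for the cyclic graph no order can be produced, because tasks 2 and 3 each wait on the other.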

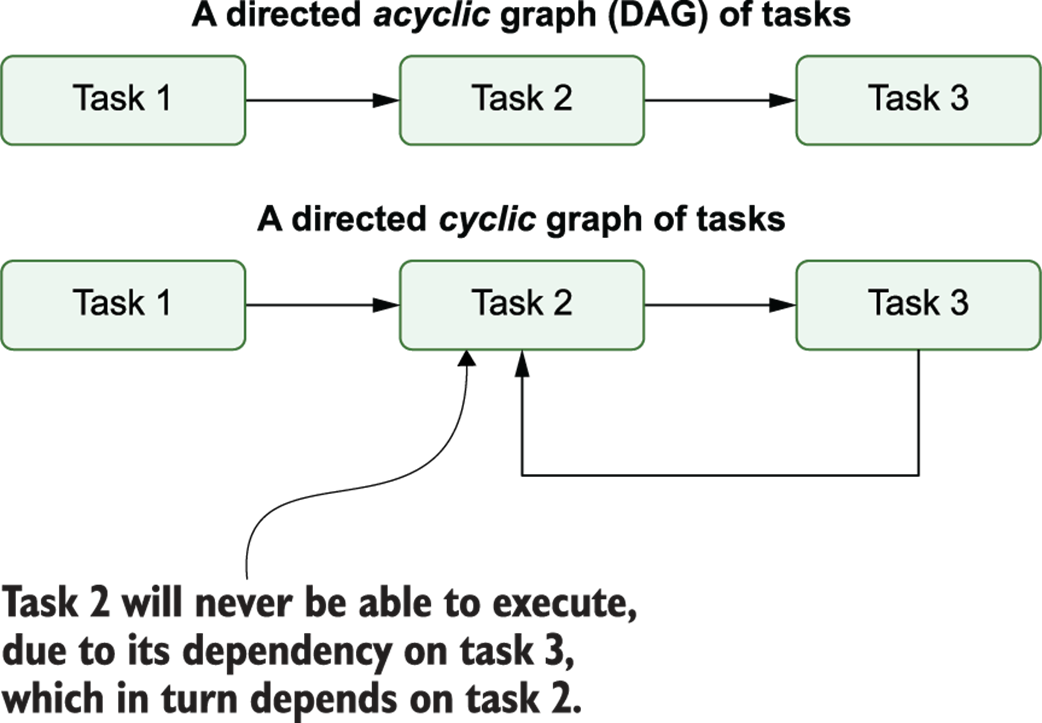

Using the DAG structure to execute tasks in the data pipeline in the correct order. The figure depicts each task’s state during each loop through the algorithm, demonstrating how this leads to the completed execution of the pipeline (end state).
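The looping algorithm described in this caption can be sketched in plain Python. This is a simplified illustration, not Airflow's scheduler: the task names, the `dependencies` mapping, and the `run_pipeline` function are all assumptions made for the example.

```python
# Upstream dependencies per task; names are illustrative placeholders.
dependencies = {
    "fetch_forecast": [],
    "clean_forecast": ["fetch_forecast"],
    "push_to_dashboard": ["clean_forecast"],
}


def run_pipeline(dependencies, execute):
    """Run every task once, only after all of its upstream tasks have finished."""
    completed = []
    open_tasks = set(dependencies)
    while open_tasks:
        # Step 1: queue each open task whose upstream tasks are all complete.
        queue = sorted(
            task for task in open_tasks
            if all(upstream in completed for upstream in dependencies[task])
        )
        if not queue:
            raise ValueError("No runnable task: cycle or unknown dependency")
        # Step 2: execute the queued tasks and mark them as completed.
        for task in queue:
            execute(task)
            completed.append(task)
            open_tasks.remove(task)
    return completed


# A no-op stands in for the real work of each task.
executed = run_pipeline(dependencies, execute=lambda task: None)
```

Each pass through the `while` loop corresponds to one loop in the figure: tasks move from open to queued to completed until nothing is left.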

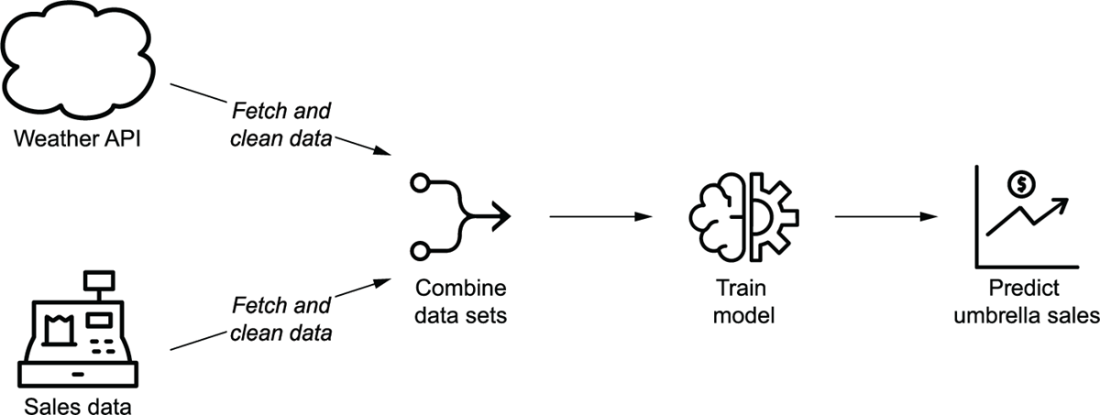

Overview of the umbrella demand use case, in which historical weather and sales data are used to train a model that predicts future sales demands depending on weather forecasts

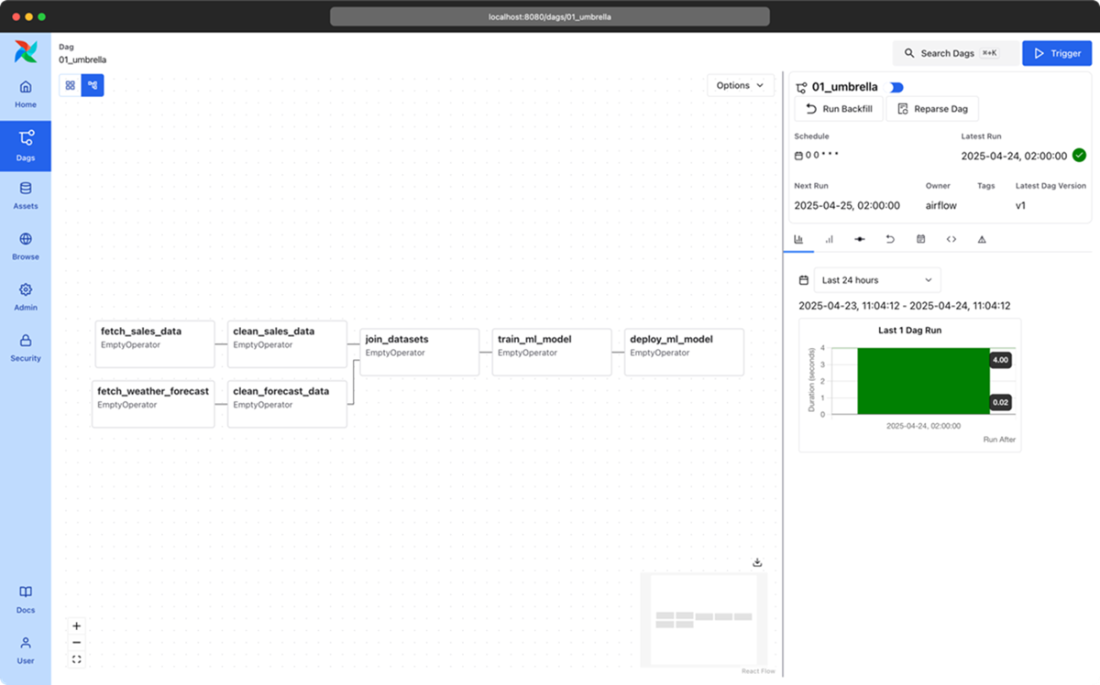

Independence between sales and weather tasks in the graph representation of the data pipeline for the umbrella demand forecast model. The two sets of fetch/cleaning tasks are independent as they involve two different data sets (the weather and sales data sets). This independence is indicated by the lack of edges between the two sets of tasks.
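To see why missing edges matter, the sketch below groups the umbrella pipeline into batches of tasks that could run at the same time. It uses Python's standard `graphlib` module; the task names (including the join, train, and deploy steps) and the `parallel_batches` helper are assumptions for illustration and not taken from Airflow.

```python
from graphlib import TopologicalSorter

# Each key is a task; its value is the set of upstream tasks (assumed names).
umbrella = {
    "fetch_weather": set(),
    "clean_weather": {"fetch_weather"},
    "fetch_sales": set(),
    "clean_sales": {"fetch_sales"},
    "join_datasets": {"clean_weather", "clean_sales"},
    "train_model": {"join_datasets"},
    "deploy_model": {"train_model"},
}


def parallel_batches(graph):
    """Group tasks into batches; tasks in one batch do not depend on each other."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # all tasks whose upstreams are done
        batches.append(ready)
        sorter.done(*ready)  # mark the whole batch as finished
    return batches


batches = parallel_batches(umbrella)
```

The first two batches each contain one weather task and one sales task, which is exactly the independence the figure shows. Waiting for a whole batch before starting the next is a simplification: a real executor could start cleaning the weather data as soon as its own fetch finishes.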

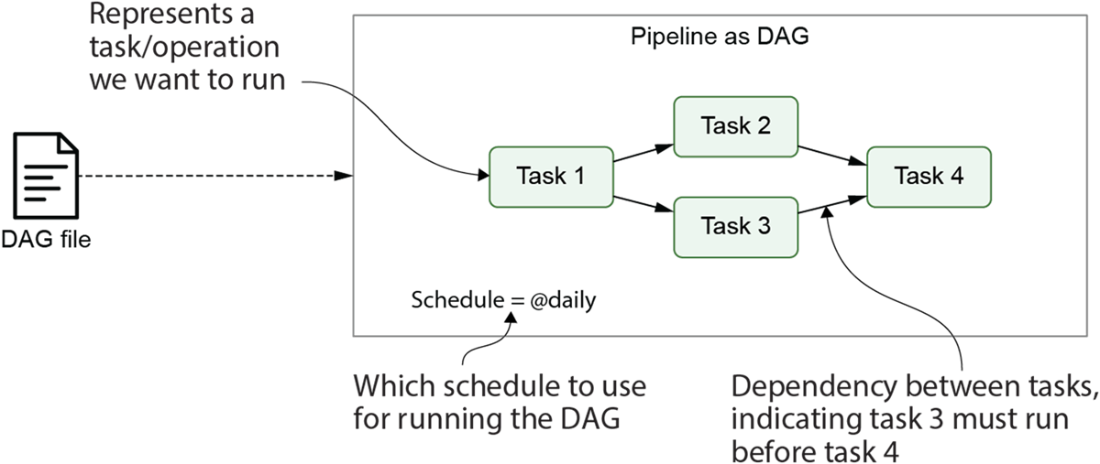

Airflow pipelines are defined as DAGs using Python code in DAG files. Each DAG file typically defines one DAG, which describes the different tasks and their dependencies. Besides this, the DAG also defines a schedule interval that determines when the DAG is executed by Airflow.

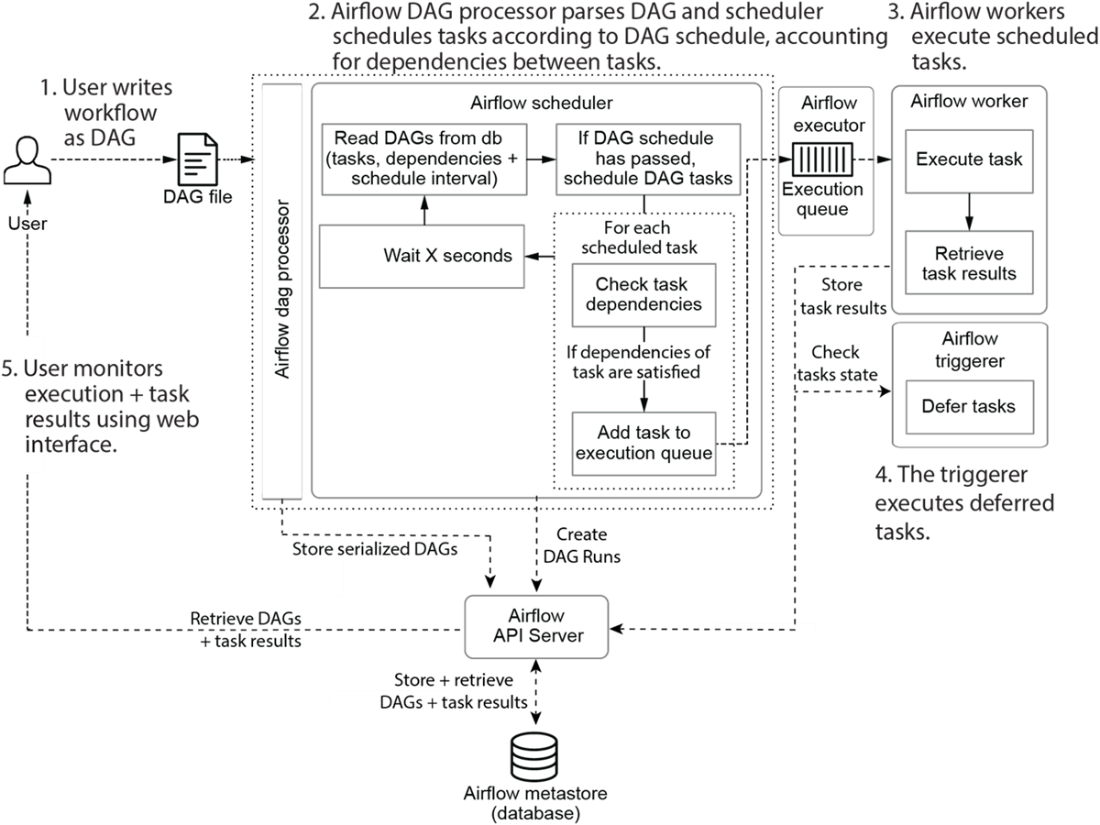

The main components involved in Airflow are the Airflow API server, scheduler, DAG processor, triggerer and workers.

Developing and executing pipelines as DAGs using Airflow. Once the user has written the DAG, the DAG processor and scheduler ensure that the DAG is run at the right moment. While the DAG is running, the user can monitor its progress and output at any time.

The login page for the Airflow web interface. In the code examples accompanying this book, a default user “airflow” is provided with the password “airflow”.

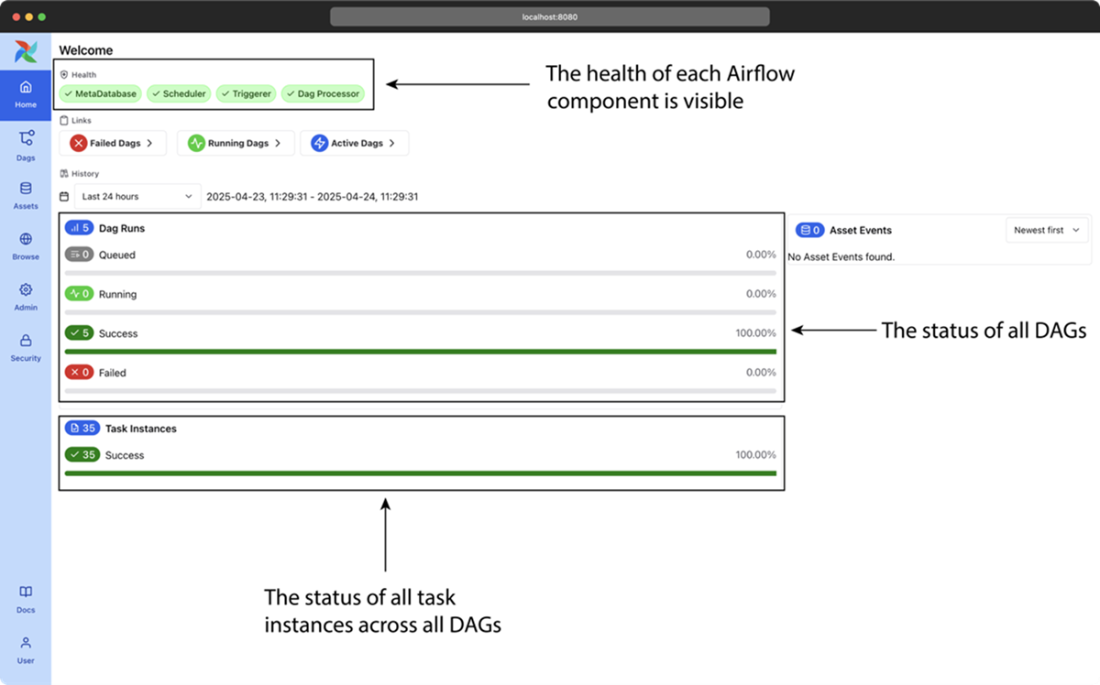

The main page of Airflow’s web interface, showing a high-level overview of all DAGs and their recent results.

The DAGs page of Airflow’s web interface, showing a high-level overview of all DAGs and their recent results.

The graph view in Airflow’s web interface, showing an overview of the tasks in an individual DAG and the dependencies between these tasks

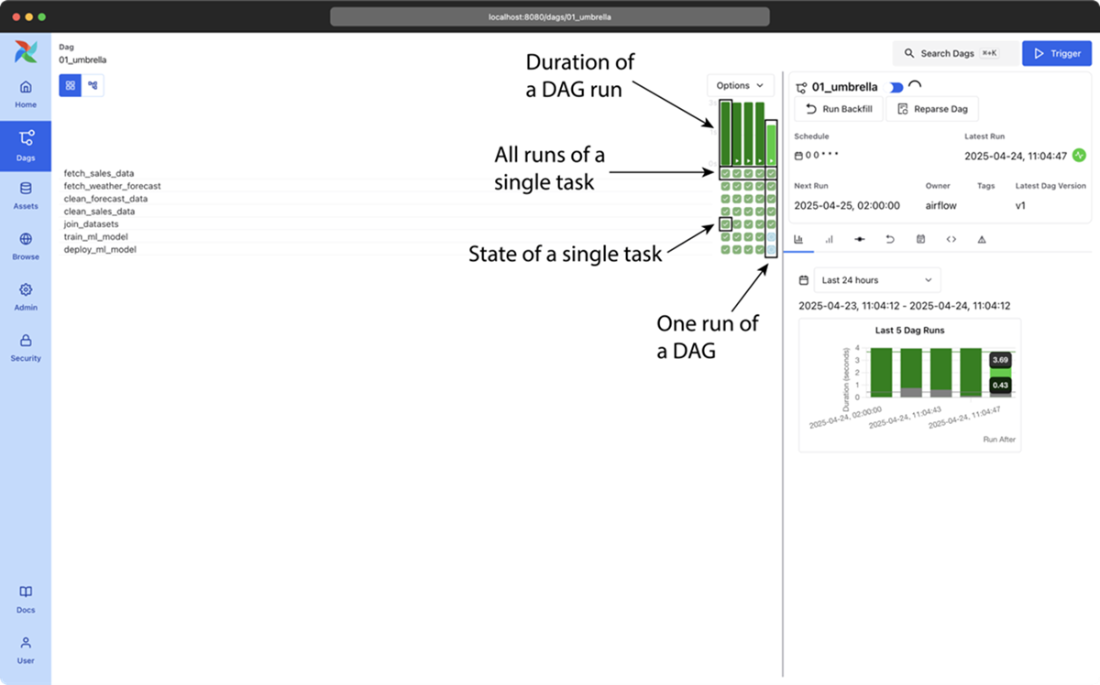

Airflow’s grid view, showing the results of multiple runs of the umbrella sales model DAG (the most recent run plus historical runs). Each column shows the status of one execution of the DAG, and each row shows the status of all executions of a single task. Colors (which you can see in the e-book version) indicate the result of the corresponding task. Users can also click on the task “squares” for more details about a given task instance, or to manage the state of a task so that it can be rerun by Airflow, if desired.

Summary

- Directed Acyclic Graphs (DAGs) are a visual tool used to represent data workflows in data processing pipelines. A node in a DAG denotes a task to be performed, and edges define the dependencies between tasks. Compared to a single monolithic script, this representation is easier to understand visually, simplifies debugging and rerunning, and makes it possible to run independent tasks in parallel.

- In Airflow, DAGs are defined using Python files. These scripts outline the order of task execution and their interdependencies. Airflow parses these files to construct and understand the DAG's structure, enabling task orchestration and scheduling. Airflow 3.0 introduced the option of using other languages, but this book focuses on Python.

- Although many workflow managers have been developed over the years for executing graphs of tasks, Airflow has several key features that make it uniquely suited for implementing efficient, batch-oriented data pipelines.

- Airflow excels as a workflow orchestration tool due to its intuitive design, scheduling capabilities, and extensible framework. It provides a rich user interface for monitoring and managing tasks in data processing workflows.

- Airflow comprises five key components:

  - DAG Processor: Reads and parses the DAG files and stores the resulting serialized DAGs in the metastore for use by (among others) the scheduler.

  - Scheduler: Reads the DAGs parsed by the DAG processor, determines whether their schedule intervals have elapsed, and queues their tasks for execution.

  - Worker: Executes the tasks assigned to it by the scheduler.

  - Triggerer: Handles the execution of deferred tasks, which are waiting for external events or conditions.

  - API Server: Among other things, presents a user interface for visualizing and monitoring the DAGs and their execution status. The API server also acts as the interface between all Airflow components.

- Airflow lets you set a schedule for each DAG, specifying when the pipeline should be executed. In addition, Airflow’s built-in mechanisms can manage task failures automatically.

- Airflow is well suited for batch-oriented data pipelines, offering sophisticated scheduling options that enable regular, incremental data processing jobs. On the other hand, Airflow is not the right choice for streaming workloads or for implementing highly dynamic pipelines in which the DAG structure changes from one day to the next.
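The idea of regular, incremental processing from the last point can be sketched with plain `datetime` arithmetic. This is only an illustration of splitting time into daily intervals and handling one interval at a time; Airflow's real scheduling semantics are richer, and the dates, sample rows, and helper names below are made up for the example.

```python
from datetime import datetime, timedelta


def daily_intervals(start, end):
    """Return (interval_start, interval_end) for every full day between start and end."""
    intervals = []
    current = start
    while current + timedelta(days=1) <= end:
        intervals.append((current, current + timedelta(days=1)))
        current += timedelta(days=1)
    return intervals


def select_rows(rows, interval):
    """Keep only the rows whose timestamp falls inside the half-open interval."""
    interval_start, interval_end = interval
    return [row for row in rows if interval_start <= row[0] < interval_end]


# Hypothetical start date of the pipeline and hypothetical "current" time.
start = datetime(2024, 1, 1)
now = datetime(2024, 1, 4, 12, 0)

# Every completed daily interval so far; rerunning all of them is a backfill.
intervals = daily_intervals(start, now)

# Made-up (timestamp, value) rows standing in for source data.
rows = [
    (datetime(2024, 1, 1, 9, 0), 5),
    (datetime(2024, 1, 2, 15, 0), 7),
    (datetime(2024, 1, 2, 23, 0), 1),
]

# An incremental run for the second interval touches only that day's rows.
second_day_rows = select_rows(rows, intervals[1])
```

Each interval is processed on its own, so a regular run only handles the newest delta, while a backfill simply repeats the same step for every past interval.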

FAQ

What is a data pipeline in the context of Airflow?

A data pipeline is a sequence of tasks that produce a result, such as ingesting, transforming, and loading data. In Airflow, pipelines are modeled as directed acyclic graphs (DAGs) where nodes are tasks and directed edges define task dependencies and order of execution.

Why represent pipelines as DAGs instead of writing a single sequential script?

DAGs make dependencies explicit, enable parallel execution of independent branches, and allow rerunning only the failed tasks (and their downstream dependents) instead of the entire script. This improves clarity, performance, and recovery from failures.

What does “acyclic” mean in a DAG, and why is it important?

Acyclic means the graph has no cycles. Without cycles, there are no circular dependencies (A depends on B while B depends on A), which would otherwise deadlock execution. The acyclic property ensures there is a valid order to run tasks.

How does Airflow determine the order in which tasks run?

Airflow follows the DAG’s dependencies: it queues tasks whose upstream dependencies are complete. The scheduler repeatedly checks dependencies, adds ready tasks to a queue, and workers execute them until all tasks finish.

What are Airflow’s main components and their roles?

- DAG Processor: parses DAG files and stores metadata in the metastore (via the API server)

- Scheduler: evaluates schedules and dependencies, enqueues runnable tasks

- Workers: execute tasks in parallel and report results

- Triggerer: manages async/deferred tasks that wait on external events/conditions

- API Server: provides the web UI and API, and mediates all access to the metastore

How are pipelines defined in Airflow?

Pipelines are defined as Python code in DAG files that declare tasks, dependencies, and scheduling. As of Airflow 3.0, additional languages are supported, but Python remains primary and is the language Airflow itself is written in.

How does Airflow schedule and run pipelines?

You assign a schedule (e.g., hourly, daily, cron-like). Airflow tracks schedule intervals and triggers DAG runs when intervals elapse. It then resolves dependencies, queues ready tasks, and workers execute them. The approach supports time-based semantics critical for data workflows.

What monitoring and failure-handling features does Airflow provide?

- Web UI with DAG, graph, and grid views for real-time and historical runs

- Task logs accessible from the UI

- Automatic retries with configurable delays

- Ability to clear and rerun failed tasks (and downstream dependents)

- Health indicators for core components
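The retry behavior in the list above can be illustrated with a small helper. This is a hedged sketch of the general idea rather than Airflow's implementation: the parameter names echo Airflow's task-level `retries` and `retry_delay` settings, but `run_with_retries` and `flaky_task` are hypothetical.

```python
import time


def run_with_retries(task, retries=2, retry_delay=0.0):
    """Call task(); after a failure wait retry_delay seconds and try again.

    Returns (result, attempts). Re-raises once `retries` extra attempts are used up.
    """
    attempt = 0
    while True:
        attempt += 1
        try:
            return task(), attempt
        except Exception:
            if attempt > retries:
                raise  # no retries left: surface the failure
            time.sleep(retry_delay)


# Hypothetical task that fails twice before succeeding.
calls = {"count": 0}


def flaky_task():
    calls["count"] += 1
    if calls["count"] < 3:
        raise RuntimeError("temporary failure")
    return "done"


result, attempts = run_with_retries(flaky_task, retries=2, retry_delay=0.0)
```

With two retries allowed, the task succeeds on its third attempt. A task that keeps failing would raise after the last retry, which is the point at which you would inspect the logs and clear the task to rerun it.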

What are incremental loading and backfilling in Airflow?

Airflow’s schedule intervals let you process data in discrete time windows (incremental “deltas” instead of full reloads). Backfilling allows running a DAG for past intervals to create or recompute historical results, useful after code changes or when seeding datasets.

When is Airflow a good fit, and when is it not?

Good fit:

- Batch or event-triggered workflows with time-based schedules

- Complex dependency graphs and parallelism needs

- Integrations across many systems via providers

- Engineering-oriented teams applying software best practices

- Use cases needing easy backfills and open-source flexibility

Not ideal:

- True real-time/streaming event processing

- Teams seeking no/low-code UIs over code-defined workflows

- Needs for built-in data lineage/versioning (require complementary tools)

Data Pipelines with Apache Airflow, Second Edition ebook for free
