1 Getting to know Kafka as an architect

Apache Kafka has evolved from a high‑throughput message bus into a foundational platform for real‑time, event‑driven systems. This chapter introduces why architects increasingly favor events over synchronous, point‑to‑point integrations: producers publish once, many consumers react, and systems become more autonomous and resilient. It takes an architect’s perspective: how Kafka works, the tradeoffs it imposes, and the patterns and anti‑patterns that determine its long‑term value. The focus is on design, data modeling, schema evolution, integration strategies, and the balance between performance, ordering, and fault tolerance, rather than on client API details.

The chapter outlines the core concepts and components that shape architectural decisions. Kafka implements publish‑subscribe with persistent, replicated storage: producers write events, consumers pull them and can replay them, and reliability comes from acknowledgments, retries, and fault‑tolerant clustering. Controllers running in KRaft mode manage cluster metadata and broker health, while the commit‑log model preserves ordering within each partition (each partition is its own log) and keeps records immutable until configurable retention removes them. Beyond the broker, a broader ecosystem supports enterprise needs: Schema Registry formalizes data contracts and compatibility rules; Kafka Connect moves data between Kafka and external systems through configuration rather than code; and stream processing frameworks such as Kafka Streams or Apache Flink provide stateful transformations, routing, joins, and exactly‑once processing, enabling low‑latency analytics and operational workflows at scale.
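The commit‑log behavior described above (keyed records, ordering per partition, replay by offset) can be sketched with a toy in‑memory topic. This is an illustration under stated assumptions, not Kafka's implementation: `Topic`, `Record`, and the CRC32 partitioner are hypothetical stand‑ins (Kafka's default partitioner hashes keys with murmur2), but the property shown is the real one: equal keys land in the same partition, so their events stay in order.

```python
import zlib
from dataclasses import dataclass, field


@dataclass
class Record:
    offset: int
    key: str
    value: str


@dataclass
class Topic:
    """Toy in-memory topic: a fixed number of append-only logs (partitions)."""

    num_partitions: int
    partitions: list = field(init=False)

    def __post_init__(self):
        self.partitions = [[] for _ in range(self.num_partitions)]

    def partition_for(self, key: str) -> int:
        # Assumption: CRC32 stands in for Kafka's murmur2-based partitioner.
        # The principle is the same: equal keys always map to the same partition.
        return zlib.crc32(key.encode("utf-8")) % self.num_partitions

    def append(self, key: str, value: str) -> tuple:
        """Append to the key's partition and return (partition, offset)."""
        p = self.partition_for(key)
        log = self.partitions[p]
        log.append(Record(offset=len(log), key=key, value=value))
        return p, len(log) - 1

    def read(self, partition: int, from_offset: int = 0) -> list:
        # Reads never delete records, so any consumer can replay from an older offset.
        return self.partitions[partition][from_offset:]


topic = Topic(num_partitions=3)
for change in ["created", "email changed", "address changed"]:
    topic.append("customer-42", change)
topic.append("customer-7", "created")

p = topic.partition_for("customer-42")
print([r.value for r in topic.read(p) if r.key == "customer-42"])
print([r.value for r in topic.read(p, from_offset=1) if r.key == "customer-42"])  # replay
```

The filter on `r.key` matters because other keys (here `customer-7`) may hash to the same partition; Kafka guarantees order per partition, not a partition per key.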

Applying Kafka in the enterprise requires a clear fit and operational readiness. The chapter contrasts two common uses, durable event delivery for decoupled microservices and long‑lived logs for event sourcing and real‑time enrichment, and notes Kafka’s limits as a general‑purpose query store compared with databases. It highlights non‑functional considerations (requirements gathering, sizing, SLAs, security, observability, testing, and disaster recovery) and compares deployment on premises with managed cloud services, each with its own cost, control, and tooling implications; it also acknowledges alternative streaming platforms. Overall, it lays out a practical roadmap for initiatives such as customer 360 views and modernization programs, with governance, reliability, and sustainable operations as the cornerstones of successful Kafka adoption.
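Much of the sizing work mentioned above starts as back‑of‑the‑envelope arithmetic. The sketch below assumes uncompressed message sizes and ignores index files and free‑space headroom; the partition formula is a commonly used rule of thumb, and the per‑partition throughput figures are hypothetical inputs you would have to measure on your own hardware.

```python
import math

SECONDS_PER_DAY = 86_400


def storage_bytes(msgs_per_sec: int, avg_msg_bytes: int,
                  retention_days: int, replication_factor: int) -> int:
    """Cluster-wide disk needed for retained data (no compression, no headroom)."""
    per_day = msgs_per_sec * avg_msg_bytes * SECONDS_PER_DAY
    # Every replica stores a full copy, so multiply by the replication factor.
    return per_day * retention_days * replication_factor


def partitions_needed(target_mb_s: float,
                      producer_mb_s_per_partition: float,
                      consumer_mb_s_per_partition: float) -> int:
    """Rule of thumb: enough partitions that neither side becomes the bottleneck."""
    return max(math.ceil(target_mb_s / producer_mb_s_per_partition),
               math.ceil(target_mb_s / consumer_mb_s_per_partition))


# Hypothetical workload: 5,000 msg/s of 1 KB, kept 7 days, replication factor 3.
print(storage_bytes(5_000, 1_000, 7, 3))  # bytes across the cluster
# Hypothetical rates: 100 MB/s target, 10 MB/s per partition produce, 20 MB/s consume.
print(partitions_needed(100, 10, 20))
```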

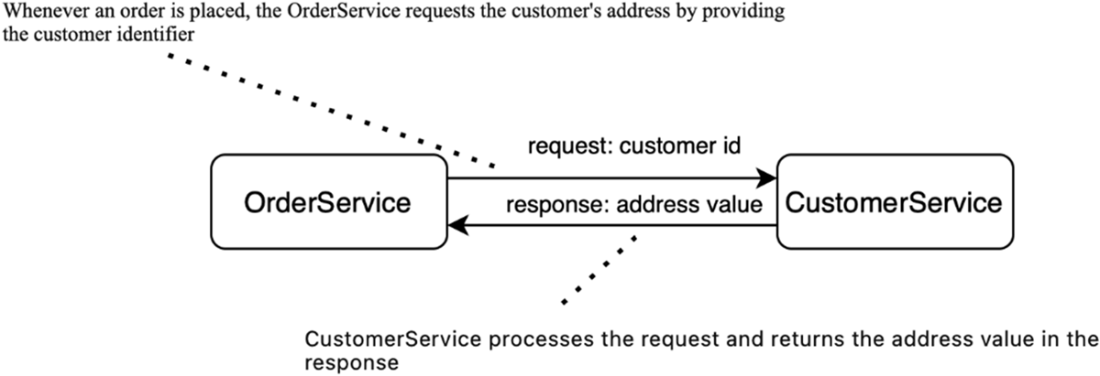

Request-response design pattern

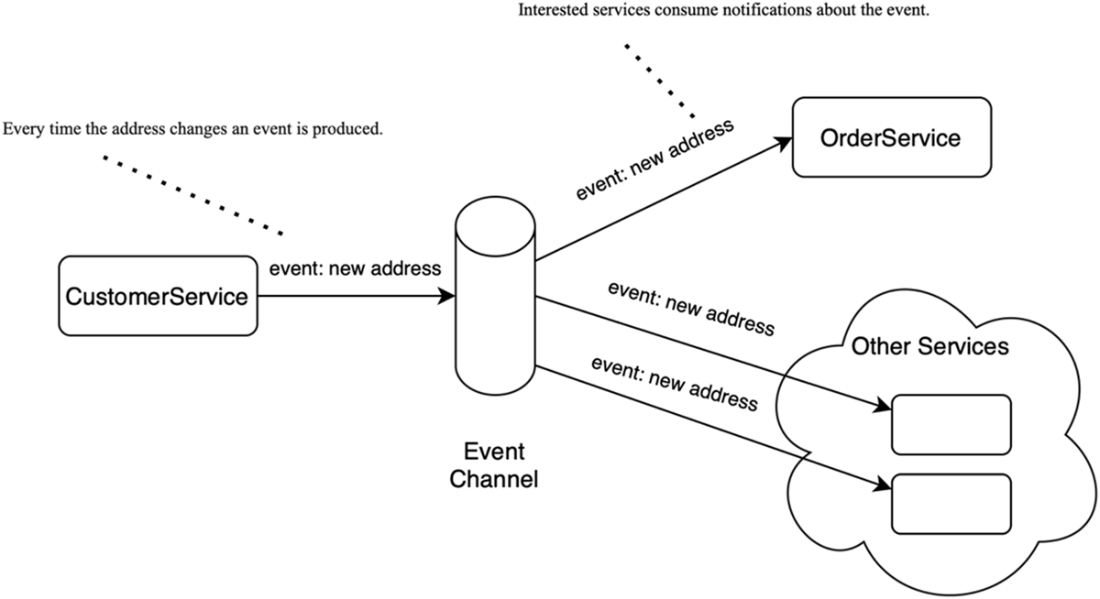

The EDA style of communication: systems communicate by publishing events that describe changes, allowing others to react asynchronously.

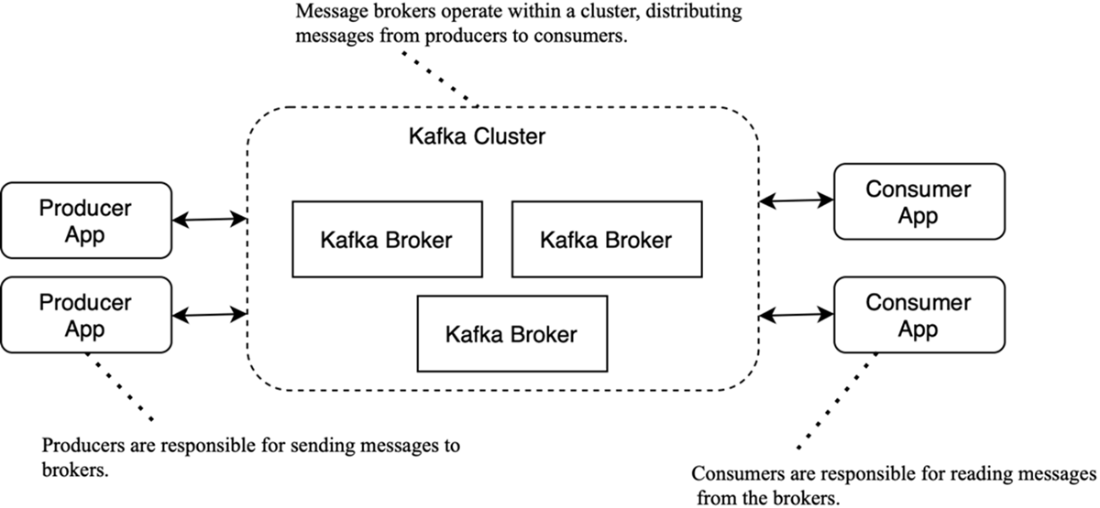

The key components in the Kafka ecosystem are producers, brokers, and consumers.

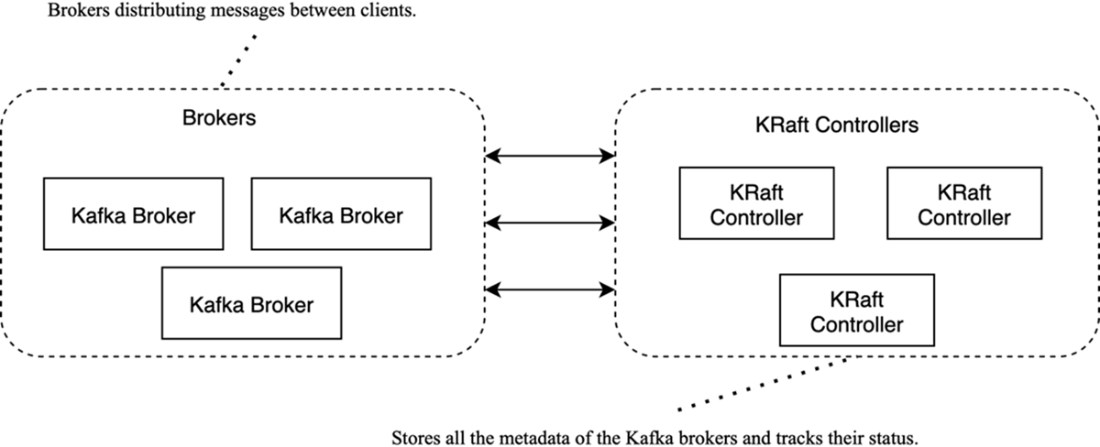

Structure of a Kafka cluster: brokers handle client traffic; KRaft controllers manage metadata and coordination
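KRaft controllers agree on cluster metadata through a Raft‑based quorum, which stays available only while a majority of controller voters is alive. The small helper below (a hypothetical name, written only to make the arithmetic explicit) shows why controller counts are usually odd.

```python
def controller_failures_tolerated(voters: int) -> int:
    """How many controller (voter) failures a majority-based quorum survives."""
    if voters < 1:
        raise ValueError("a quorum needs at least one voter")
    majority = voters // 2 + 1  # smallest group that is more than half
    return voters - majority


for n in (1, 3, 4, 5):
    print(n, "controllers ->", controller_failures_tolerated(n), "failure(s) tolerated")
```

Four controllers tolerate no more failures than three, so an even count adds cost without adding resilience; three or five voters are the typical choices.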

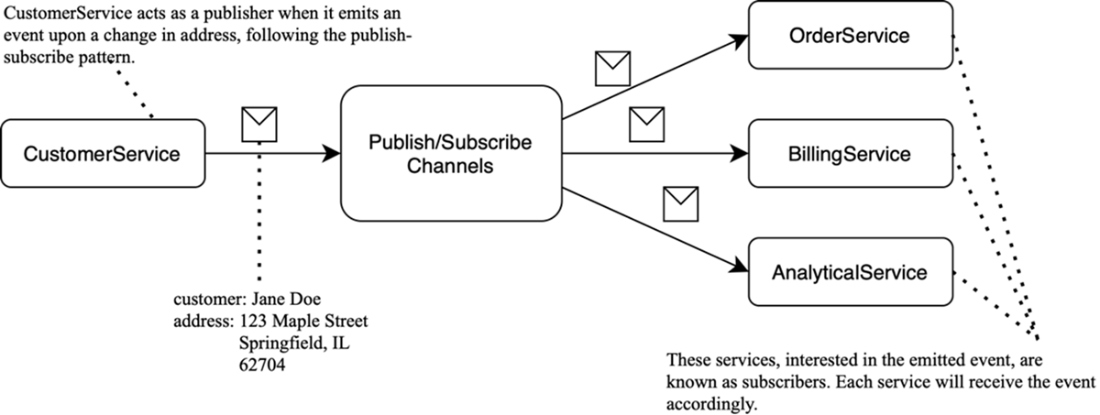

Publish-subscribe example: CustomerService publishes a “customer updated” event to a channel; all subscribers receive it independently.
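The independence of subscribers in this example comes from each one tracking its own read position over the same stored events, roughly what separate Kafka consumer groups do. The toy `Channel` below is a hypothetical illustration, not a Kafka client API; the subscriber names are invented, and advancing the offset on every poll is a simplification that mimics auto‑commit.

```python
from collections import defaultdict


class Channel:
    """Toy publish-subscribe channel backed by one append-only log.

    Each subscriber (roughly, a Kafka consumer group) keeps its own offset,
    so subscribers read independently and at their own pace.
    """

    def __init__(self):
        self.log = []
        self.offsets = defaultdict(int)  # subscriber name -> next offset to read

    def publish(self, event: dict) -> int:
        self.log.append(event)
        return len(self.log) - 1  # offset of the new event

    def poll(self, subscriber: str, max_records: int = 10) -> list:
        start = self.offsets[subscriber]
        batch = self.log[start:start + max_records]
        # Simplification: advancing the offset on every poll mimics auto-commit.
        self.offsets[subscriber] = start + len(batch)
        return batch

    def seek(self, subscriber: str, offset: int) -> None:
        """Rewind (or skip ahead) one subscriber without affecting the others."""
        self.offsets[subscriber] = offset


channel = Channel()
channel.publish({"type": "customer updated", "customerId": 42})  # CustomerService

print(channel.poll("billing"))    # BillingService receives the event
print(channel.poll("marketing"))  # MarketingService receives it too, independently
print(channel.poll("billing"))    # -> [] because billing already consumed it
channel.seek("billing", 0)
print(channel.poll("billing"))    # replayed from offset 0
```

The publisher never learns who the subscribers are; adding a new one is just a new name with its own offset.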

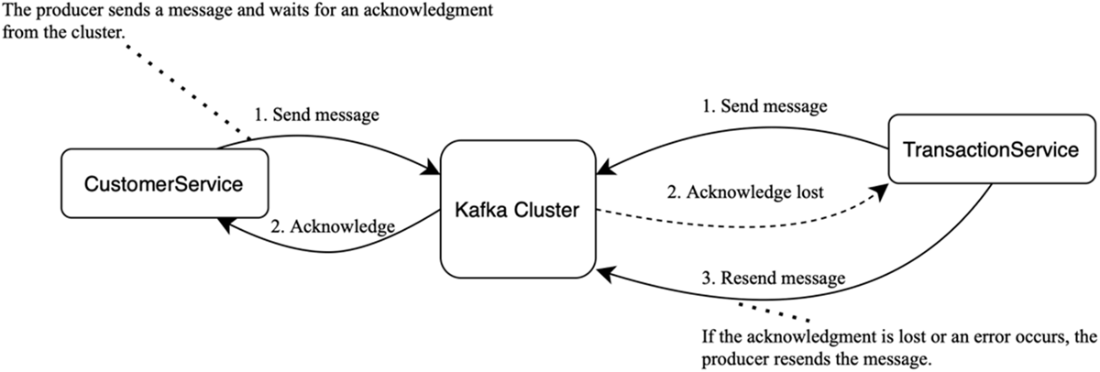

Acknowledgments: Once the cluster accepts a message, it sends an acknowledgment to the service. If no acknowledgment arrives within the timeout, the service treats the send as failed and retries.
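The acknowledge‑or‑retry loop in this figure can be sketched as follows. `send_once` and `make_flaky_sender` are hypothetical stand‑ins for the network round trip and the cluster; a real Kafka producer handles this internally through settings such as `acks` and `enable.idempotence`. The simulated cluster stores every message but loses the first acknowledgments, which exposes the main hazard of retries: duplicates.

```python
class AckTimeout(Exception):
    """No acknowledgment arrived within the timeout."""


def send_with_retries(send_once, message, max_attempts: int = 3):
    """Retry send_once(message) until it is acknowledged or attempts run out.

    send_once is a hypothetical callable for one round trip to the cluster;
    it returns an ack or raises AckTimeout. Returns (ack, attempts_used).
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return send_once(message), attempt
        except AckTimeout:
            if attempt == max_attempts:
                raise  # give up and surface the failure to the caller
            # otherwise treat this send as failed and try again


def make_flaky_sender(lost_acks: int):
    """Hypothetical cluster that stores every message but loses the first N acks."""
    stored = []
    remaining = lost_acks

    def send_once(message):
        nonlocal remaining
        stored.append(message)  # the write succeeded on the broker side...
        if remaining > 0:
            remaining -= 1
            raise AckTimeout("no ack within timeout")  # ...but the ack was lost
        return "ack"

    return send_once, stored


send_once, stored = make_flaky_sender(lost_acks=2)
print(send_with_retries(send_once, "order-1"))
print(stored)  # three copies: each retry after a lost ack wrote the message again
```

This is why the idempotent producer exists: the broker uses producer IDs and sequence numbers to discard such retried duplicates.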

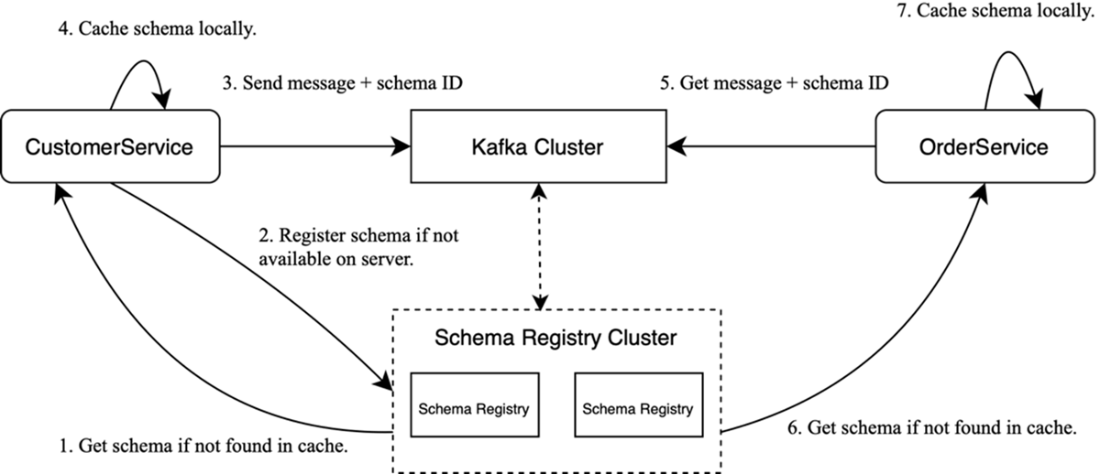

Working with Schema Registry: Schemas are managed by a separate Schema Registry cluster; messages carry only a schema ID, which clients use to fetch (and cache) the writer schema.
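The "schema ID in every message" idea can be made concrete with a sketch that assumes Confluent Schema Registry's wire format: one magic byte (0), a 4‑byte big‑endian schema ID, then the serialized payload. The registry contents and the `fetch` callable are hypothetical, and the payload is JSON only for readability; real deployments serialize with Avro, Protobuf, or JSON Schema.

```python
import json
import struct

MAGIC_BYTE = 0
HEADER = struct.Struct(">bI")  # 1-byte magic + 4-byte big-endian schema ID


def encode(schema_id: int, payload: bytes) -> bytes:
    """Prefix a serialized payload with the magic byte and schema ID."""
    return HEADER.pack(MAGIC_BYTE, schema_id) + payload


class SchemaCache:
    """Fetch each writer schema once, then serve it from memory."""

    def __init__(self, fetch):
        self._fetch = fetch  # hypothetical: schema_id -> schema (e.g., a registry HTTP lookup)
        self._cache = {}
        self.fetches = 0

    def get(self, schema_id: int):
        if schema_id not in self._cache:
            self._cache[schema_id] = self._fetch(schema_id)
            self.fetches += 1
        return self._cache[schema_id]


def decode(message: bytes, cache: SchemaCache):
    """Split a message into (writer schema, payload bytes)."""
    magic, schema_id = HEADER.unpack_from(message)
    if magic != MAGIC_BYTE:
        raise ValueError(f"unexpected magic byte {magic}")
    return cache.get(schema_id), message[HEADER.size:]


# Hypothetical registry contents: schema ID 7 describes a Customer record.
registry = {7: {"type": "record", "name": "Customer", "fields": [{"name": "id", "type": "int"}]}}
cache = SchemaCache(registry.__getitem__)

msg = encode(7, json.dumps({"id": 42}).encode("utf-8"))
for _ in range(3):
    schema, payload = decode(msg, cache)
print(schema["name"], json.loads(payload), cache.fetches)  # one fetch, then cache hits
```

Only five bytes of overhead travel with each message, while the full schema is fetched once per ID and cached.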

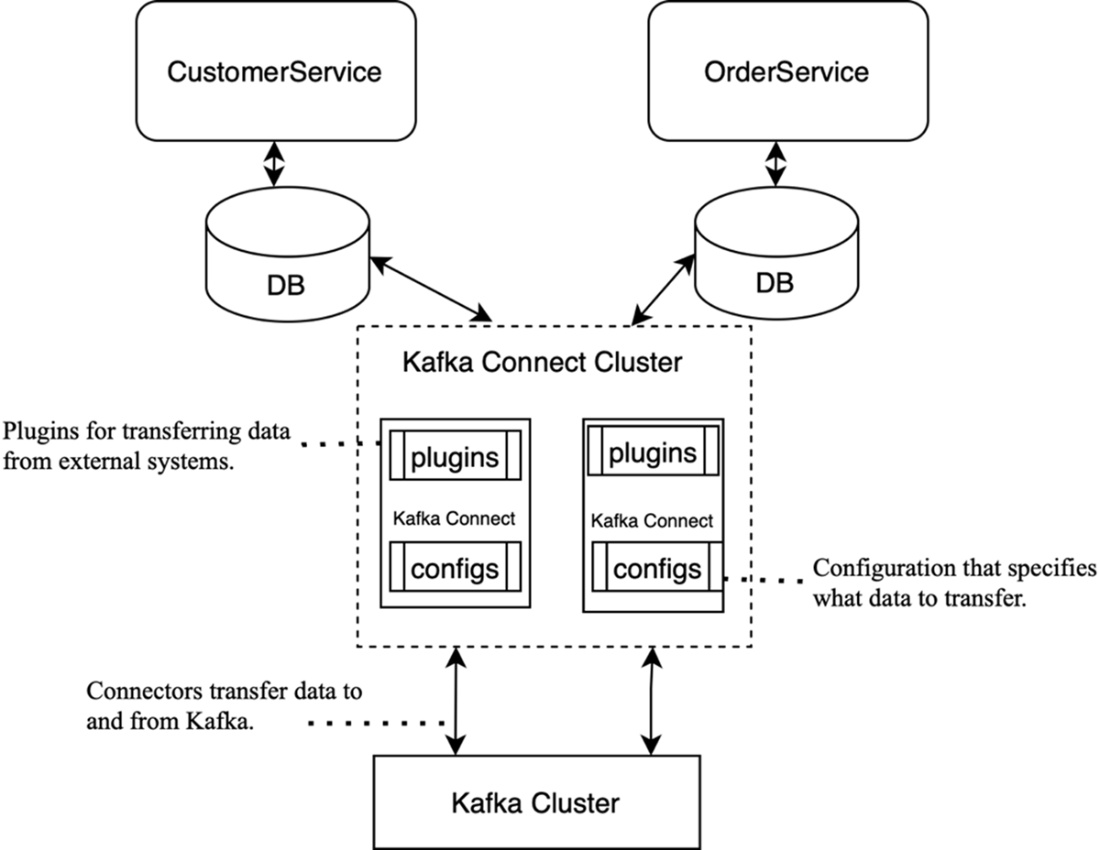

The Kafka Connect architecture: connectors integrate Kafka with external systems, moving data in and out.
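Because connectors are defined by configuration rather than code, deploying one usually means submitting JSON to the Connect REST API (`POST /connectors` with a `name` and a `config` object; 8083 is the default worker port). The sketch builds such a request with the standard library but does not send it. It uses the file source connector that ships with Kafka, which is meant for demos; the connector name, file path, and topic are hypothetical.

```python
import json
import urllib.request


def connector_request(connect_url: str, name: str, config: dict) -> urllib.request.Request:
    """Build (but do not send) a request that creates a connector."""
    body = json.dumps({"name": name, "config": config}).encode("utf-8")
    return urllib.request.Request(
        f"{connect_url}/connectors",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical values: stream lines of a local file into a Customers topic.
config = {
    "connector.class": "org.apache.kafka.connect.file.FileStreamSourceConnector",
    "tasks.max": "1",
    "file": "/tmp/customers.txt",
    "topic": "Customers",
}
req = connector_request("http://localhost:8083", "customers-file-source", config)
print(req.get_method(), req.full_url)
# urllib.request.urlopen(req) would submit it to a running Connect worker.
```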

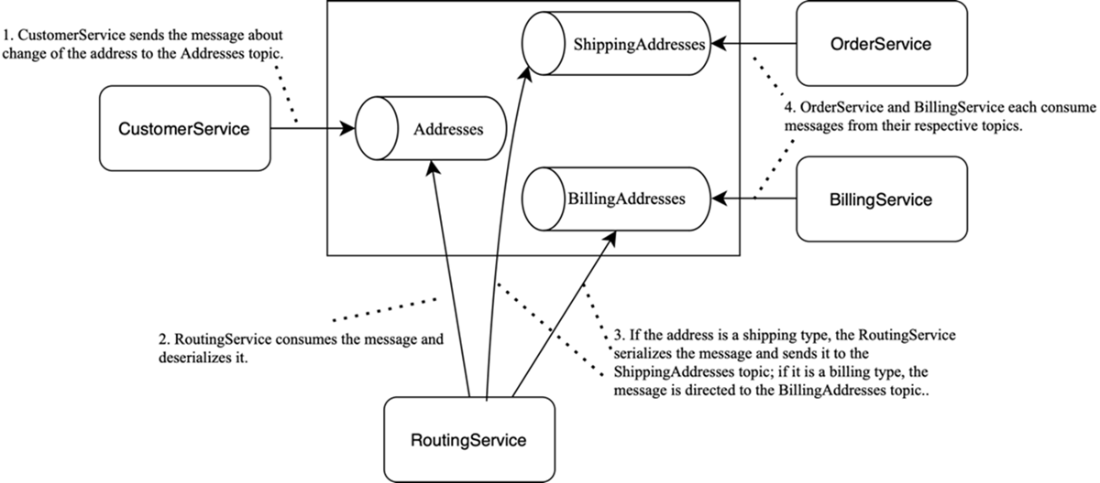

An example of a streaming application. RoutingService implements content-based routing, consuming messages from Addresses and, based on their contents (e.g., address type), publishing them to ShippingAddresses or BillingAddresses.
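The routing decision at the heart of RoutingService fits in a few lines. In this sketch the `type` field, its values, and the sample addresses are hypothetical, and consuming and publishing are simulated with plain lists rather than a real Kafka client or Kafka Streams.

```python
from collections import defaultdict

# Hypothetical mapping from the message's "type" field to an output topic.
ROUTES = {"shipping": "ShippingAddresses", "billing": "BillingAddresses"}


def route(address: dict) -> str:
    """Content-based routing: choose the output topic from the message itself."""
    try:
        return ROUTES[address["type"]]
    except KeyError:
        raise ValueError(f"no route for address: {address!r}") from None


def run_routing(messages: list) -> dict:
    """Simulate one pass of RoutingService: consume from Addresses, publish per route."""
    outputs = defaultdict(list)
    for msg in messages:
        outputs[route(msg)].append(msg)
    return dict(outputs)


addresses = [
    {"id": 1, "type": "shipping", "city": "Lyon"},
    {"id": 2, "type": "billing", "city": "Oslo"},
    {"id": 3, "type": "shipping", "city": "Porto"},
]
for topic_name, msgs in run_routing(addresses).items():
    print(topic_name, [m["id"] for m in msgs])
```

Keeping `route` a pure function makes the rule easy to test in isolation; a production service would also need a policy for messages that match no route instead of simply raising.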

Summary

- There are two primary communication patterns between services: request-response and event-driven architecture.

- In the event-driven approach, services communicate by publishing events that other services consume asynchronously.

- The key components of the Kafka ecosystem include brokers, producers, consumers, Schema Registry, Kafka Connect, and streaming applications.

- Cluster metadata management is handled by KRaft controllers.

- Kafka is versatile and well-suited for various industries and use cases, including real-time data processing, log aggregation, and microservices communication.

- Kafka components can be deployed both on-premises and in the cloud.

- The platform supports two main use cases: message delivery and state storage.
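For the state‑storage use case, Kafka offers compacted topics (`cleanup.policy=compact`), which retain at least the latest record for each key. The function below models only the end result of a full compaction; real compaction runs in the background, so consumers may still see older values for a while, and tombstones (records with a null value) are kept for a configurable period (`delete.retention.ms`) before they disappear. Keys and values are hypothetical.

```python
def compact(log: list) -> list:
    """What a fully compacted topic keeps: the latest record per key, in log order.

    A value of None plays the role of a tombstone: it marks the key as deleted.
    """
    last_index = {key: i for i, (key, _) in enumerate(log)}
    return [(k, v) for i, (k, v) in enumerate(log) if last_index[k] == i and v is not None]


log = [
    ("customer-1", {"tier": "silver"}),
    ("customer-2", {"tier": "gold"}),
    ("customer-1", {"tier": "gold"}),  # newer value for customer-1
    ("customer-2", None),              # tombstone: customer-2 deleted
]
state = dict(compact(log))  # rebuild current state by replaying the compacted log
print(state)  # {'customer-1': {'tier': 'gold'}}
```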

Kafka for Architects ebook for free
