1 Understanding foundation models

Foundation models mark a shift from building task-specific predictors to training a single, very large model on vast and diverse data so it can generalize across many tasks. They are characterized by four pillars: massive and heterogeneous training data, large parameter counts, broad task applicability, and the ability to be adapted via fine-tuning. In time series, this means one model can forecast across frequencies and temporal patterns (trends, seasonality, holiday effects) and often perform related tasks such as anomaly detection or classification. Practitioners can use them in zero-shot mode for quick, out-of-the-box forecasts, or fine-tune selected parameters to align the model to a specific domain and improve accuracy.

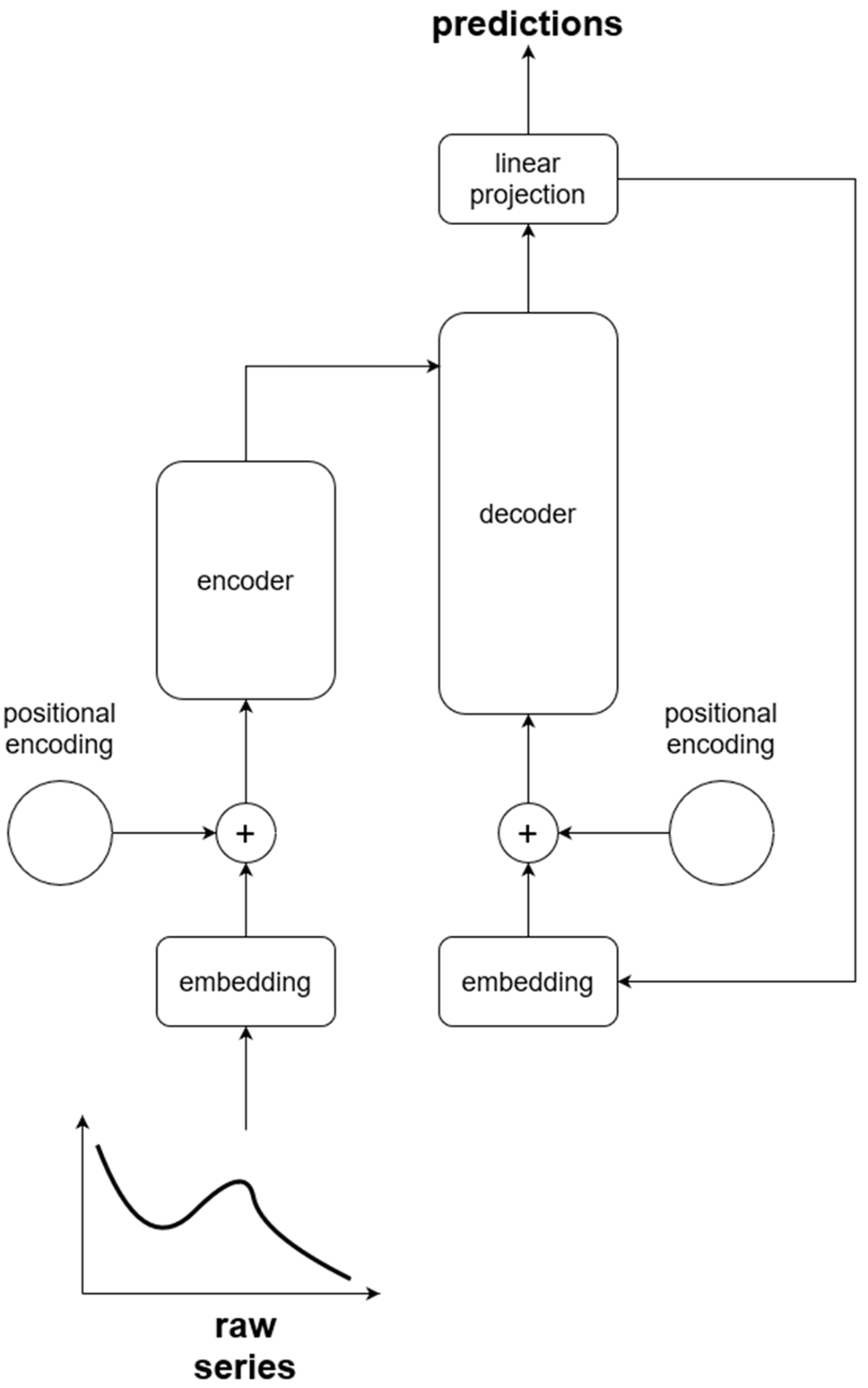

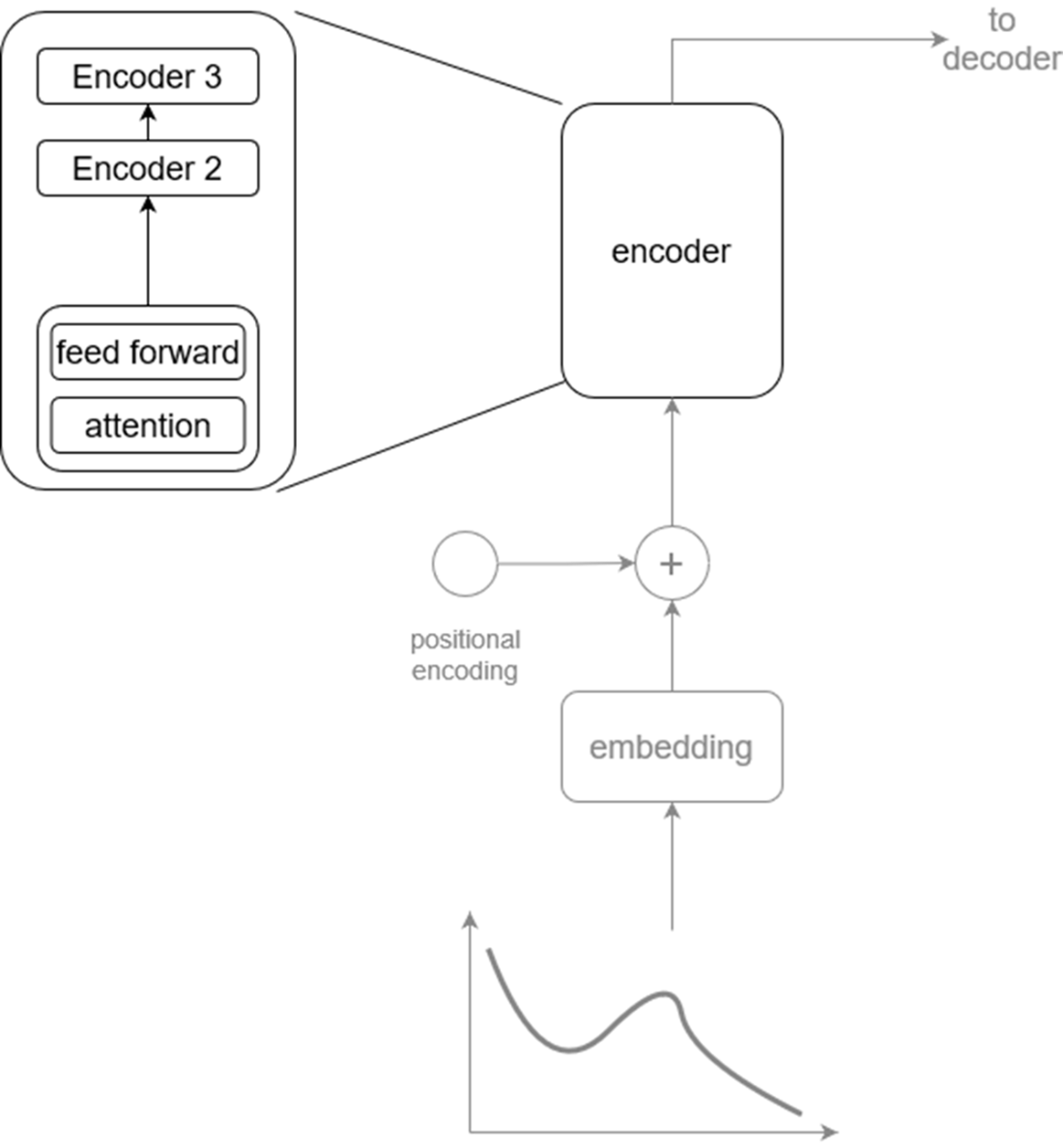

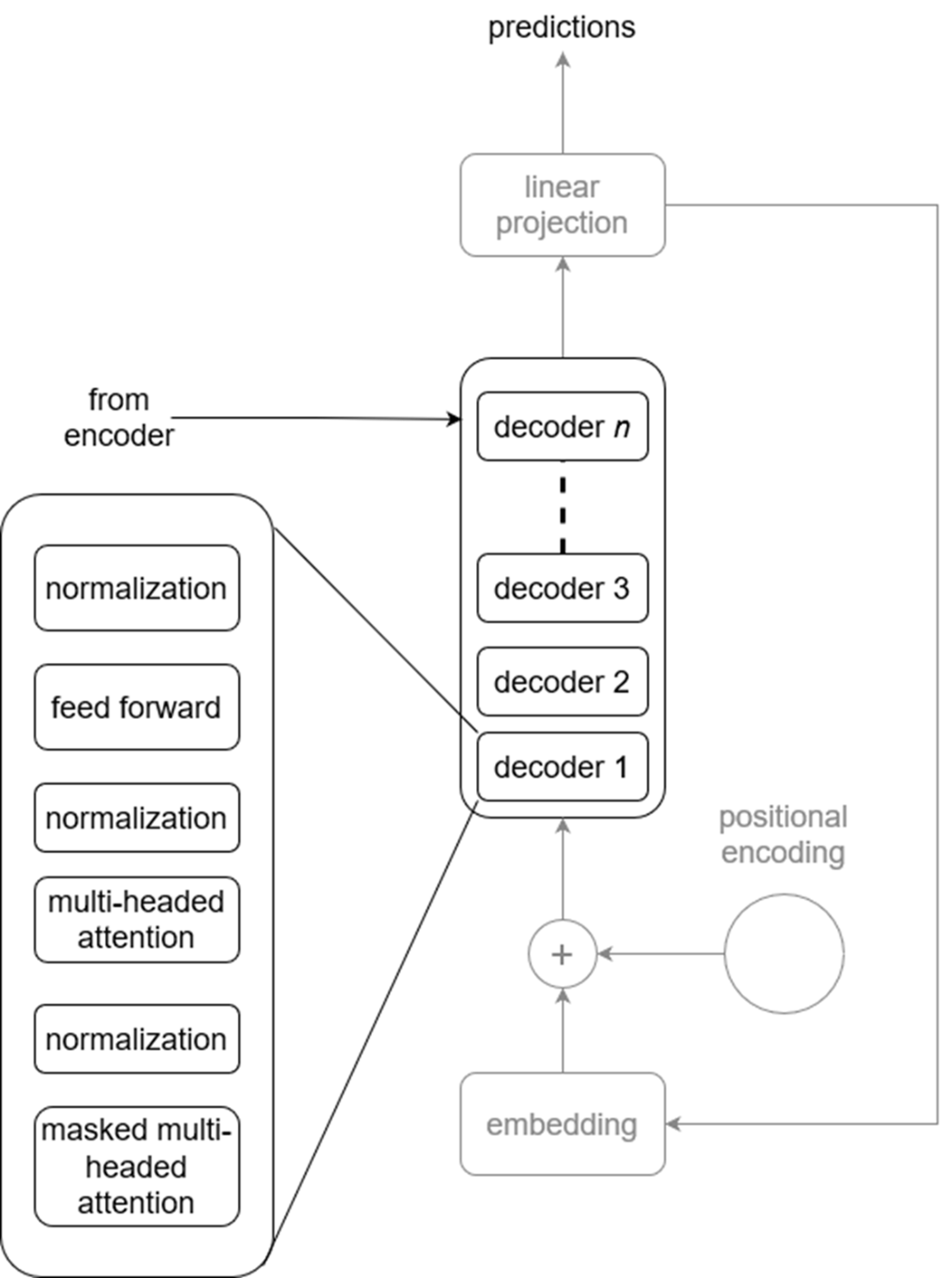

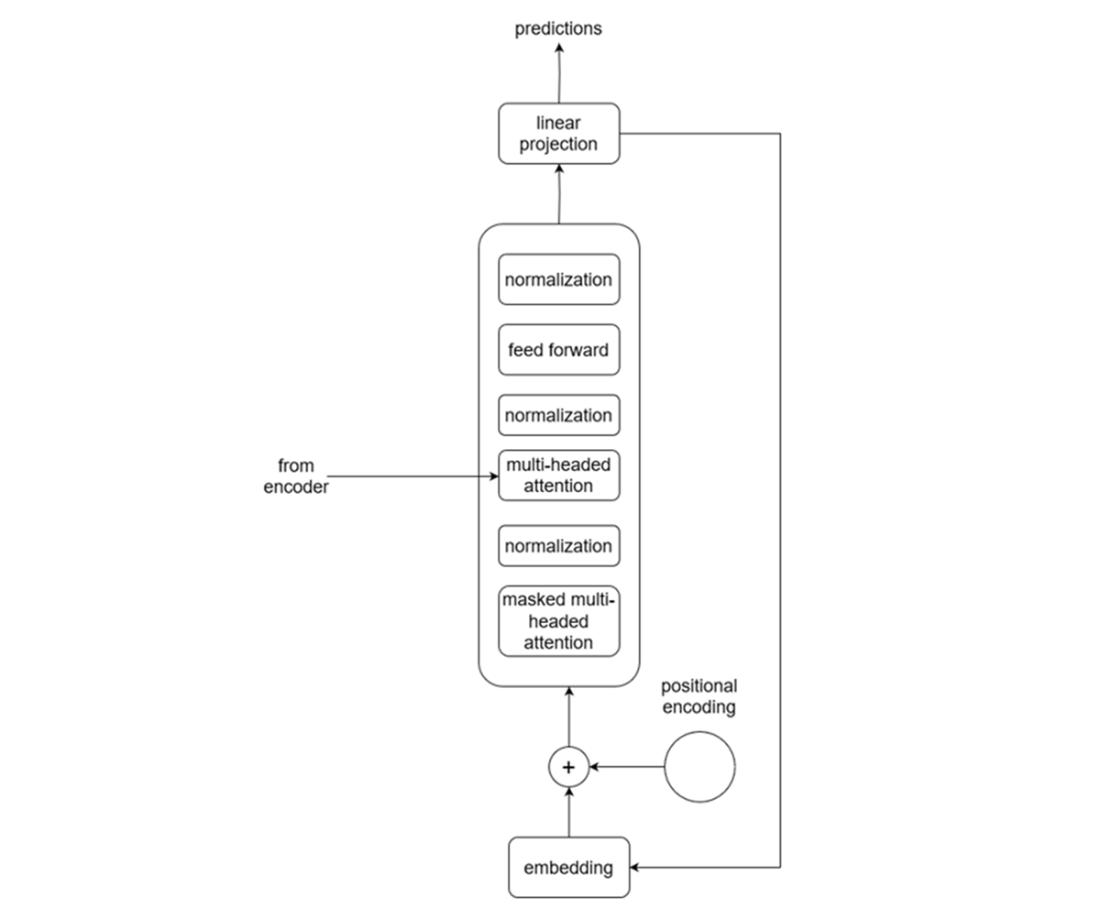

Most foundation models build on the Transformer architecture, whose mechanics are adapted for sequences like time series. Raw signals are transformed into embeddings and augmented with positional encoding to preserve ordering, then passed through an encoder that uses multi-head self-attention to learn dependencies over time. A decoder with masked attention generates forecasts autoregressively, leveraging both prior outputs and the encoder’s representation, before a final projection yields predictions for the full horizon. Understanding this pipeline helps in selecting and tuning hyperparameters, deciding when to fine-tune, and diagnosing limitations (for example, whether a given variant supports exogenous covariates or multivariate targets).

These models simplify forecasting pipelines, lower the expertise barrier, and can perform well even with limited local data, while being reusable across datasets and tasks. Trade-offs include data privacy considerations, limited control over a model’s built-in capabilities and horizons, potential underperformance versus specialized approaches in some settings, and significant compute and storage needs. The chapter emphasizes careful evaluation to decide when foundation models are the best fit and previews the book’s hands-on journey: defining model boundaries, experimenting with multiple time-series foundation models, applying zero-shot and fine-tuning workflows to real datasets (including sales forecasting and anomaly detection), and ultimately benchmarking them against traditional statistical baselines.

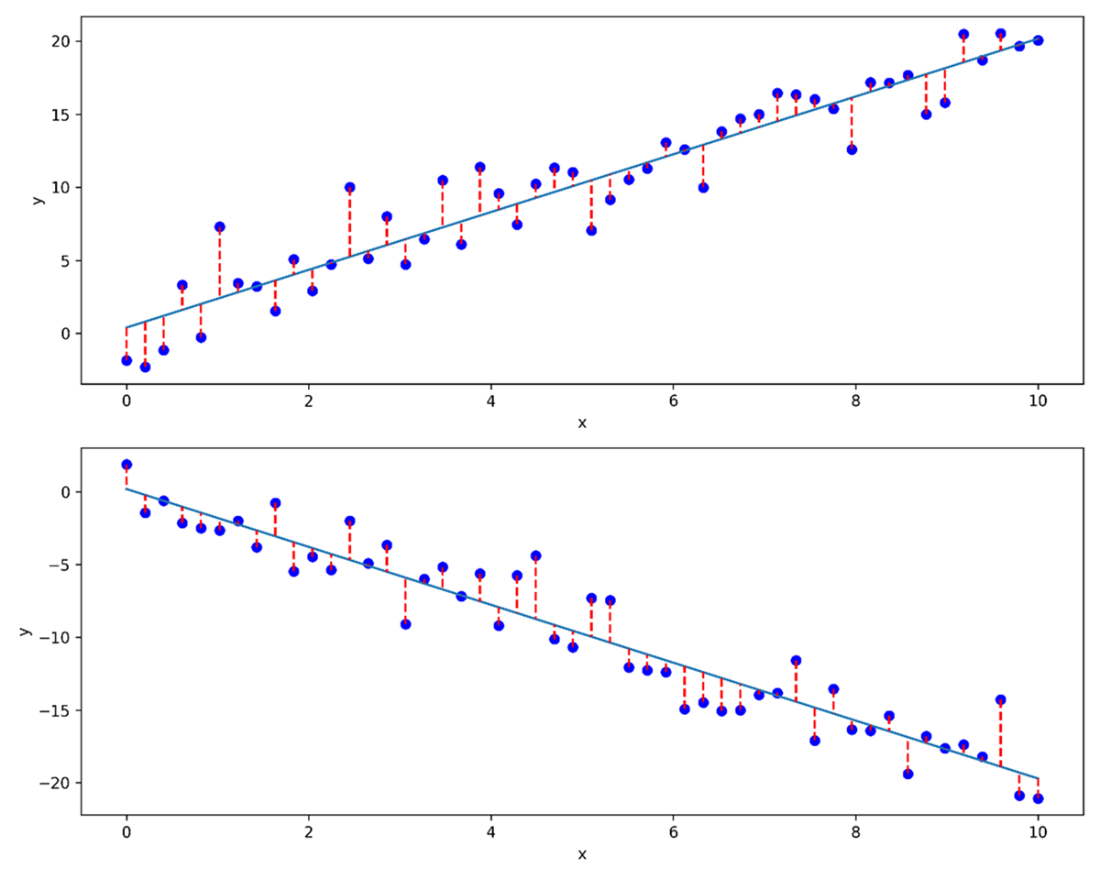

Result of performing linear regression on two different datasets. While the algorithm used to build the linear model stays the same, the resulting model differs substantially depending on the dataset used.
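The distinction between an algorithm and a model can be made concrete with a minimal sketch. The two datasets below are invented for illustration, and `fit_line` is a hypothetical helper name; the only point is that one fitting procedure yields two different sets of parameters.

```python
import numpy as np

def fit_line(x, y):
    """Apply the same algorithm (ordinary least squares) to any dataset.

    Returns the fitted parameters (slope, intercept), which together
    form the "model".
    """
    slope, intercept = np.polyfit(x, y, deg=1)
    return slope, intercept

x = np.arange(10, dtype=float)

# Two made-up datasets, used only for illustration.
y_a = 2.0 * x + 1.0    # upward trend
y_b = -0.5 * x + 4.0   # downward trend

model_a = fit_line(x, y_a)
model_b = fit_line(x, y_b)

print("model A (slope, intercept):", model_a)
print("model B (slope, intercept):", model_b)
```

The algorithm is identical in both calls, yet the learned slope and intercept differ because the data differ.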

Simplified Transformer architecture from the perspective of time series. The raw series enters at the bottom left of the figure and flows through an embedding layer and positional encoding before going into the encoder. The decoder then outputs one value at a time until the entire horizon is predicted.
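The one-value-at-a-time generation described in this caption can be sketched as a simple loop. This is not a real Transformer: `toy_predict_next` is a hypothetical stand-in for the decoder, and `autoregressive_forecast` is an illustrative name. The sketch only shows how each prediction is fed back as input for the next step.

```python
import numpy as np

def autoregressive_forecast(history, predict_next, horizon):
    """Generate `horizon` values one at a time, feeding each prediction
    back into the context used for the next step."""
    context = list(history)
    forecast = []
    for _ in range(horizon):
        next_value = predict_next(np.asarray(context))
        forecast.append(next_value)
        context.append(next_value)  # the prediction becomes part of the input
    return np.asarray(forecast)

def toy_predict_next(context):
    """Hypothetical stand-in for a decoder: repeat the last observed change."""
    return context[-1] + (context[-1] - context[-2])

history = np.array([1.0, 2.0, 3.0])
forecast = autoregressive_forecast(history, toy_predict_next, horizon=4)
print(forecast)
```

Because every step depends on earlier predictions, errors can accumulate over long horizons, which is one reason the supported horizon of a given model matters.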

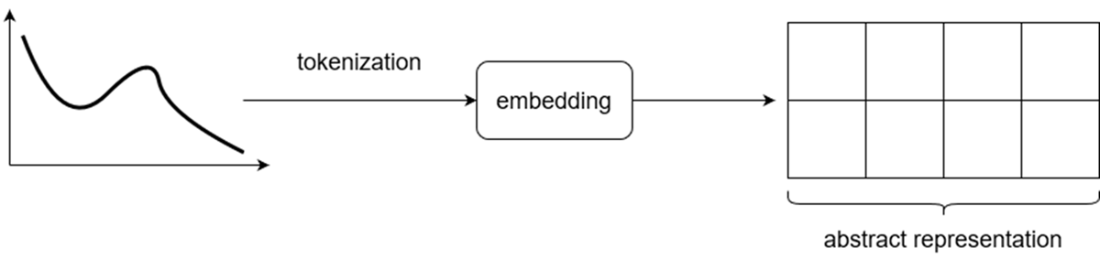

Visualizing the result of feeding a time series through an embedding layer. The input is first tokenized, and an embedding is learned. The result is the abstract representation of the input made by the model.
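A minimal sketch of this step follows, under two loudly stated assumptions: tokenization is done by cutting the series into fixed-length, non-overlapping patches (only one of several possible schemes), and the embedding is a single linear projection whose weights are random here rather than learned. The names `patch_tokenize` and `embed` are illustrative.

```python
import numpy as np

def patch_tokenize(series, patch_len):
    """Split a 1-D series into non-overlapping patches (tokens).

    Assumption: the oldest values are dropped if the length is not a
    multiple of patch_len, so the most recent data is always kept.
    """
    n_tokens = len(series) // patch_len
    trimmed = series[len(series) - n_tokens * patch_len:]
    return trimmed.reshape(n_tokens, patch_len)

def embed(tokens, weight, bias):
    """Project each token into a d_model-dimensional vector."""
    return tokens @ weight + bias

rng = np.random.default_rng(0)
series = np.sin(np.linspace(0, 6 * np.pi, 50))

patch_len, d_model = 8, 16
tokens = patch_tokenize(series, patch_len)

# Random weights stand in for parameters that would be learned in training.
weight = rng.normal(scale=0.1, size=(patch_len, d_model))
bias = np.zeros(d_model)

embeddings = embed(tokens, weight, bias)
print(tokens.shape, embeddings.shape)
```

Each row of `embeddings` is the abstract representation of one token; in a trained model, the projection weights are adjusted so that these vectors carry useful information about the series.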

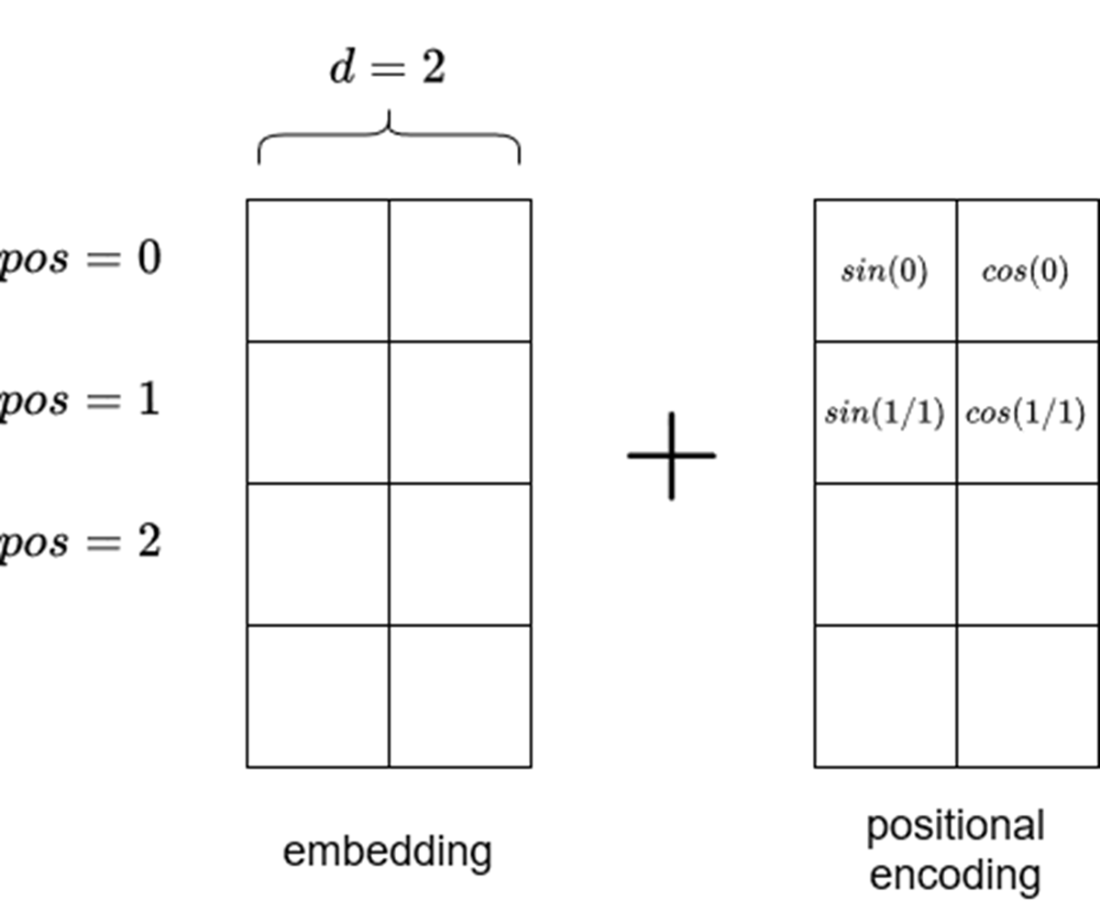

Visualizing positional encoding. Note that the positional encoding matrix must be the same size as the embedding. Also note that sine is used at even indices of the embedding dimension, while cosine is used at odd indices. The length of the input sequence runs vertically in this figure.
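The sinusoidal scheme can be written in a few lines. The sketch below assumes the formulation from the original Transformer design, where sine fills the even-indexed embedding dimensions and cosine the odd-indexed ones; the function name and the sizes are illustrative.

```python
import numpy as np

def sinusoidal_positional_encoding(seq_len, d_model):
    """Return a (seq_len, d_model) matrix of sinusoidal positional encodings."""
    positions = np.arange(seq_len)[:, None]            # (seq_len, 1)
    even_dims = np.arange(0, d_model, 2)[None, :]      # 0, 2, 4, ...
    angles = positions / np.power(10000.0, even_dims / d_model)

    pe = np.zeros((seq_len, d_model))
    pe[:, 0::2] = np.sin(angles)                       # even embedding indices
    pe[:, 1::2] = np.cos(angles[:, : d_model // 2])    # odd embedding indices
    return pe

seq_len, d_model = 6, 16
pe = sinusoidal_positional_encoding(seq_len, d_model)

# The encoding has the same shape as the embedding, so the two can be added.
rng = np.random.default_rng(0)
embeddings = rng.normal(size=(seq_len, d_model))  # placeholder embeddings
encoded = embeddings + pe
print(pe.shape, encoded.shape)
```

Because the encoding is added element-wise, its shape must match the embedding exactly, which is the size constraint noted in the caption.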

The encoder is actually a stack of many encoder layers that all share the same architecture. Each layer consists of a self-attention mechanism followed by a feed-forward layer.

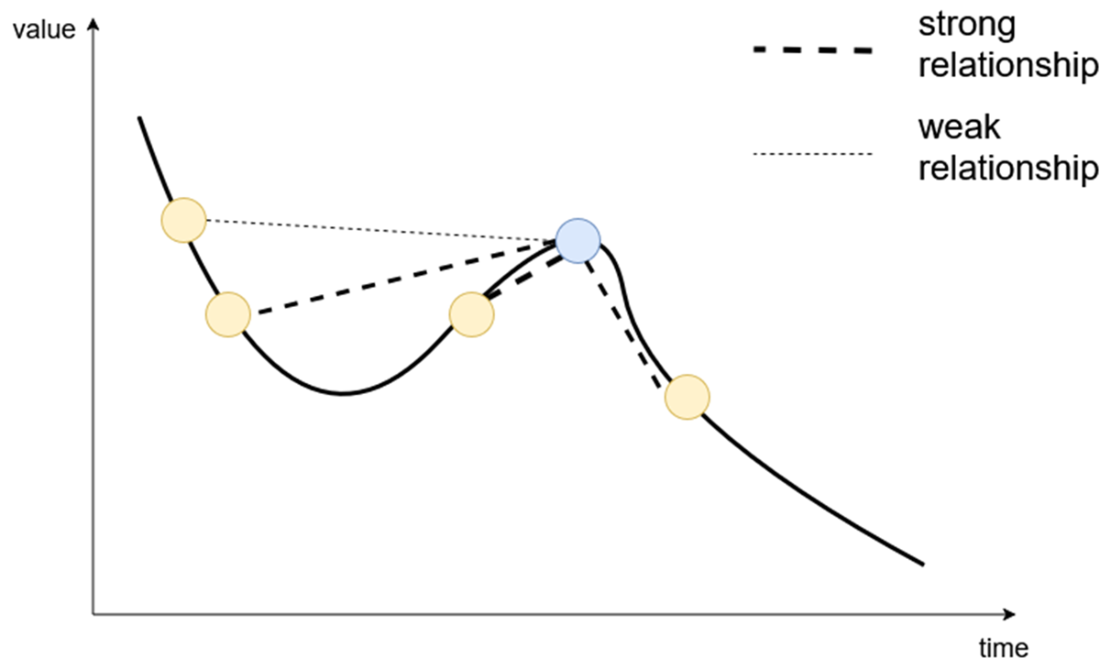

Visualizing the self-attention mechanism. This is where the model learns relationships between the current token (dark circle) and past tokens (light circles) in the same embedding. In this case, the model assigns more importance (depicted by thicker connecting lines) to closer data points than those farther away.
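A minimal single-head sketch of this mechanism is shown below, using scaled dot-product attention. The weights are random rather than learned, the sizes are arbitrary, and a causal mask is assumed so that each token attends only to itself and to past tokens, matching the figure.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(x, w_q, w_k, w_v, causal=True):
    """Single-head scaled dot-product self-attention.

    x: (seq_len, d_model). Returns the attended values and the
    (seq_len, seq_len) attention weights.
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])
    if causal:
        # Block attention to positions that come after the current token.
        future = np.triu(np.ones_like(scores, dtype=bool), k=1)
        scores = np.where(future, -np.inf, scores)
    weights = softmax(scores, axis=-1)
    return weights @ v, weights

rng = np.random.default_rng(0)
seq_len, d_model, d_head = 6, 16, 8
x = rng.normal(size=(seq_len, d_model))          # placeholder embedded tokens
w_q = rng.normal(scale=0.1, size=(d_model, d_head))
w_k = rng.normal(scale=0.1, size=(d_model, d_head))
w_v = rng.normal(scale=0.1, size=(d_model, d_head))

context, weights = self_attention(x, w_q, w_k, w_v)
print(np.round(weights, 2))
```

Row `i` of `weights` shows how much token `i` draws on each earlier token; these are the quantities the thicker and thinner lines in the figure represent. Multi-head attention runs several such heads in parallel with separate weight matrices and concatenates their outputs.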

Visualizing the decoder. Like the encoder, the decoder is actually a stack of many decoders. Each is composed of a masked multi-headed attention layer, followed by a normalization layer, a multi-headed attention layer, another normalization layer, a feed forward layer, and a final normalization layer. The normalization layers are there to keep the model stable during training.
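To illustrate what a normalization layer does, here is a minimal sketch. It assumes layer normalization, as used in the original Transformer design; the caption does not name the specific variant, and the input values are invented.

```python
import numpy as np

def layer_norm(x, gamma, beta, eps=1e-5):
    """Normalize each token vector to zero mean and unit variance,
    then apply a scale (gamma) and shift (beta)."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return gamma * (x - mean) / np.sqrt(var + eps) + beta

rng = np.random.default_rng(0)
d_model = 16
# Activations with a large offset and spread, as can occur between layers.
x = rng.normal(loc=50.0, scale=5.0, size=(4, d_model))

# gamma and beta would be learned; ones and zeros leave the result unscaled.
normalized = layer_norm(x, gamma=np.ones(d_model), beta=np.zeros(d_model))
print(normalized.mean(axis=-1), normalized.std(axis=-1))
```

Keeping activations in a consistent range from one layer to the next is what helps training stay stable in deep stacks.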

Visualizing the decoder in detail. We see that the output of the encoder is fed to the second attention layer inside the decoder. This is how the decoder can generate predictions using information learned by the encoder.
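The second attention layer can be sketched as cross-attention: queries come from the decoder's states, while keys and values come from the encoder's output. The following is a single-head illustration with random weights and arbitrary sizes, not any specific model's implementation.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attention(decoder_states, encoder_output, w_q, w_k, w_v):
    """Single-head attention where the decoder queries the encoder output."""
    q = decoder_states @ w_q      # queries from the decoder
    k = encoder_output @ w_k      # keys from the encoder
    v = encoder_output @ w_v      # values from the encoder
    scores = q @ k.T / np.sqrt(k.shape[-1])
    weights = softmax(scores, axis=-1)
    return weights @ v, weights

rng = np.random.default_rng(0)
n_history, n_generated, d_model, d_head = 10, 3, 16, 8
encoder_output = rng.normal(size=(n_history, d_model))    # placeholder
decoder_states = rng.normal(size=(n_generated, d_model))  # placeholder
w_q = rng.normal(scale=0.1, size=(d_model, d_head))
w_k = rng.normal(scale=0.1, size=(d_model, d_head))
w_v = rng.normal(scale=0.1, size=(d_model, d_head))

attended, weights = cross_attention(decoder_states, encoder_output, w_q, w_k, w_v)
print(attended.shape, weights.shape)
```

No causal mask is needed here, because the encoder output describes only the observed history; each generated step may look at all of it.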

Summary

- A foundation model is a very large machine learning model trained on massive amounts of data that can be applied to a wide variety of tasks.

- Derivatives of the Transformer architecture power most foundation models.

- Advantages of using foundation models include simpler forecasting pipelines, a lower entry barrier to forecasting, and the ability to forecast even when few data points are available.

- Drawbacks of using foundation models include privacy concerns and the fact that we do not control the model’s capabilities. A foundation model also might not be the best solution to a given problem.

- Some forecasting foundation models were designed with time series in mind, while others repurpose available large language models for time series tasks.

FAQ

What is a foundation model?

A foundation model is a large machine learning model trained on very large and diverse datasets so it can be reused across many tasks. It typically has millions or billions of parameters, supports multiple downstream tasks, and can be adapted (fine-tuned) to specific use cases.

How is a model different from an algorithm?

An algorithm is the procedure or recipe for solving a problem, while a model is the outcome of applying that algorithm to data. Using the same algorithm on different datasets produces different models, just as using the same recipe with different ingredients yields different cakes.

What are the key characteristics of a foundation model?

- Trained on very large, diverse datasets

- Contains a large number of parameters

- Applicable to multiple tasks

- Adaptable via fine-tuning

How do foundation models apply to time series forecasting?

A single foundation model can forecast series with different frequencies and temporal patterns (trends, seasonality, holidays) and may also handle related tasks like anomaly detection and classification. It can produce useful forecasts even without task-specific training and can be fine-tuned for better performance.

What is the Transformer architecture and why is it used here?

The Transformer is a deep learning architecture built around attention mechanisms that capture complex dependencies efficiently. For time series, it uses an encoder to learn relationships in historical data and a decoder to generate forecasts, making it a common backbone for foundation forecasting models.

What are embeddings and positional encoding in time series Transformers?

Embeddings convert raw time series tokens (values or windows) into dense vectors the model can process. Positional encoding (often sinusoidal) is added so the model knows the order of observations, which attention alone does not capture. Preventing the model from “seeing the future” is handled separately, by masking in the decoder.

How does self-attention help with time series?

Self-attention learns how much each past token should influence the current step, capturing dependencies across time. Multi-headed attention lets the model focus on different patterns simultaneously (e.g., trends in one head, seasonality in another).

How does the decoder generate forecasts, and what does masking do?

The decoder uses masked multi-head attention so it cannot access future information, generating one step at a time and feeding each prediction back to produce the full horizon. It also attends to the encoder’s output to leverage the learned representation of the history.

What are the advantages of using foundation models for forecasting?

- Out-of-the-box pipelines with minimal setup

- Lower expertise barrier to get started

- Works even with few data points

- Reusable across tasks and datasets

What are the limitations and when might a custom model be better?

- Privacy concerns with hosted/proprietary models

- Limited control over capabilities (e.g., horizon limits, lack of exogenous or multivariate support)

- May not be optimal for a specific niche use case

- High storage and compute requirements (though APIs can reduce overhead)

Time Series Forecasting Using Foundation Models ebook for free
